Jens Rieken

A LiDAR-based real-time capable 3D Perception System for Automated Driving in Urban Domains

May 07, 2020

Abstract: We present a LiDAR-based and real-time capable 3D perception system for automated driving in urban domains. The hierarchical system design is able to model stationary and movable parts of the environment simultaneously and under real-time conditions. Our approach extends the state of the art with innovative detailed enhancements for perceiving road users and drivable corridors, even in the case of non-flat ground surfaces and overhanging or protruding elements. We describe a runtime-efficient point cloud processing pipeline consisting of adaptive ground surface estimation, 3D clustering and motion classification stages. Based on the pipeline's output, the stationary environment is represented in a multi-feature mapping and fusion approach. Movable elements are represented in an object tracking system that can use multiple reference points to account for viewpoint changes. We further enhance the tracking system by explicitly considering occlusion and ambiguity cases. Our system is evaluated on a subset of the TUBS Road User Dataset. We enhance common performance metrics by considering application-driven aspects of real-world traffic scenarios. The perception system shows impressive results and is able to cope with the addressed scenarios while still preserving real-time capability.

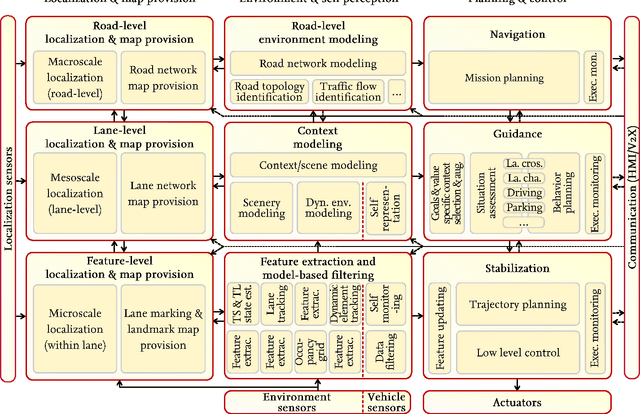

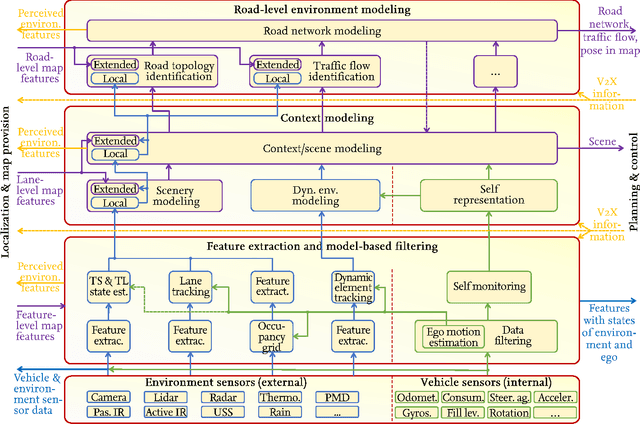

Towards a Functional System Architecture for Automated Vehicles

Mar 30, 2017

Abstract: This paper presents a functional system architecture for an automated vehicle. It provides an overall, generic structure that is independent of the specific implementation of any particular vehicle project. Yet, it has been inspired by and cross-checked against a real-world automated driving implementation in the Stadtpilot project at the Technische Universität Braunschweig. The architecture covers aspects such as environment and self-perception, planning and control, localization, map provision, Vehicle-to-X communication, and interaction with human operators.
