Jens Nevens

Decentralised Emergence of Robust and Adaptive Linguistic Conventions in Populations of Autonomous Agents Grounded in Continuous Worlds

Jan 16, 2024

Abstract: This paper introduces a methodology through which a population of autonomous agents can establish a linguistic convention that enables them to refer to arbitrary entities that they observe in their environment. The linguistic convention emerges in a decentralised manner through local communicative interactions between pairs of agents drawn from the population. The convention consists of symbolic labels (word forms) associated to concept representations (word meanings) that are grounded in a continuous feature space. The concept representations of each agent are individually constructed yet compatible on a communicative level. Through a range of experiments, we show (i) that the methodology enables a population to converge on a communicatively effective, coherent and human-interpretable linguistic convention, (ii) that it is naturally robust against sensor defects in individual agents, (iii) that it can effectively deal with noisy observations, uncalibrated sensors and heteromorphic populations, (iv) that the method is adequate for continual learning, and (v) that the convention self-adapts to changes in the environment and communicative needs of the agents.

A Practical Guide to Studying Emergent Communication through Grounded Language Games

Apr 20, 2020

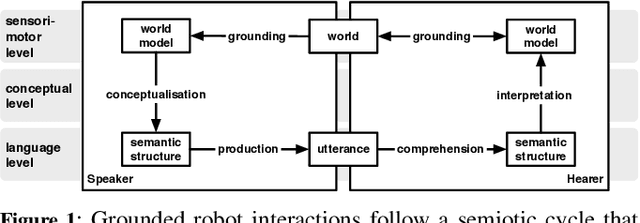

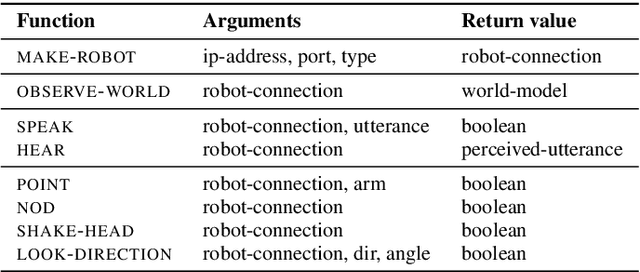

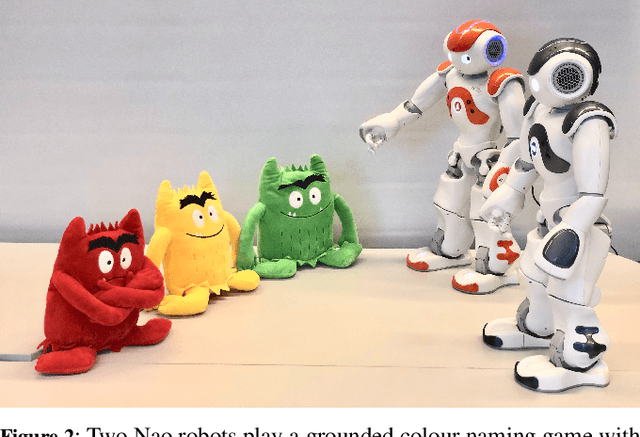

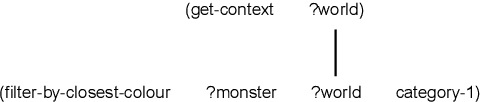

Abstract: The question of how an effective and efficient communication system can emerge in a population of agents that need to solve a particular task attracts more and more attention from researchers in many fields, including artificial intelligence, linguistics and statistical physics. A common methodology for studying this question consists of carrying out multi-agent experiments in which a population of agents takes part in a series of scripted and task-oriented communicative interactions, called 'language games'. While each individual language game is typically played by two agents in the population, a large series of games allows the population to converge on a shared communication system. Setting up an experiment in which a rich system for communicating about the real world emerges is a major enterprise, as it requires a variety of software components for running multi-agent experiments, for interacting with sensors and actuators, for conceptualising and interpreting semantic structures, and for mapping between these semantic structures and linguistic utterances. The aim of this paper is twofold. On the one hand, it introduces a high-level robot interface that extends the Babel software system, presenting for the first time a toolkit that provides flexible modules for dealing with each subtask involved in running advanced grounded language game experiments. On the other hand, it provides a practical guide to using the toolkit for implementing such experiments, taking a grounded colour naming game experiment as a didactic example.

Computational Models of Tutor Feedback in Language Acquisition

Jul 07, 2017

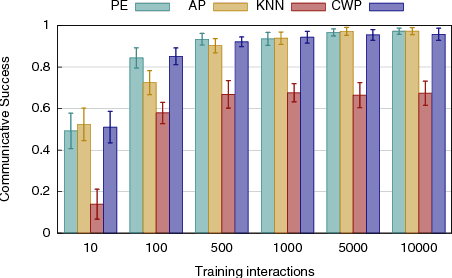

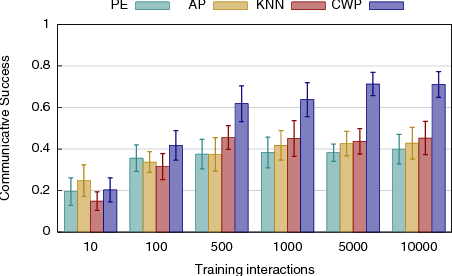

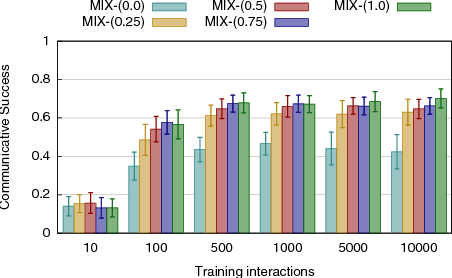

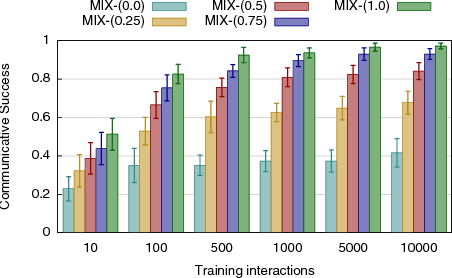

Abstract: This paper investigates the role of tutor feedback in language learning using computational models. We compare two dominant paradigms in language learning, interactive learning and cross-situational learning, which differ primarily in the role of social feedback such as gaze or pointing. We analyze the relationship between these two paradigms and propose a new mixed paradigm that combines them and makes it possible to test algorithms in experiments that mix trials with no feedback and trials with social feedback. To deal with mixed feedback experiments, we develop new algorithms and show how they perform with respect to traditional kNN and prototype approaches.
