Jean-Marie Gorce

MARACAS

Neyman-Pearson Detector for Ambient Backscatter Zero-Energy-Devices Beacons

Apr 14, 2025

Abstract:Recently, a novel ultra-low power indoor wireless positioning system has been proposed. In this system, Zero-Energy-Devices (ZED) beacons are deployed in indoor environments and located on a map with unique broadcast identifiers. They harvest ambient energy to power themselves and backscatter ambient waves from cellular networks to send their identifiers. This paper presents a novel detection method for ZEDs in ambient backscatter systems, with an emphasis on performance evaluation through experimental setups and simulations. We introduce a Neyman-Pearson detection framework, which leverages a predefined false alarm probability to determine the optimal detection threshold. This method, applied to the analysis of backscatter signals in a controlled testbed environment, incorporates the use of BC sequences to enhance signal detection accuracy. The experimental setup, conducted on the FIT/CorteXlab testbed, employs a two-node configuration for signal transmission and reception. The key performance metric, the peak-to-lobe ratio, is evaluated, confirming the effectiveness of the proposed detection model. The results demonstrate a detection system that effectively handles varying noise levels and identifies ZEDs with high reliability. The simulation results show the robustness of the model, highlighting its capacity to achieve the desired detection performance even with stringent false alarm thresholds. This work paves the way for robust ZED detection in real-world scenarios, contributing to the advancement of wireless communication technologies.
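
The threshold-setting step of a Neyman-Pearson test can be illustrated with a minimal sketch. It assumes a scalar test statistic that is Gaussian with known standard deviation under the noise-only hypothesis and shifted by a known mean when a beacon is present; this is a textbook simplification, not the detector described in the paper. The names `np_threshold` and `detection_probability` are hypothetical.

```python
from statistics import NormalDist


def np_threshold(pfa: float, noise_std: float = 1.0) -> float:
    """Threshold such that P(statistic > threshold | noise only) equals pfa.

    Assumption: the statistic is N(0, noise_std^2) under the noise-only hypothesis.
    """
    if not 0.0 < pfa < 1.0:
        raise ValueError("pfa must lie strictly between 0 and 1")
    return noise_std * NormalDist().inv_cdf(1.0 - pfa)


def detection_probability(pfa: float, mean_shift: float, noise_std: float = 1.0) -> float:
    """Detection probability when the statistic is N(mean_shift, noise_std^2) under signal."""
    threshold = np_threshold(pfa, noise_std)
    return 1.0 - NormalDist(mu=mean_shift, sigma=noise_std).cdf(threshold)


if __name__ == "__main__":
    for pfa in (1e-1, 1e-2, 1e-3):
        thr = np_threshold(pfa)
        pd = detection_probability(pfa, mean_shift=3.0)
        print(f"Pfa={pfa:g}  threshold={thr:.3f}  Pd={pd:.3f}")
```

Under these assumptions, tightening the false alarm probability raises the threshold and lowers the detection probability for a fixed signal strength, which is the trade-off the abstract refers to.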

Streaming Federated Learning with Markovian Data

Mar 24, 2025

Abstract:Federated learning (FL) is now recognized as a key framework for communication-efficient collaborative learning. Most theoretical and empirical studies, however, rely on the assumption that clients have access to pre-collected data sets, with limited investigation into scenarios where clients continuously collect data. In many real-world applications, particularly when data is generated by physical or biological processes, client data streams are often modeled by non-stationary Markov processes. Unlike standard i.i.d. sampling, the performance of FL with Markovian data streams remains poorly understood due to the statistical dependencies between client samples over time. In this paper, we investigate whether FL can still support collaborative learning with Markovian data streams. Specifically, we analyze the performance of Minibatch SGD, Local SGD, and a variant of Local SGD with momentum. We answer affirmatively under standard assumptions and smooth non-convex client objectives: the sample complexity is proportional to the inverse of the number of clients with a communication complexity comparable to the i.i.d. scenario. However, the sample complexity for Markovian data streams remains higher than for i.i.d. sampling.

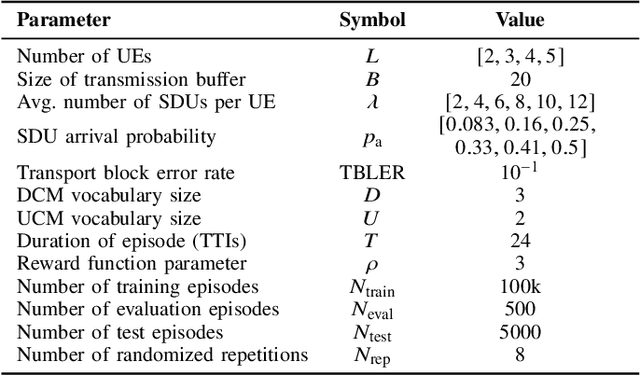

Joint Slot and Power Optimization for Grant Free Random Access with Unknown and Heterogeneous Device Activity

Jul 26, 2024

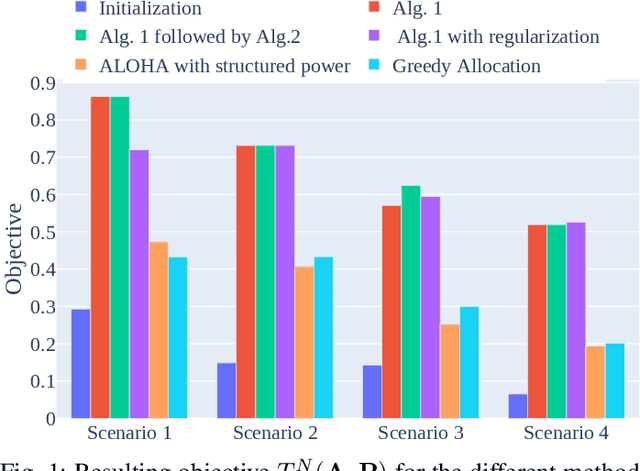

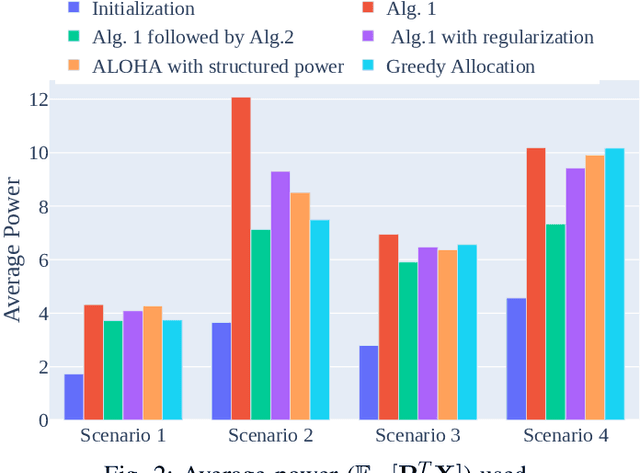

Abstract:Grant Free Random Access (GFRA) is a popular protocol in the Internet of Things (IoT) to reduce the control signaling. GFRA is a framed protocol where each frame is split into two parts: a device identification part and a data transmission part, the latter of which can be viewed as a form of Frame Slotted ALOHA (FSA). A common assumption in FSA is device homogeneity; that is, the probability that a device seeks to transmit data in a particular frame is common to all devices and independent of the other devices. Recent work has investigated the possibility of tuning the FSA protocol to the statistics of the network by changing the probability for a particular device to access a particular slot. However, power control with a successive interference cancellation (SIC) receiver has not yet been considered to further increase the performance of the tuned FSA protocols. In this paper, we propose algorithms to jointly optimize both the slot selection and the transmit power of the devices to minimize the outage of the devices in the network. We show via a simulation study that our algorithms can outperform baselines (including slotted ALOHA) in terms of the expected number of devices transmitting without outage and in terms of transmit power.
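
To make the idea of tuning per-device slot-access probabilities concrete, here is a minimal sketch of the expected number of collision-free transmissions in one frame. It assumes independent device activity and a plain collision model in which a transmission succeeds only if the device is alone in its slot, so it covers neither power control nor SIC and is not the paper's algorithm. The function name `expected_successes` and its arguments are hypothetical.

```python
from typing import Sequence


def expected_successes(
    activity: Sequence[float],
    slot_probs: Sequence[Sequence[float]],
) -> float:
    """Expected number of devices that transmit alone in their slot.

    activity[i]      : probability that device i is active in the frame
    slot_probs[i][k] : probability that device i, when active, picks slot k
    Assumption: devices are independent and any collision is a failure.
    """
    n_slots = len(slot_probs[0])
    total = 0.0
    for i, (p_i, q_i) in enumerate(zip(activity, slot_probs)):
        for k in range(n_slots):
            # Probability that no other device transmits in slot k.
            alone = 1.0
            for j, (p_j, q_j) in enumerate(zip(activity, slot_probs)):
                if j != i:
                    alone *= 1.0 - p_j * q_j[k]
            total += p_i * q_i[k] * alone
    return total


if __name__ == "__main__":
    activity = [0.9, 0.9, 0.1]
    uniform = [[0.5, 0.5]] * 3
    tuned = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    print("uniform slot selection:", expected_successes(activity, uniform))
    print("tuned slot selection:  ", expected_successes(activity, tuned))
```

In this toy setting, steering the two frequently active devices to different slots raises the expected number of successes compared with uniform slot selection.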

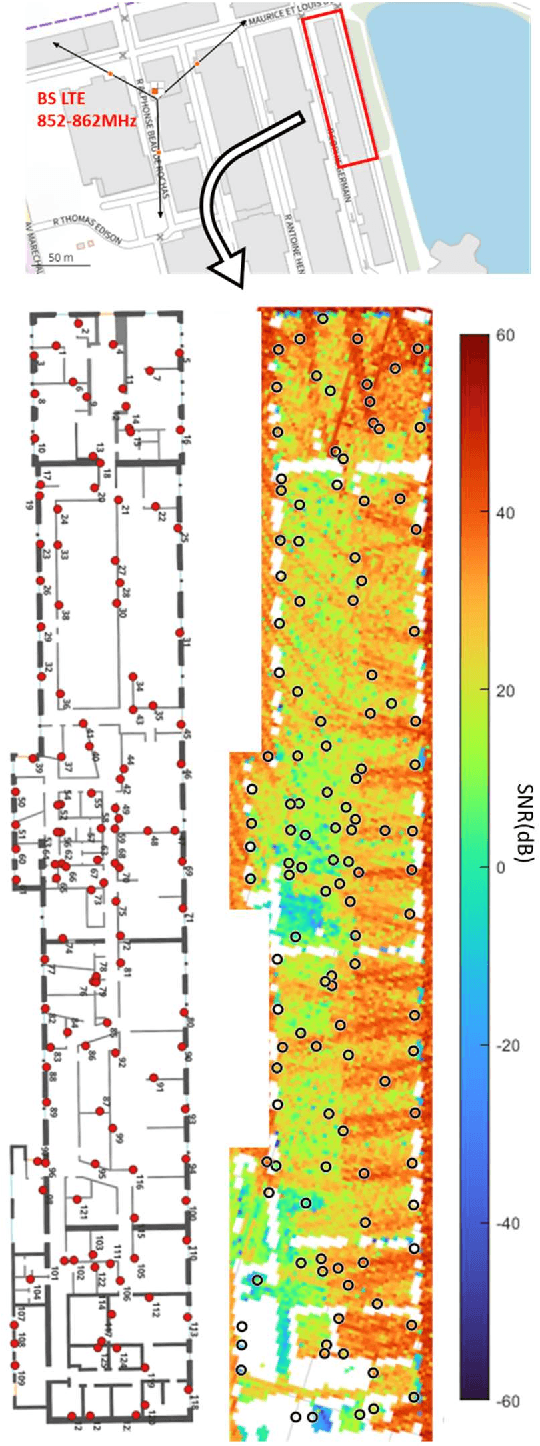

Indoor Localization of Smartphones Thanks to Zero-Energy-Devices Beacons

Feb 26, 2024

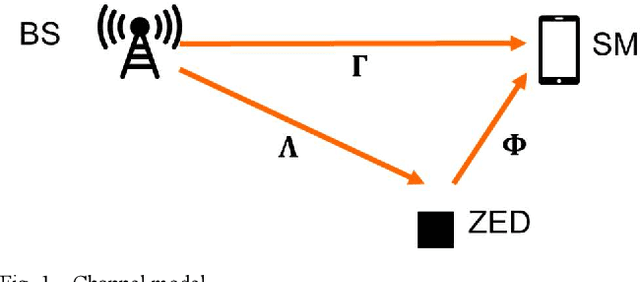

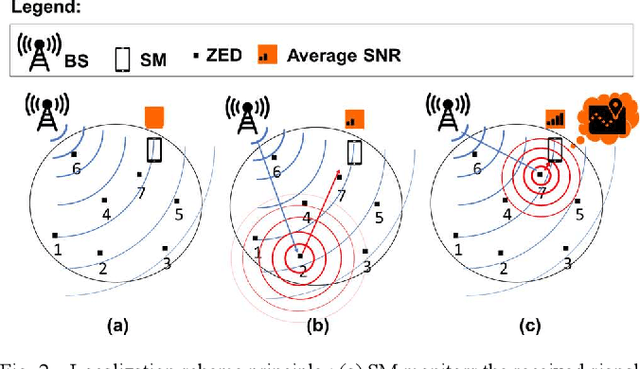

Abstract:In this paper, we present a new ultra-low power method of indoor localization of smartphones (SM) based on zero-energy-devices (ZEDs) beacons instead of active wireless beacons. Each ZED is equipped with a unique identification number coded into a bit-sequence, and its precise position on the map is recorded. An SM inside the building is assumed to have access to the map of ZEDs. The ZED backscatters ambient waves from base stations (BSs) of the cellular network. The SM detects the ZED message in the variations of the received ambient signal from the BS. We accurately simulate the ambient waves from a BS of the Orange 4G commercial network, inside an existing large building covered with ZED beacons, thanks to a ray-tracing-based propagation simulation tool. Our first performance evaluation study shows that the proposed localization system enables us to determine in which room an SM is located, in a realistic and challenging propagation scenario.

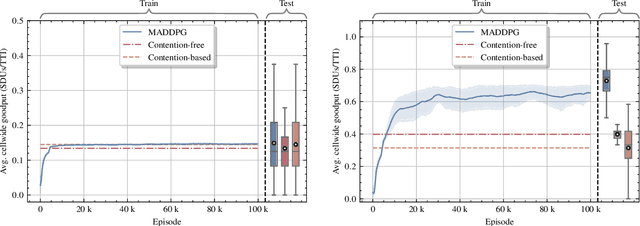

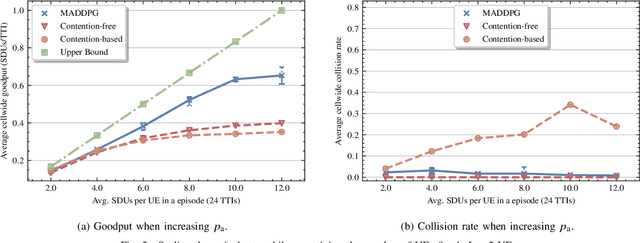

Scalable Joint Learning of Wireless Multiple-Access Policies and their Signaling

Jun 08, 2022

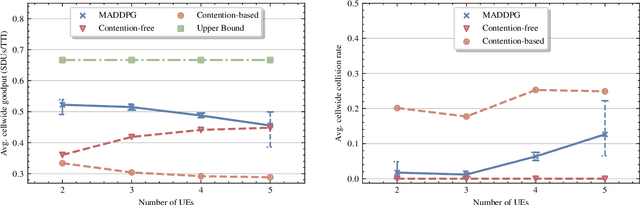

Abstract:In this paper, we apply a multi-agent reinforcement learning (MARL) framework allowing the base station (BS) and the user equipments (UEs) to jointly learn a channel access policy and its signaling in a wireless multiple access scenario. In this framework, the BS and UEs are reinforcement learning (RL) agents that need to cooperate in order to deliver data. The comparison with contention-free and contention-based baselines shows that our framework achieves superior performance in terms of goodput, even in high traffic situations, while maintaining a low collision rate. The scalability of the proposed method is also studied, since it is a major problem in MARL, and this paper provides first results toward addressing it.

Learning OFDM Waveforms with PAPR and ACLR Constraints

Oct 21, 2021

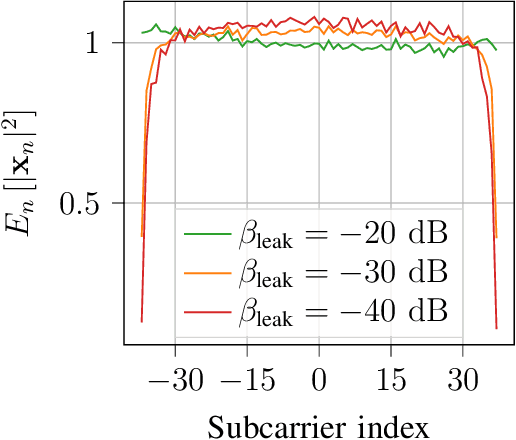

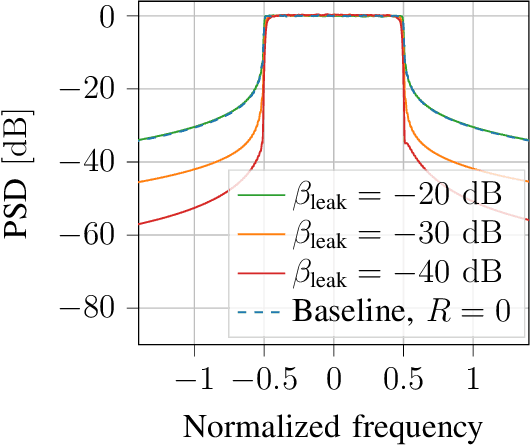

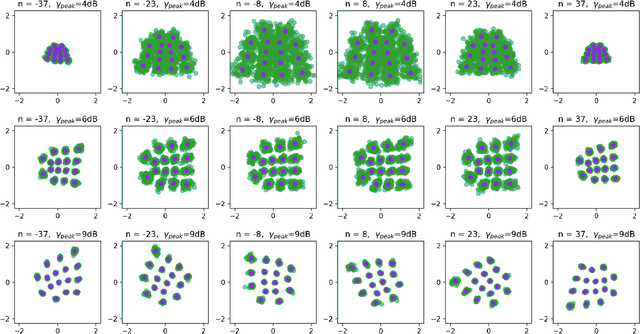

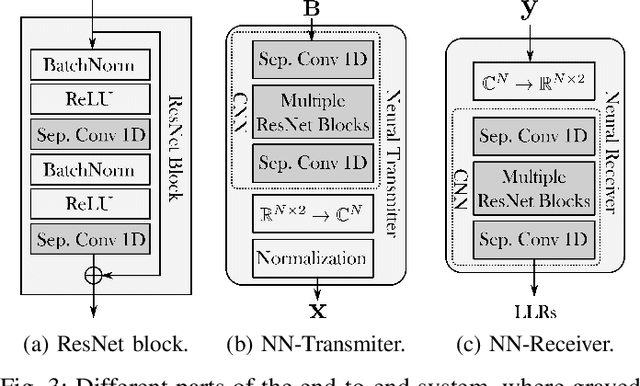

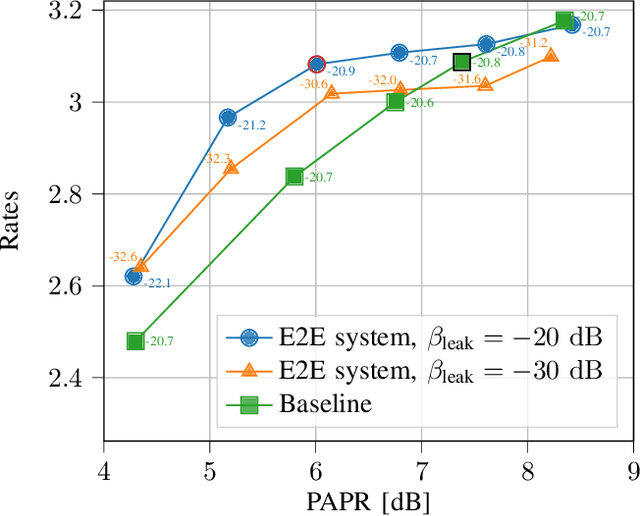

Abstract:An attractive research direction for future communication systems is the design of new waveforms that can both support high throughputs and present advantageous signal characteristics. Although most modern systems use orthogonal frequency-division multiplexing (OFDM) for its efficient equalization, this waveform suffers from multiple limitations such as a high adjacent channel leakage ratio (ACLR) and high peak-to-average power ratio (PAPR). In this paper, we propose a learning-based method to design OFDM-based waveforms that satisfy selected constraints while maximizing an achievable information rate. To that aim, we model the transmitter and the receiver as convolutional neural networks (CNNs) that respectively implement a high-dimensional modulation scheme and perform the detection of the transmitted bits. This leads to an optimization problem that is solved using the augmented Lagrangian method. Evaluation results show that the end-to-end system is able to satisfy target PAPR and ACLR constraints and allows significant throughput gains compared to a tone reservation (TR) baseline. An additional advantage is that no dedicated pilots are needed.
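
The PAPR limitation mentioned above can be illustrated with a short sketch that builds one OFDM symbol with a naive inverse DFT and measures its peak-to-average power ratio. It assumes no oversampling, no cyclic prefix, and no pulse shaping, so it only approximates the PAPR of a real transmitted waveform. The helper names `idft` and `papr_db` are hypothetical.

```python
import cmath
import math
from typing import List, Sequence


def idft(freq_symbols: Sequence[complex]) -> List[complex]:
    """Naive inverse DFT: maps per-subcarrier symbols to time-domain samples."""
    n = len(freq_symbols)
    return [
        sum(sym * cmath.exp(2j * math.pi * k * t / n) for k, sym in enumerate(freq_symbols)) / n
        for t in range(n)
    ]


def papr_db(samples: Sequence[complex]) -> float:
    """Peak-to-average power ratio of a block of samples, in dB."""
    powers = [abs(s) ** 2 for s in samples]
    mean_power = sum(powers) / len(powers)
    return 10.0 * math.log10(max(powers) / mean_power)


if __name__ == "__main__":
    n_subcarriers = 8
    single_tone = [1.0 + 0j] + [0j] * (n_subcarriers - 1)
    all_equal = [1.0 + 0j] * n_subcarriers
    print("single subcarrier PAPR (dB):", round(papr_db(idft(single_tone)), 2))
    print("all-equal symbols PAPR (dB):", round(papr_db(idft(all_equal)), 2))
```

A single active subcarrier yields a constant-envelope signal, while identical symbols on all subcarriers add coherently into one peak, giving a PAPR equal to the number of subcarriers. This worst case is what PAPR-constrained waveform design aims to avoid.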

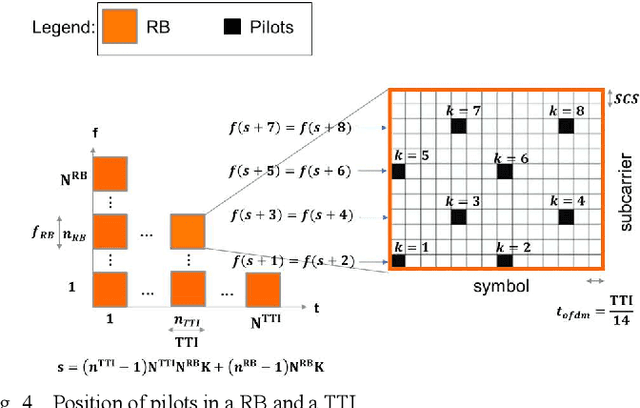

The Emergence of Wireless MAC Protocols with Multi-Agent Reinforcement Learning

Aug 17, 2021

Abstract:In this paper, we propose a new framework, exploiting the multi-agent deep deterministic policy gradient (MADDPG) algorithm, to enable a base station (BS) and user equipment (UE) to come up with a medium access control (MAC) protocol in a multiple access scenario. In this framework, the BS and UEs are reinforcement learning (RL) agents that need to learn to cooperate in order to deliver data. The network nodes can exchange control messages to collaborate and deliver data across the network, but without any prior agreement on the meaning of the control messages. In such a framework, the agents have to learn not only the channel access policy, but also the signaling policy. The collaboration between agents is shown to be important, by comparing the proposed algorithm to ablated versions where either the communication between agents or the central critic is removed. The comparison with a contention-free baseline shows that our framework achieves a superior performance in terms of goodput and can effectively be used to learn a new protocol.

Machine Learning-enhanced Receive Processing for MU-MIMO OFDM Systems

Jun 30, 2021

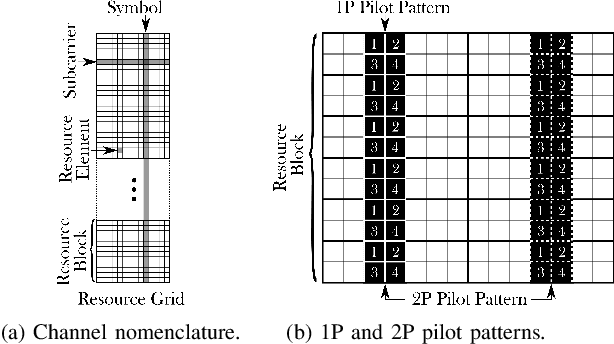

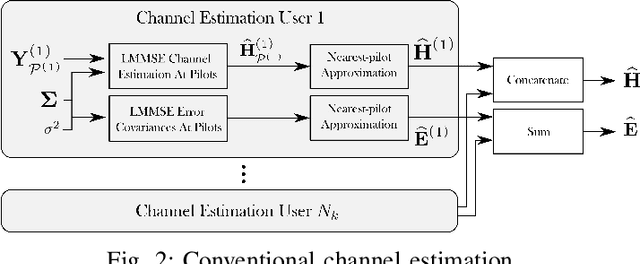

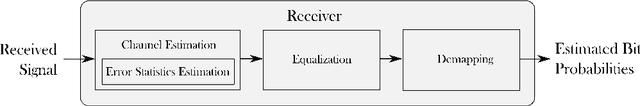

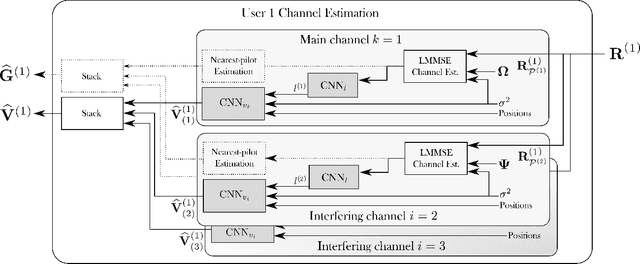

Abstract:Machine learning (ML) can be used in various ways to improve multi-user multiple-input multiple-output (MU-MIMO) receive processing. Typical approaches either augment a single processing step, such as symbol detection, or replace multiple steps jointly by a single neural network (NN). These techniques demonstrate promising results but often assume perfect channel state information (CSI) or fail to satisfy the interpretability and scalability constraints imposed by practical systems. In this paper, we propose a new strategy which preserves the benefits of a conventional receiver, but enhances specific parts with ML components. The key idea is to exploit the orthogonal frequency-division multiplexing (OFDM) signal structure to improve both the demapping and the computation of the channel estimation error statistics. Evaluation results show that the proposed ML-enhanced receiver beats practical baselines on all considered scenarios, with significant gains at high speeds.

End-to-End Learning of OFDM Waveforms with PAPR and ACLR Constraints

Jun 30, 2021

Abstract:Orthogonal frequency-division multiplexing (OFDM) is widely used in modern wireless networks thanks to its efficient handling of multipath environments. However, it suffers from a poor peak-to-average power ratio (PAPR), which requires a large power backoff and degrades the power amplifier (PA) efficiency. In this work, we propose to use a neural network (NN) at the transmitter to learn a high-dimensional modulation scheme that allows control of the PAPR and the adjacent channel leakage ratio (ACLR). On the receiver side, an NN-based receiver is implemented to carry out demapping of the transmitted bits. The two NNs operate on top of OFDM, and are jointly optimized in an end-to-end manner using a training algorithm that enforces constraints on the PAPR and ACLR. Simulation results show that the learned waveforms enable higher information rates than a tone reservation baseline, while satisfying predefined PAPR and ACLR targets.

Machine Learning for MU-MIMO Receive Processing in OFDM Systems

Dec 15, 2020

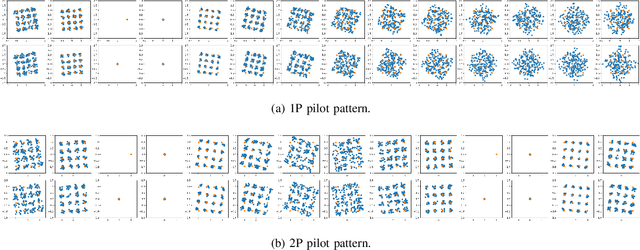

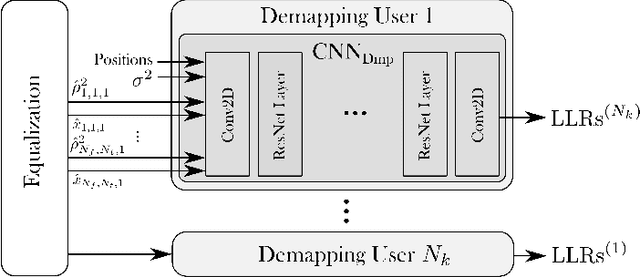

Abstract:Machine learning (ML) is increasingly used to enhance the performance of multi-user multiple-input multiple-output (MU-MIMO) receivers. However, it is still unclear if such methods are truly competitive with respect to conventional methods in realistic scenarios and under practical constraints. In addition to enabling accurate signal reconstruction on realistic channel models, MU-MIMO receive algorithms must allow for easy adaptation to a varying number of users without the need for retraining. In contrast to existing work, we propose an ML-enhanced MU-MIMO receiver that builds on top of a conventional linear minimum mean squared error (LMMSE) architecture. It preserves the interpretability and scalability of the LMMSE receiver, while improving its accuracy in two ways. First, convolutional neural networks (CNNs) are used to compute an approximation of the second-order statistics of the channel estimation error, which are required for accurate equalization. Second, a CNN-based demapper jointly processes a large number of orthogonal frequency-division multiplexing (OFDM) symbols and subcarriers, which allows it to compute better log likelihood ratios (LLRs) by compensating for channel aging. The resulting architecture can be used in the up- and downlink and is trained in an end-to-end manner, removing the need for hard-to-get perfect channel state information (CSI) during the training phase. Simulation results demonstrate consistent performance improvements over the baseline, which are especially pronounced in high mobility scenarios.
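
A scalar sketch shows why the second-order statistics of the channel estimation error matter for equalization. It assumes a single user, a single antenna, and one resource element with model y = h x + n, unit-power symbols, and a channel estimate whose error is zero-mean with known variance; the paper's receiver is multi-user and learns these statistics with CNNs, which this sketch does not attempt. The function name `lmmse_equalize` is hypothetical.

```python
def lmmse_equalize(
    y: complex,
    h_hat: complex,
    noise_var: float,
    est_error_var: float = 0.0,
) -> complex:
    """Scalar LMMSE estimate of a unit-power symbol x from y = h * x + n.

    Assumptions: h = h_hat + e, where e is zero-mean with variance est_error_var
    and independent of x and n. The term e * x then acts as extra noise, so its
    variance is added to noise_var in the denominator.
    """
    denom = abs(h_hat) ** 2 + est_error_var + noise_var
    if denom == 0.0:
        raise ValueError("channel estimate and variances cannot all be zero")
    return h_hat.conjugate() * y / denom


if __name__ == "__main__":
    h = 0.8 - 0.6j           # true channel (illustrative value)
    x = (1 + 1j) / 2 ** 0.5  # unit-power QPSK symbol
    y = h * x                # noiseless observation, for illustration only
    print("ideal estimate:      ", lmmse_equalize(y, h, noise_var=0.0))
    print("with error variance: ", lmmse_equalize(y, h, noise_var=0.1, est_error_var=0.2))
```

Ignoring the estimation error variance makes the equalizer overconfident in its channel estimate; including it shrinks the symbol estimate toward zero, consistent with the larger effective noise.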
