Jean-Marc Jézéquel

DiverSe

Learning Software Configuration Spaces: A Systematic Literature Review

Jun 07, 2019

Abstract:Most modern software systems (operating systems like Linux or Android, Web browsers like Firefox or Chrome, video encoders like ffmpeg, x264 or VLC, mobile and cloud applications, etc.) are highly-configurable. Hundreds of configuration options, features, or plugins can be combined, each potentially with distinct functionality and effects on execution time, security, energy consumption, etc. Due to the combinatorial explosion and the cost of executing software, it is quickly impossible to exhaustively explore the whole configuration space. Hence, numerous works have investigated the idea of learning it from a small sample of configurations' measurements. The pattern "sampling, measuring, learning" has emerged in the literature, with several practical interests for both software developers and end-users of configurable systems. In this survey, we report on the different application objectives (e.g., performance prediction, configuration optimization, constraint mining), use-cases, targeted software systems and application domains. We review the various strategies employed to gather a representative and cost-effective sample. We describe automated software techniques used to measure functional and non-functional properties of configurations. We classify machine learning algorithms and how they relate to the pursued application. Finally, we also describe how researchers evaluate the quality of the learning process. The findings from this systematic review show that the potential application objective is important; there are a vast number of case studies reported in the literature from the basis of several domains and software systems. Yet, the huge variant space of configurable systems is still challenging and calls to further investigate the synergies between artificial intelligence and software engineering.

Towards Adversarial Configurations for Software Product Lines

May 30, 2018

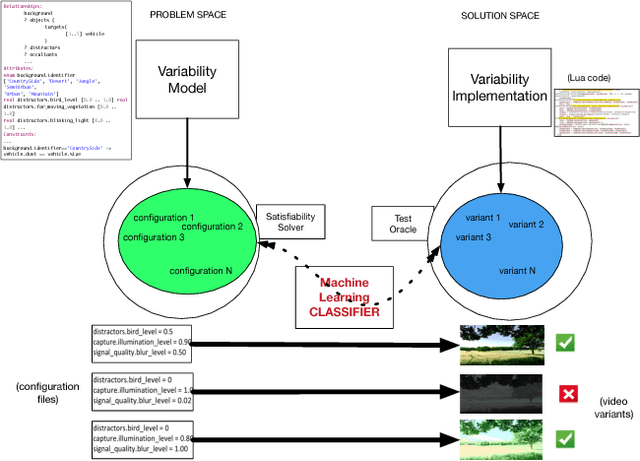

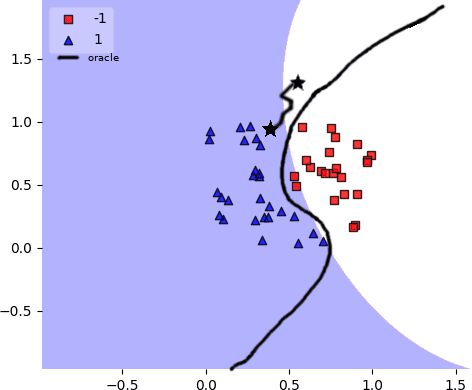

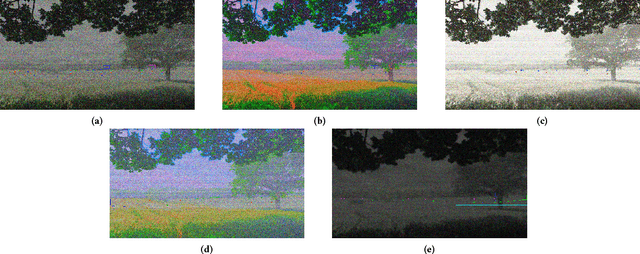

Abstract:Ensuring that all supposedly valid configurations of a software product line (SPL) lead to well-formed and acceptable products is challenging since it is most of the time impractical to enumerate and test all individual products of an SPL. Machine learning classifiers have been recently used to predict the acceptability of products associated with unseen configurations. For some configurations, a tiny change in their feature values can make them pass from acceptable to non-acceptable regarding users' requirements and vice-versa. In this paper, we introduce the idea of leveraging these specific configurations and their positions in the feature space to improve the classifier and therefore the engineering of an SPL. Starting from a variability model, we propose to use Adversarial Machine Learning techniques to create new, adversarial configurations out of already known configurations by modifying their feature values. Using an industrial video generator we show how adversarial configurations can improve not only the classifier, but also the variability model, the variability implementation, and the testing oracle.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge