Jean Pierre Richa

UWED: Unsigned Distance Field for Accurate 3D Scene Representation and Completion

Mar 17, 2022

Abstract: Scene completion is the task of completing missing geometry from a partial scan of a scene. The majority of previous methods compute an implicit representation from range data using a Truncated Signed Distance Function (TSDF) on a 3D grid as input to neural networks. The truncation limits, but does not remove, the ambiguous cases introduced by the sign for non-closed surfaces. As an alternative, we present an Unsigned Distance Function (UDF) called Unsigned Weighted Euclidean Distance (UWED) as input to scene completion neural networks. UWED is simple and efficient as a surface representation, and can be computed on any noisy point cloud without normals. To obtain the explicit geometry, we present a method for extracting a point cloud from discretized UDF values on a regular grid. We compare different SDFs and UDFs for the scene completion task on indoor and outdoor point clouds collected from RGB-D and LiDAR sensors and show improved completion using the proposed UWED function.
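To make the idea of a discretized unsigned distance field concrete, here is a minimal sketch. It is not the UWED function from the paper: the abstract does not give the weighting scheme, so the code uses the plain Euclidean distance from each voxel centre to its nearest point. The function names, the grid parameters, and the thresholding step are all illustrative assumptions, and the thresholding is only a crude stand-in for the paper's point cloud extraction method.

```python
import math
from itertools import product


def unsigned_distance_grid(points, origin, voxel_size, dims):
    """Map each voxel index (i, j, k) to the distance from its centre
    to the nearest input point (brute force, no normals required)."""
    field = {}
    for idx in product(range(dims[0]), range(dims[1]), range(dims[2])):
        # Centre of the voxel in world coordinates.
        centre = tuple(origin[a] + (idx[a] + 0.5) * voxel_size for a in range(3))
        field[idx] = min(math.dist(centre, p) for p in points)
    return field


def near_surface_voxels(field, threshold):
    """Hypothetical extraction step: keep voxels whose unsigned distance
    is at most `threshold`, returned in sorted index order."""
    return sorted(idx for idx, d in field.items() if d <= threshold)


# Two points lying at the centres of the first two voxels of a 3x1x1 grid.
points = [(0.5, 0.5, 0.5), (1.5, 0.5, 0.5)]
field = unsigned_distance_grid(points, origin=(0.0, 0.0, 0.0),
                               voxel_size=1.0, dims=(3, 1, 1))
surface = near_surface_voxels(field, threshold=0.25)
```

Because the field carries no sign, it needs no inside/outside decision, which is why it stays well defined on open surfaces. The brute-force nearest-point search is quadratic and is only suitable for toy inputs; a spatial index would be needed for real scans.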

AdaSplats: Adaptative Splats from Semantic Point Cloud for Fast and High-Fidelity LiDAR Simulation

Mar 17, 2022

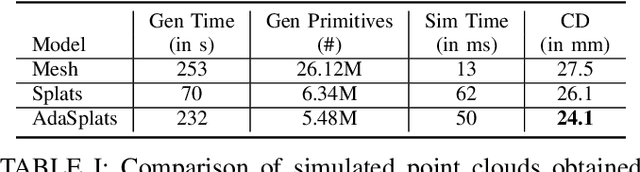

Abstract: LiDAR sensors provide rich 3D information about surrounding scenes and are becoming increasingly important for autonomous vehicles' tasks, such as semantic segmentation, object detection, and tracking. Being able to simulate a LiDAR sensor will accelerate the testing, validation, and deployment of autonomous vehicles while reducing the cost and eliminating the risks of testing in real-world scenarios. To tackle the issue of simulating LiDAR data with high fidelity, we present a pipeline that leverages real-world point clouds acquired by mobile mapping systems. Point-based geometry representations, more specifically splats, have proven their ability to accurately model the underlying surface in very large point clouds. After showing the limits of basic splatting, we introduce an adaptative splats generation method that accurately models the underlying 3D geometry, especially for thin structures. We have also developed a LiDAR simulation that runs 200 times faster than real time by ray casting on the GPU, with a focus on handling large point clouds efficiently. We test our LiDAR simulation in real-world conditions and report qualitative and quantitative results against basic splatting and meshing, demonstrating the superiority of our modeling technique.

Paris-CARLA-3D: A Real and Synthetic Outdoor Point Cloud Dataset for Challenging Tasks in 3D Mapping

Nov 22, 2021

Abstract: Paris-CARLA-3D is a dataset of several dense colored point clouds of outdoor environments built by a mobile LiDAR and camera system. The data are composed of two sets: synthetic data from the open source CARLA simulator (700 million points) and real data acquired in the city of Paris (60 million points), hence the name Paris-CARLA-3D. One advantage of this dataset is that the LiDAR and camera platform used to produce the real data was also simulated in the open source CARLA simulator. In addition, the real data were manually annotated using the semantic tags of CARLA, which allows transfer methods from synthetic to real data to be tested. The objective of this dataset is to provide a challenging benchmark to evaluate and improve methods on difficult vision tasks for the 3D mapping of outdoor environments: semantic segmentation, instance segmentation, and scene completion. For each task, we describe the evaluation protocol as well as the experiments carried out to establish a baseline.
