Jayakumar Rajadas

FragmentNet: Adaptive Graph Fragmentation for Graph-to-Sequence Molecular Representation Learning

Feb 03, 2025

Abstract: Molecular property prediction uses molecular structure to infer chemical properties. Chemically interpretable representations that capture meaningful intramolecular interactions enhance the usability and effectiveness of these predictions. However, existing methods often rely on atom-based or rule-based fragment tokenization, which can be chemically suboptimal and lack scalability. We introduce FragmentNet, a graph-to-sequence foundation model with an adaptive, learned tokenizer that decomposes molecular graphs into chemically valid fragments while preserving structural connectivity. FragmentNet integrates a VQVAE-GCN for hierarchical fragment embeddings, spatial positional encodings for graph serialization, global molecular descriptors, and a transformer. Pre-trained with Masked Fragment Modeling and fine-tuned on MoleculeNet tasks, FragmentNet outperforms models with similarly scaled architectures and datasets while rivaling larger state-of-the-art models that require significantly more resources. This framework enables adaptive decomposition, serialization, and reconstruction of molecular graphs, facilitating fragment-based editing and visualization of property trends in learned embeddings, which makes it a powerful tool for molecular design and optimization.

MVMTnet: A Multi-variate Multi-modal Transformer for Multi-class Classification of Cardiac Irregularities Using ECG Waveforms and Clinical Notes

Feb 21, 2023

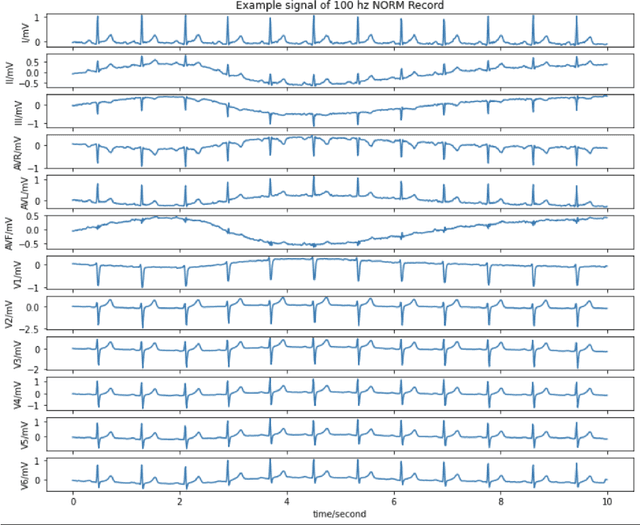

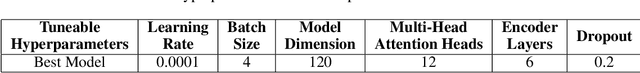

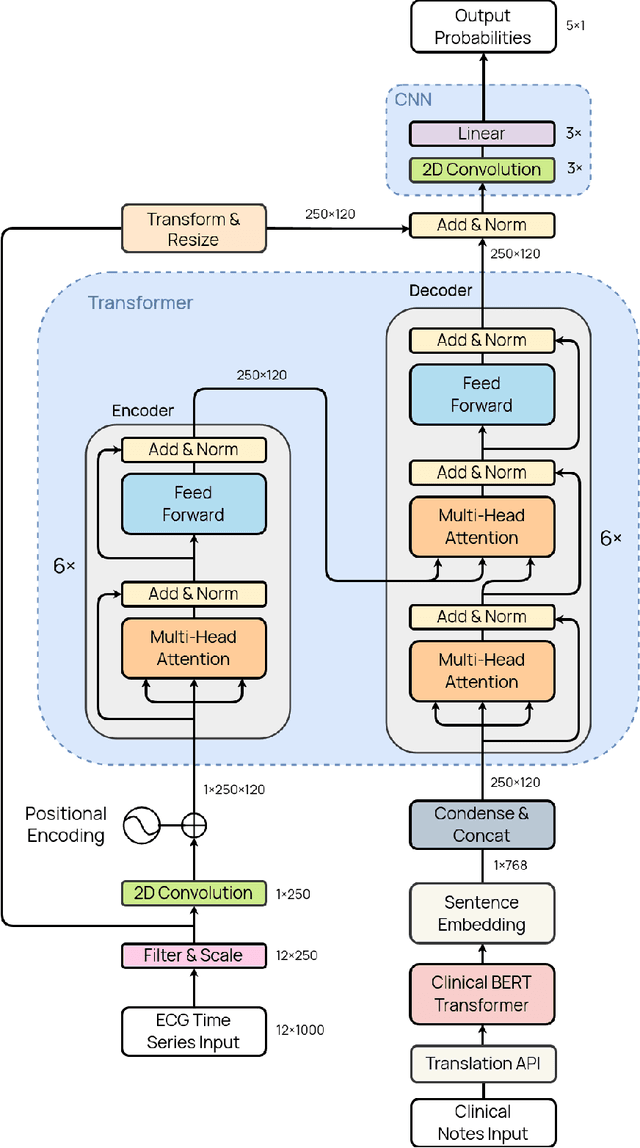

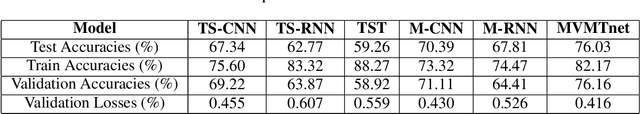

Abstract: Deep learning provides an excellent avenue for optimizing diagnosis and patient monitoring in clinical applications, which can critically improve the response time to the onset of various conditions. Cardiovascular disease is one such condition: the rising number of patients increasingly outweighs the availability of medical resources in different parts of the world, and a core challenge is the automated classification of various cardiac abnormalities. Existing deep learning approaches have largely been limited to detecting the existence of an irregularity, as in binary classification, which has been achieved using networks such as CNNs and RNNs/LSTMs. The next step is to accurately perform multi-class classification and determine the specific condition(s) from the inherently noisy multi-variate waveform, a difficult task that could benefit from (1) a more powerful sequential network and (2) the integration of clinical notes, which provide valuable semantic and clinical context from human doctors. Recently, Transformers have emerged as the state-of-the-art architecture for forecasting and prediction using time-series data, owing to their multi-headed attention mechanism and their ability to process whole sequences and learn both long- and short-range dependencies. The proposed novel multi-modal Transformer architecture would be able to accurately perform this task while demonstrating the cross-domain effectiveness of Transformers, establishing a method for incorporating multiple data modalities within a Transformer for classification tasks, and laying the groundwork for automating real-time patient condition monitoring in clinical and ER settings.
