Mark Karlov

Rehabilitation Exercise Quality Assessment through Supervised Contrastive Learning with Hard and Soft Negatives

Mar 05, 2024

Abstract:Exercise-based rehabilitation programs have proven to be effective in enhancing the quality of life and reducing mortality and rehospitalization rates. AI-driven virtual rehabilitation, which allows patients to independently complete exercises at home, utilizes AI algorithms to analyze exercise data, providing feedback to patients and updating clinicians on their progress. These programs commonly prescribe a variety of exercise types, leading to a distinct challenge in rehabilitation exercise assessment datasets: while abundant in overall training samples, these datasets often have a limited number of samples for each individual exercise type. This disparity hampers the ability of existing approaches to train generalizable models with such a small sample size per exercise. Addressing this issue, our paper introduces a novel supervised contrastive learning framework with hard and soft negative samples that effectively utilizes the entire dataset to train a single model applicable to all exercise types. This model, with a Spatial-Temporal Graph Convolutional Network (ST-GCN) architecture, demonstrated enhanced generalizability across exercises and a decrease in overall complexity. Through extensive experiments on three publicly available rehabilitation exercise assessment datasets, the University of Idaho-Physical Rehabilitation Movement Data (UI-PRMD), IntelliRehabDS (IRDS), and KInematic assessment of MOvement and clinical scores for remote monitoring of physical REhabilitation (KIMORE), our method has been shown to surpass existing methods, setting a new benchmark in rehabilitation exercise assessment accuracy.

MVMTnet: A Multi-variate Multi-modal Transformer for Multi-class Classification of Cardiac Irregularities Using ECG Waveforms and Clinical Notes

Feb 21, 2023

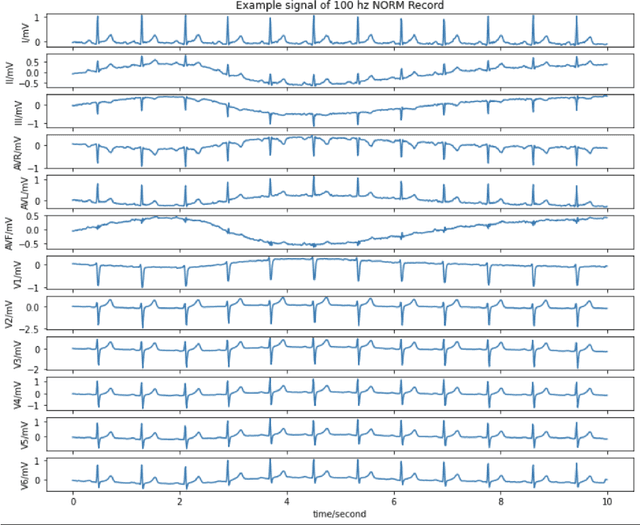

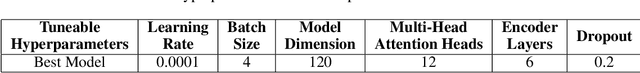

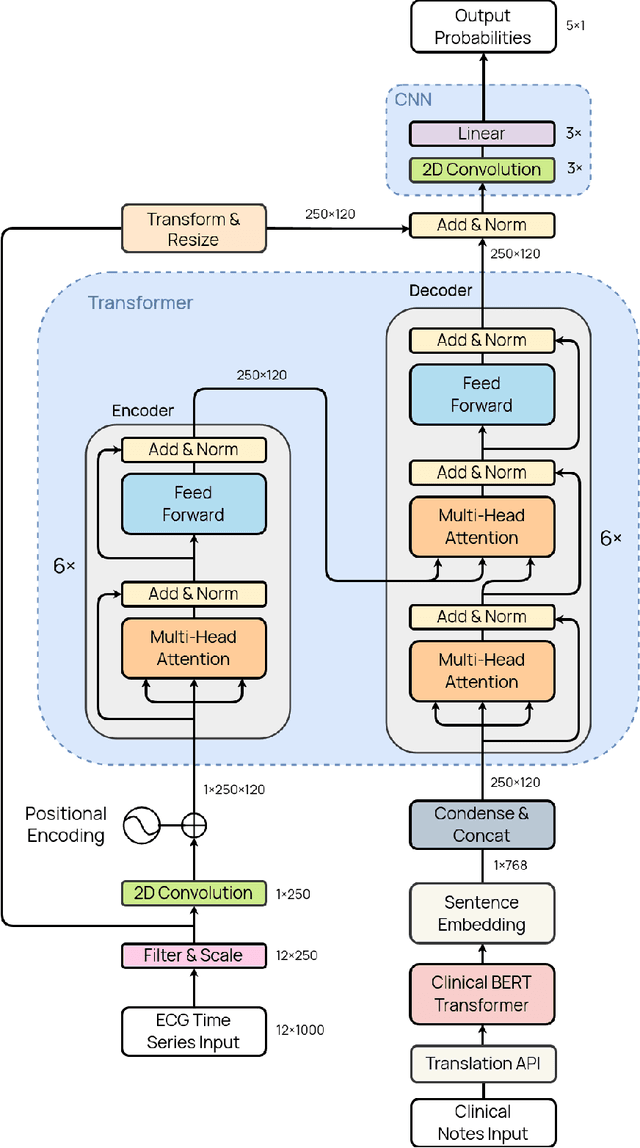

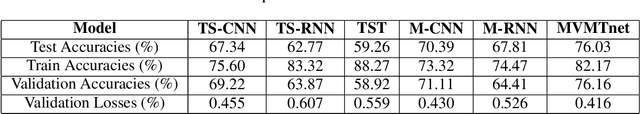

Abstract:Deep learning provides an excellent avenue for optimizing diagnosis and patient monitoring for clinical-based applications, which can critically enhance the response time to the onset of various conditions. For cardiovascular disease, one such condition where the rising number of patients increasingly outweighs the availability of medical resources in different parts of the world, a core challenge is the automated classification of various cardiac abnormalities. Existing deep learning approaches have largely been limited to detecting the existence of an irregularity, as in binary classification, which has been achieved using networks such as CNNs and RNN/LSTMs. The next step is to accurately perform multi-class classification and determine the specific condition(s) from the inherently noisy multi-variate waveform, which is a difficult task that could benefit from (1) a more powerful sequential network, and (2) the integration of clinical notes, which provide valuable semantic and clinical context from human doctors. Recently, Transformers have emerged as the state-of-the-art architecture for forecasting and prediction using time-series data, with their multi-headed attention mechanism, and ability to process whole sequences and learn both long and short-range dependencies. The proposed novel multi-modal Transformer architecture would be able to accurately perform this task while demonstrating the cross-domain effectiveness of Transformers, establishing a method for incorporating multiple data modalities within a Transformer for classification tasks, and laying the groundwork for automating real-time patient condition monitoring in clinical and ER settings.
