Jay Turcot

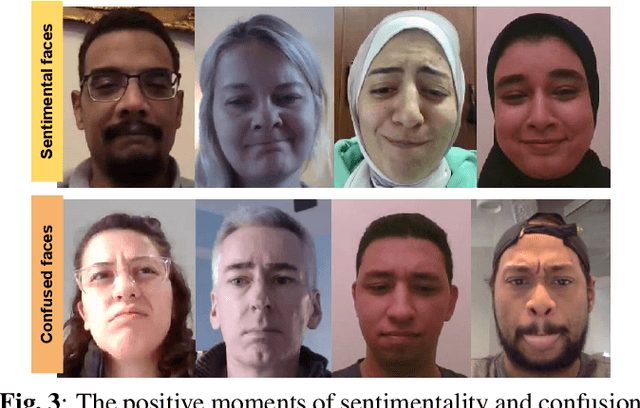

Automatic Detection of Sentimentality from Facial Expressions

Sep 11, 2022

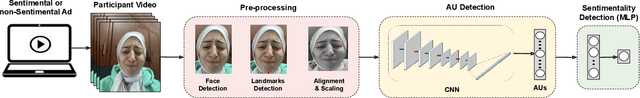

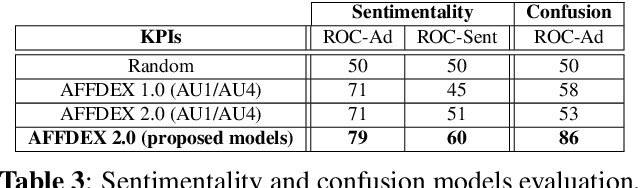

Abstract: Emotion recognition has received considerable attention from the Computer Vision community in the last 20 years. However, most of the research has focused on analyzing the six basic emotions (e.g. joy, anger, surprise), with limited work directed at other affective states. In this paper, we tackle sentimentality (a strong feeling of heartwarming or nostalgia), a new emotional state that has few works in the literature and no guideline defining its facial markers. To this end, we first collect a dataset of 4.9K videos of participants watching sentimental and non-sentimental ads, and then we label the moments evoking sentimentality in the ads. Second, we use the ad-level labels and the activation of facial Action Units (AUs) across different frames to define weak frame-level sentimentality labels. Third, we train a Multilayer Perceptron (MLP) on the AU activations for sentimentality detection. Finally, we define two new ad-level metrics for evaluating our model's performance. Quantitative and qualitative evaluations show promising results for sentimentality detection. To the best of our knowledge, this is the first work to address the problem of sentimentality detection.
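
As a rough illustration of the weak-labeling step described in the abstract, the sketch below combines ad-level annotations with per-frame AU activations. It is not the authors' implementation: the function name `weak_frame_labels`, the rule (a frame is positive if it lies inside an annotated sentimental moment and at least one AU is active), and the 0.5 threshold are all assumptions made for this example.

```python
from typing import List, Sequence, Tuple


def weak_frame_labels(
    au_scores: Sequence[Sequence[float]],
    sentimental_spans: Sequence[Tuple[int, int]],
    threshold: float = 0.5,
) -> List[int]:
    """Return one weak 0/1 label per frame.

    Assumed rule (hypothetical, for illustration only): a frame is labeled 1
    when it falls inside an annotated sentimental span (inclusive frame
    indices) AND at least one AU score reaches the threshold.
    """
    labels = []
    for idx, frame in enumerate(au_scores):
        in_span = any(start <= idx <= end for start, end in sentimental_spans)
        active = any(score >= threshold for score in frame)
        labels.append(1 if in_span and active else 0)
    return labels


# Five frames, two AU scores per frame; frames 1-2 are the annotated moment.
au_scores = [[0.1, 0.0], [0.7, 0.2], [0.9, 0.6], [0.8, 0.1], [0.0, 0.3]]
labels = weak_frame_labels(au_scores, sentimental_spans=[(1, 2)])
print(labels)  # [0, 1, 1, 0, 0]
```

Frame 3 has a strong AU response but lies outside the annotated moment, so it stays negative; labels of this kind could then serve as training targets for a frame-level classifier such as an MLP.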

AFFDEX 2.0: A Real-Time Facial Expression Analysis Toolkit

Feb 24, 2022

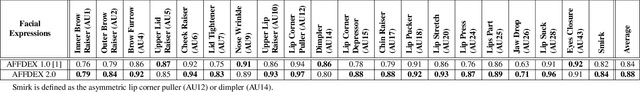

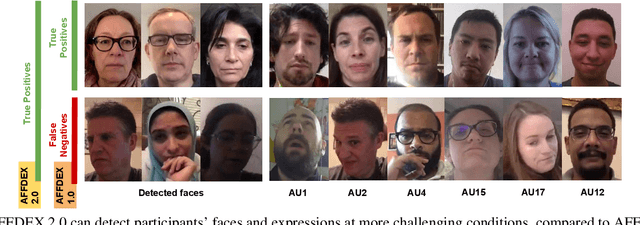

Abstract: In this paper we introduce AFFDEX 2.0, a toolkit for analyzing facial expressions in the wild. It is intended for users aiming to: a) estimate the 3D head pose, b) detect facial Action Units (AUs), c) recognize basic emotions and 2 new emotional states (sentimentality and confusion), and d) detect high-level expressive metrics like blink and attention. AFFDEX 2.0 models are mainly based on Deep Learning and are trained using a large-scale naturalistic dataset consisting of thousands of participants from different demographic groups. AFFDEX 2.0 is an enhanced version of our previous toolkit [1] that is capable of efficiently tracking faces under more challenging conditions, detecting facial expressions more accurately, and recognizing new emotional states (sentimentality and confusion). AFFDEX 2.0 can process multiple faces in real time and runs on both Windows and Linux.

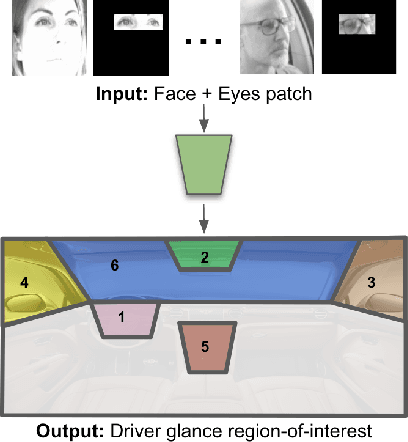

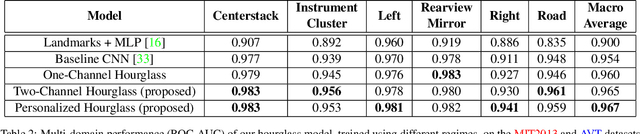

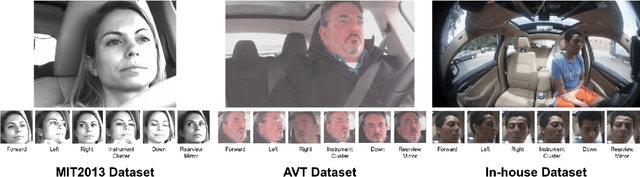

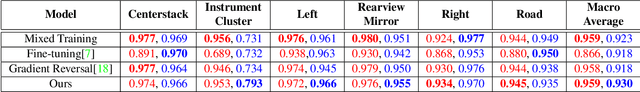

Driver Glance Classification In-the-wild: Towards Generalization Across Domains and Subjects

Dec 05, 2020

Abstract: Distracted drivers are dangerous drivers. Equipping advanced driver assistance systems (ADAS) with the ability to detect driver distraction can help prevent accidents and improve driver safety. In order to detect driver distraction, an ADAS must be able to monitor the driver's visual attention. We propose a model that takes as input a patch of the driver's face along with a crop of the eye region and classifies the driver's glance into 6 coarse regions-of-interest (ROIs) in the vehicle. We demonstrate that an hourglass network, trained with an additional reconstruction loss, allows the model to learn stronger contextual feature representations than a traditional encoder-only classification module. To make the system robust to subject-specific variations in appearance and behavior, we design a personalized hourglass model tuned with an auxiliary input representing the driver's baseline glance behavior. Finally, we present a weakly supervised multi-domain training regimen that enables the hourglass to jointly learn representations from different domains (varying in camera type and angle), utilizing unlabeled samples and thereby reducing annotation cost.
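
To make the idea of training with an additional reconstruction loss concrete, the sketch below combines a classification loss over the 6 ROIs with a reconstruction term. It is only a sketch under stated assumptions: the abstract does not give the exact losses or their weighting, so the cross-entropy and mean-squared-error terms, the function name `hourglass_loss`, and the `recon_weight` parameter are hypothetical choices.

```python
import math
from typing import Sequence

NUM_ROIS = 6  # the abstract states six coarse regions-of-interest


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Softmax cross-entropy for one sample, computed stably."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


def mse(reconstruction: Sequence[float], original: Sequence[float]) -> float:
    """Mean squared error between decoder output and the input patch."""
    if len(reconstruction) != len(original):
        raise ValueError("reconstruction and original must have equal length")
    return sum((r - o) ** 2 for r, o in zip(reconstruction, original)) / len(original)


def hourglass_loss(
    logits: Sequence[float],
    target: int,
    reconstruction: Sequence[float],
    original: Sequence[float],
    recon_weight: float = 0.5,  # assumed weighting, not taken from the paper
) -> float:
    """Classification loss plus a weighted reconstruction penalty."""
    return cross_entropy(logits, target) + recon_weight * mse(reconstruction, original)


# Uniform logits and a perfect reconstruction: the loss reduces to log(6).
loss = hourglass_loss([0.0] * NUM_ROIS, 2, [0.2, 0.4], [0.2, 0.4])
print(round(loss, 4))  # 1.7918
```

An encoder-only classifier would optimize just the first term; the second term is what pushes the encoder-decoder to retain information about the input patch.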
