Javier Ribera

Estimating Phenotypic Traits From UAV Based RGB Imagery

Jul 02, 2018

Abstract: In many agricultural applications, one wants to characterize physical properties of plants and use the measurements to predict, for example, biomass and environmental influence. This process is known as phenotyping. Traditional collection of phenotypic information is labor-intensive and time-consuming. Use of imagery is becoming popular for phenotyping. In this paper, we present methods to estimate traits of sorghum plants from RGB cameras on board an unmanned aerial vehicle (UAV). The position and orientation of the imagery, together with the coordinates of sparse points along the area of interest, are derived through a new triangulation method. A rectified orthophoto mosaic is then generated from the imagery. The number of leaves is estimated, and a model-based method to analyze the leaf morphology for leaf segmentation is proposed. We present a statistical model to find the location of each individual sorghum plant.

Weighted Hausdorff Distance: A Loss Function For Object Localization

Jun 20, 2018

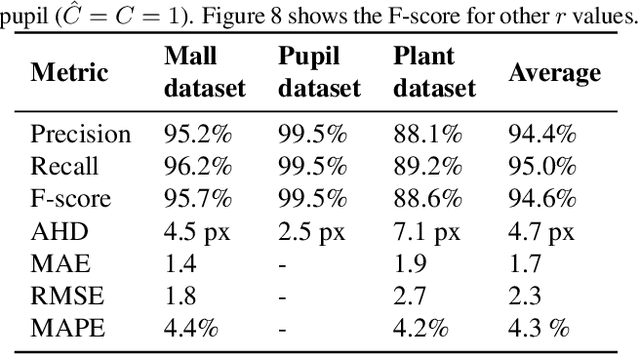

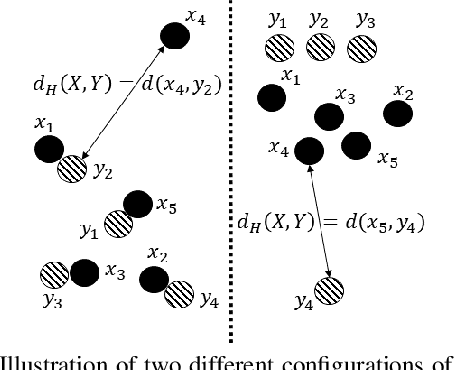

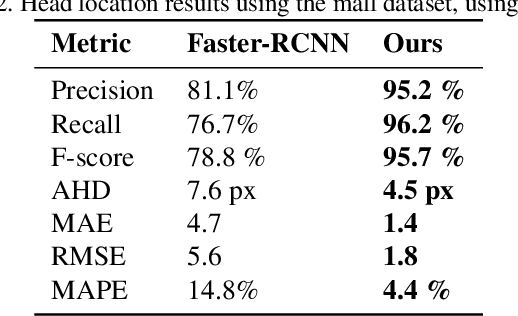

Abstract: Recent advances in Convolutional Neural Networks (CNN) have achieved remarkable results in localizing objects in images. In these networks, the training procedure usually requires providing bounding boxes or the maximum number of expected objects. In this paper, we address the task of estimating object locations without annotated bounding boxes, which are typically hand-drawn and time-consuming to label. We propose a loss function that can be used in any Fully Convolutional Network (FCN) to estimate object locations. This loss function is a modification of the Average Hausdorff Distance between two unordered sets of points. The proposed method does not require one to "guess" the maximum number of objects in the image, and has no notion of bounding boxes, region proposals, or sliding windows. We evaluate our method with three datasets designed to locate people's heads, pupil centers and plant centers. We report an average precision and recall of 94% for the three datasets, and an average location error of 6 pixels in 256x256 images.
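To make the starting point of the loss concrete, the following is a minimal sketch of the plain (unweighted) Average Hausdorff Distance between two unordered point sets. It is not the paper's weighted loss, which modifies this quantity for use in an FCN. The function name `averaged_hausdorff_distance`, the use of NumPy, and the choice of Euclidean distance are assumptions made for illustration.

```python
import numpy as np


def averaged_hausdorff_distance(x, y):
    """Average Hausdorff Distance between two unordered point sets.

    Illustrative sketch only: `x` and `y` are arrays of shape (n, d) and
    (m, d). Euclidean distance is assumed as the point-to-point metric.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.ndim != 2 or y.ndim != 2 or x.shape[1] != y.shape[1]:
        raise ValueError("x and y must be 2-D arrays with the same number of columns")
    if len(x) == 0 or len(y) == 0:
        raise ValueError("both point sets must be non-empty")

    # Pairwise Euclidean distances, shape (n, m).
    diffs = x[:, None, :] - y[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))

    # Mean distance from each point in x to its nearest point in y,
    # plus the mean distance from each point in y to its nearest point in x.
    x_to_y = dists.min(axis=1).mean()
    y_to_x = dists.min(axis=0).mean()
    return x_to_y + y_to_x


if __name__ == "__main__":
    predicted = [[0.0, 0.0], [0.0, 10.0]]
    ground_truth = [[0.0, 0.0]]
    print(averaged_hausdorff_distance(predicted, ground_truth))
```

Because each term averages nearest-neighbor distances over one set, the two sets may contain different numbers of points, which is consistent with the abstract's remark that the number of objects does not need to be guessed in advance.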
