James R. Kubricht

Symbolic Semantic Segmentation and Interpretation of COVID-19 Lung Infections in Chest CT volumes based on Emergent Languages

Aug 22, 2020

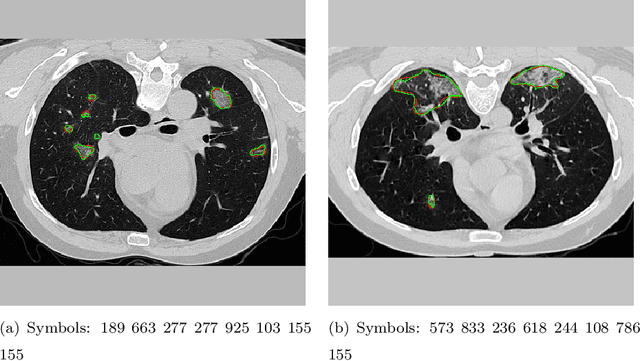

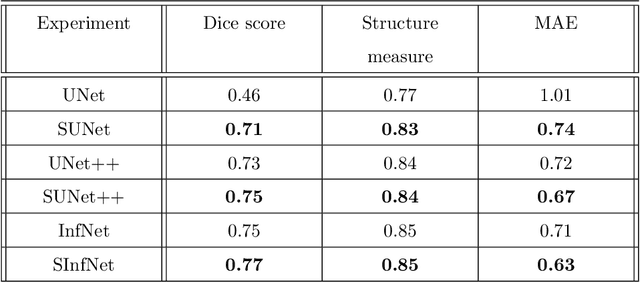

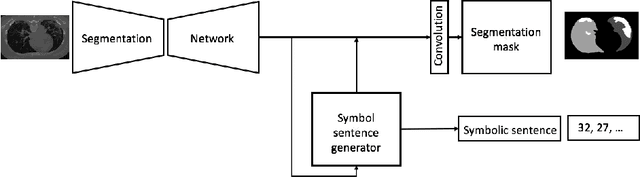

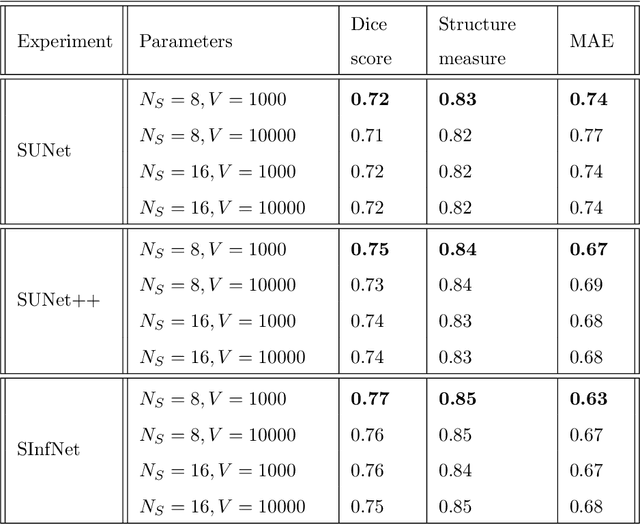

Abstract: The coronavirus disease (COVID-19) has resulted in a pandemic crippling a breadth of services critical to daily life. Segmentation of lung infections in computerized tomography (CT) slices could be used to improve diagnosis and understanding of COVID-19 in patients. Deep learning systems lack interpretability because of their black box nature. Inspired by human communication of complex ideas through language, we propose a symbolic framework based on emergent languages for the segmentation of COVID-19 infections in CT scans of lungs. We model the cooperation between two artificial agents, a Sender and a Receiver. These agents cooperate using an emergent symbolic language to solve the task of semantic segmentation. Unlike Generative Adversarial Networks (GANs), our game theoretic approach models cooperation between agents. The Sender retrieves information from one of the higher layers of the deep network and generates a symbolic sentence sampled from a categorical distribution of vocabularies. The Receiver ingests the stream of symbols and cogenerates the segmentation mask. A private emergent language is developed that forms the communication channel used to describe the task of segmentation of COVID infections. We augment existing state-of-the-art semantic segmentation architectures with our symbolic generator to form symbolic segmentation models. Our symbolic segmentation framework achieves state-of-the-art performance for segmentation of lung infections caused by COVID-19 in CT. Our results show direct interpretation of symbolic sentences to discriminate between normal and infected regions, infection morphology and image characteristics.
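
The Sender step described in the abstract above, generating a symbolic sentence by sampling from categorical distributions over a vocabulary, can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the sentence length of 3, the vocabulary size of 4, and the hand-written scores are all hypothetical, and a real Sender would compute the scores from a network layer.

```python
import math
import random


def softmax(logits):
    """Convert raw scores into a probability distribution."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_sentence(logits_per_position, rng):
    """Sample one symbol index per sentence position.

    logits_per_position holds one list of scores per position, each of
    length vocab_size (a hypothetical stand-in for the Sender's output).
    """
    sentence = []
    for logits in logits_per_position:
        probs = softmax(logits)
        symbol = rng.choices(range(len(probs)), weights=probs, k=1)[0]
        sentence.append(symbol)
    return sentence


rng = random.Random(0)
# Hypothetical scores: sentence length 3, vocabulary size 4.
scores = [
    [2.0, 0.1, 0.1, 0.1],
    [0.0, 0.0, 3.0, 0.0],
    [1.0, 1.0, 1.0, 1.0],
]
sentence = sample_sentence(scores, rng)
print(sentence)
```

Each entry of `sentence` is an integer symbol identifier; a Receiver would consume this discrete sequence rather than a continuous feature vector.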

Emergent symbolic language based deep medical image classification

Aug 22, 2020

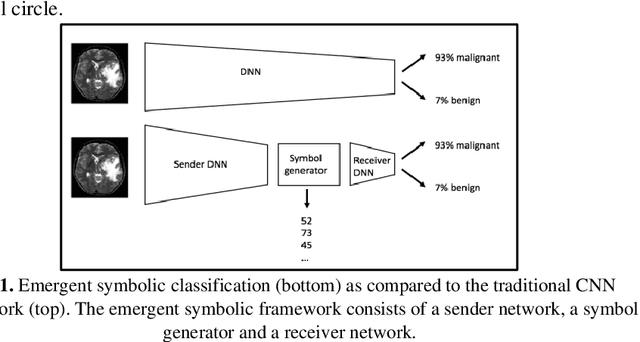

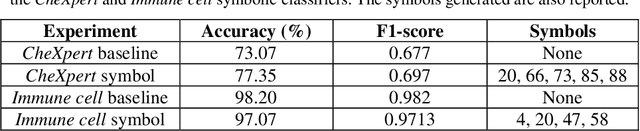

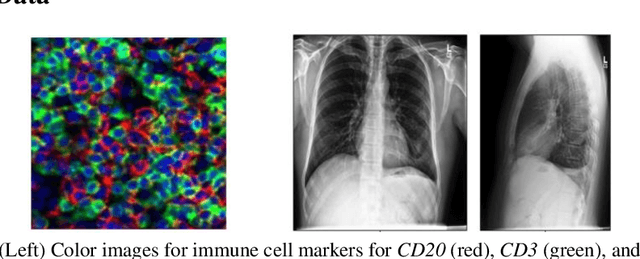

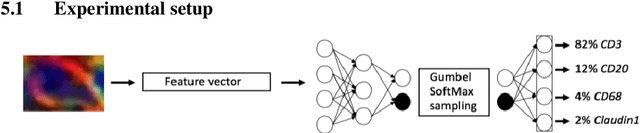

Abstract: Modern deep learning systems for medical image classification have demonstrated exceptional capabilities for distinguishing between image-based medical categories. However, they are severely hindered by their inability to explain the reasoning behind their decision making. This is partly due to the uninterpretable continuous latent representations of neural networks. Emergent languages (EL) have recently been shown to enhance the capabilities of neural networks by equipping them with symbolic representations in the framework of referential games. Symbolic representations are one of the cornerstones of highly explainable good old fashioned AI (GOFAI) systems. In this work, we demonstrate, for the first time, the emergence of deep symbolic representations of emergent language in the framework of image classification. We show that EL-based classification models can perform as well as, if not better than, state-of-the-art deep learning models. In addition, they provide a symbolic representation that opens up an entire field of possibilities of interpretable GOFAI methods involving symbol manipulation. We demonstrate the EL classification framework on immune cell marker based cell classification and chest X-ray classification using the CheXpert dataset. Code is available online at https://github.com/AriChow/EL.

ESCELL: Emergent Symbolic Cellular Language

Jul 18, 2020

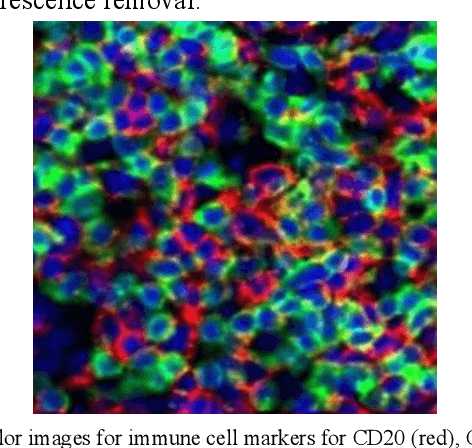

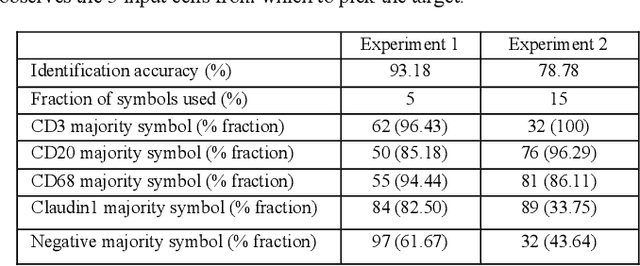

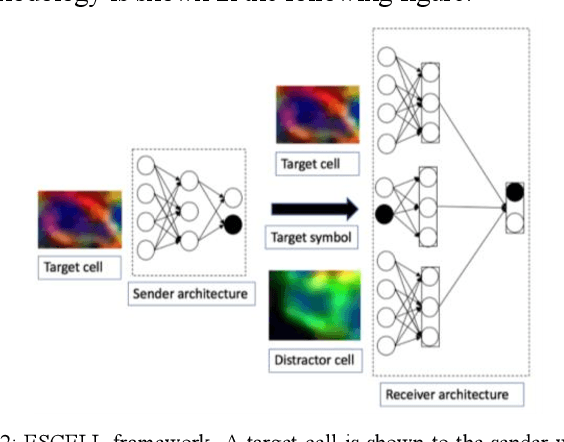

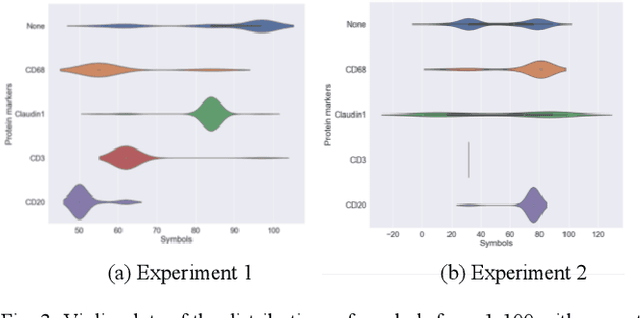

Abstract: We present ESCELL, a method for developing an emergent symbolic language of communication between multiple agents reasoning about cells. We show how agents are able to cooperate and communicate successfully in the form of symbols similar to human language to accomplish a task in the form of a referential game (Lewis' signaling game). In one form of the game, a sender and a receiver observe a set of cells from 5 different cell phenotypes. The sender is told one cell is a target and is allowed to send one symbol to the receiver from a vocabulary of fixed, arbitrary size. The receiver relies on the information in the symbol to identify the target cell. We train the sender and receiver networks to develop an innate emergent language between themselves to accomplish this task. We observe that the networks are able to successfully identify cells from 5 different phenotypes with an accuracy of 93.2%. We also introduce a new form of the signaling game where the sender is shown one image instead of all the images that the receiver sees. The networks successfully develop an emergent language and reach an identification accuracy of 77.8%.

* IEEE International Symposium on Biomedical Imaging (IEEE ISBI 2020)
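
The referential game in the ESCELL abstract can be illustrated with a toy simulation. The sketch below is only an assumption-laden stand-in: it replaces the paper's sender and receiver networks with lookup tables trained by simple Roth-Erev-style reinforcement, and it reduces each cell to its phenotype label instead of an image. All function names and parameter values are hypothetical, and the accuracy it reaches is unrelated to the figures reported in the abstract.

```python
import random


def play_round(sender_w, receiver_w, n_types, rng):
    """Sender sees a target type, emits one symbol, receiver guesses the type."""
    target = rng.randrange(n_types)
    vocab = range(len(sender_w[target]))
    symbol = rng.choices(vocab, weights=sender_w[target], k=1)[0]
    guess = rng.choices(range(n_types), weights=receiver_w[symbol], k=1)[0]
    return target, symbol, guess


def train(n_types=5, vocab_size=5, rounds=20000, seed=0):
    """Reinforce the choices made by both agents whenever the guess is correct."""
    rng = random.Random(seed)
    # Uniform initial propensities: no shared language exists yet.
    sender_w = [[1.0] * vocab_size for _ in range(n_types)]
    receiver_w = [[1.0] * n_types for _ in range(vocab_size)]
    for _ in range(rounds):
        target, symbol, guess = play_round(sender_w, receiver_w, n_types, rng)
        if guess == target:
            sender_w[target][symbol] += 1.0
            receiver_w[symbol][guess] += 1.0
    return sender_w, receiver_w, rng


def accuracy(sender_w, receiver_w, n_types, rng, trials=2000):
    """Fraction of evaluation rounds in which the receiver finds the target."""
    hits = 0
    for _ in range(trials):
        target, _, guess = play_round(sender_w, receiver_w, n_types, rng)
        hits += int(guess == target)
    return hits / trials


sender_w, receiver_w, rng = train()
acc = accuracy(sender_w, receiver_w, 5, rng)
print(f"identification accuracy: {acc:.3f}")
```

Because reward is given only for successful identification, any mapping from symbols to phenotypes that emerges is a private convention between the two agents, which is the sense in which the language is emergent.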
