Jaegeun Park

Deep Learning-based Synthetic High-Resolution In-Depth Imaging Using an Attachable Dual-element Endoscopic Ultrasound Probe

Sep 13, 2023

Abstract: Endoscopic ultrasound (EUS) imaging has a trade-off between resolution and penetration depth. Given the in-vivo characteristics of human organs, clinicians need hardware with appropriate specifications for precise diagnosis. Recently, super-resolution (SR) ultrasound imaging studies, including those that treat SR as a deep learning task, have been reported for enhancing ultrasound images. However, most of those studies did not consider the nature of ultrasound imaging; rather, they applied conventional SR techniques based on downsampling of ultrasound images. In this study, we propose a novel deep learning-based high-resolution in-depth imaging probe capable of offering low- and high-frequency ultrasound image pairs. We developed an attachable dual-element EUS probe with customized low- and high-frequency ultrasound transducers under small hardware constraints. We also designed a special geared structure so that both transducers image the same plane. The proposed system was evaluated with a wire phantom and a tissue-mimicking phantom. After the evaluation, 442 ultrasound image pairs were acquired from the tissue-mimicking phantom. We then applied several deep learning models to obtain synthetic high-resolution in-depth images, demonstrating the feasibility of our approach for addressing unmet clinical needs. Furthermore, we analyzed the results quantitatively and qualitatively to find a suitable deep learning model for our task. The results demonstrate that the proposed dual-element EUS probe, combined with an image-to-image translation network, has the potential to provide synthetic high-frequency ultrasound images deep inside tissues.

Cyclops: Open Platform for Scale Truck Platooning

Mar 03, 2022

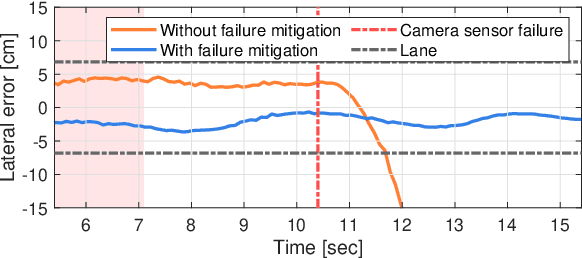

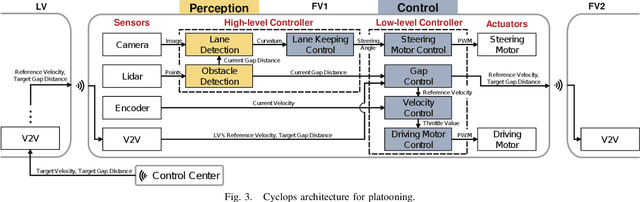

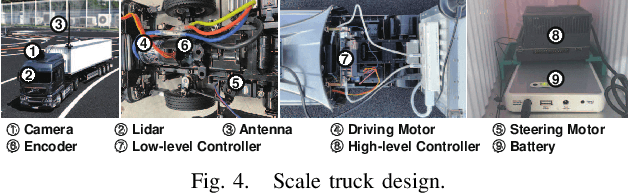

Abstract: Cyclops, introduced in this paper, is an open research platform for anyone who wants to validate novel ideas and approaches in the area of self-driving heavy-duty vehicle platooning. The platform consists of multiple 1/14 scale semi-trailer trucks, a scale proving ground, and associated computing, communication, and control modules that enable self-driving on the proving ground. The perception system of each vehicle is composed of a lidar-based object tracking system and a lane detection/control system. The former maintains the gap to the leading vehicle, and the latter keeps the vehicle within the lane through steering control. The lane detection system is optimized for truck platooning, where the field of view of the front-facing camera is severely limited by the small gap to the leading vehicle. The platform is particularly well suited to validating mitigation strategies for safety-critical situations. Indeed, a simplex structure is adopted in the embedded module for testing various fail-safe operations. We illustrate a scenario in which the camera sensor in the perception system fails, but the vehicle continues operating at reduced capacity until it comes to a graceful stop. Details of Cyclops, including 3D CAD designs and algorithm source code, are released for those who want to build similar testbeds.
