Jacques Palicot

Wireless Connectivity in the Sub-THz Spectrum: A Path to 6G

Jan 17, 2022

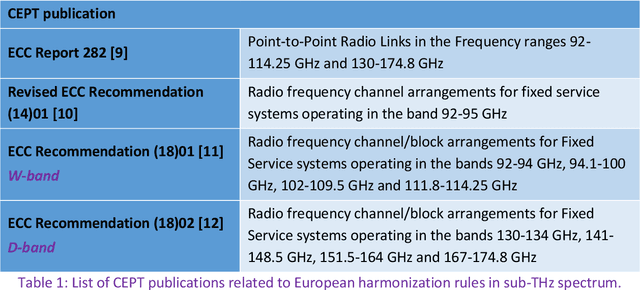

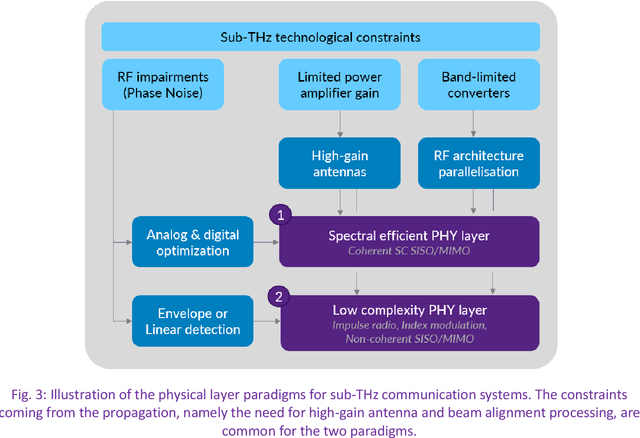

Abstract: Wireless communication in millimetre-wave bands, namely above 20 GHz and up to 300 GHz, is foreseen as a key enabling technology for the next generation of wireless systems. The huge available bandwidth is expected to enable high-data-rate wireless communications and hence to fulfil the requirements of future wireless networks. In this paper, we discuss and illustrate new paradigms for the sub-THz physical layer, which aim either at maximizing the spectral efficiency, at minimizing the device complexity, or at finding a good tradeoff between the two. The solutions offered by appropriate modulation schemes and multi-antenna systems are assessed for various potential scenarios.
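To make the bandwidth argument concrete: the Shannon capacity of an AWGN channel, C = B log2(1 + SNR), grows linearly with the bandwidth B when the SNR is held fixed. The short Python sketch below compares two illustrative bandwidths at an assumed 10 dB SNR. Both bandwidths and the SNR are hypothetical values, not figures from the paper, and holding the SNR fixed ignores that the noise power N0·B also grows with bandwidth.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Upper bound on the error-free data rate (bit/s) of an AWGN channel."""
    snr_linear = 10 ** (snr_db / 10)  # convert dB to a linear power ratio
    return bandwidth_hz * math.log2(1 + snr_linear)


# Hypothetical comparison at the same SNR: a narrow channel vs a wide sub-THz-like channel.
for label, bw in [("100 MHz", 100e6), ("10 GHz", 10e9)]:
    capacity = shannon_capacity_bps(bw, snr_db=10.0)
    print(f"{label}: {capacity / 1e9:.2f} Gbit/s")
```

Under these assumptions the 10 GHz channel offers 100 times the capacity of the 100 MHz one. In a real link budget with fixed transmit power, the SNR drops as B grows, so the actual gain is smaller, which is one reason the spectral-efficiency versus complexity tradeoff matters.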

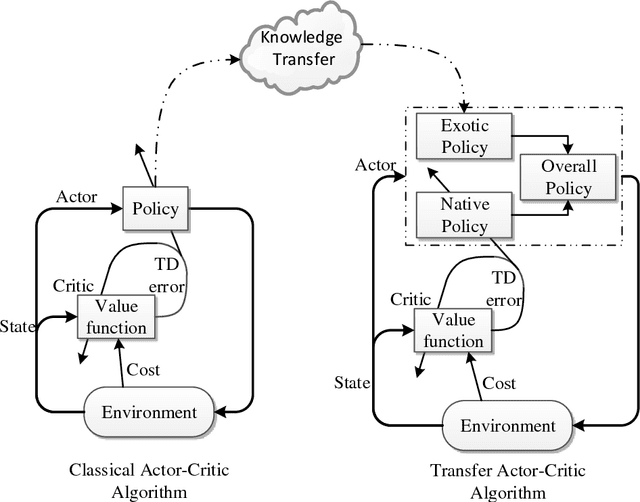

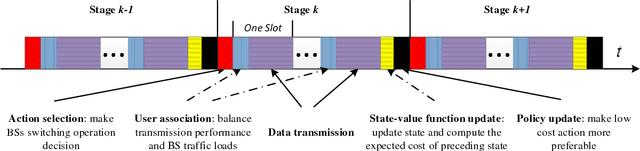

TACT: A Transfer Actor-Critic Learning Framework for Energy Saving in Cellular Radio Access Networks

Apr 04, 2014

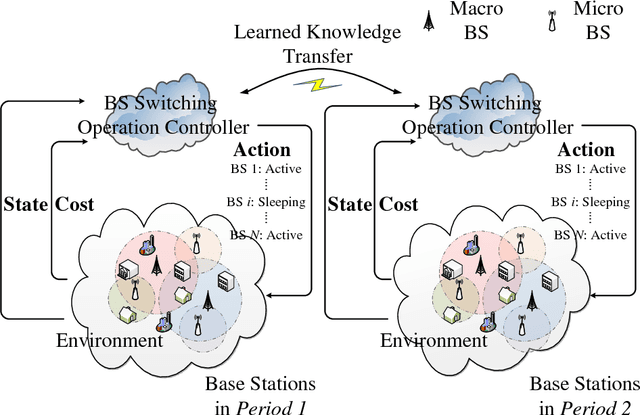

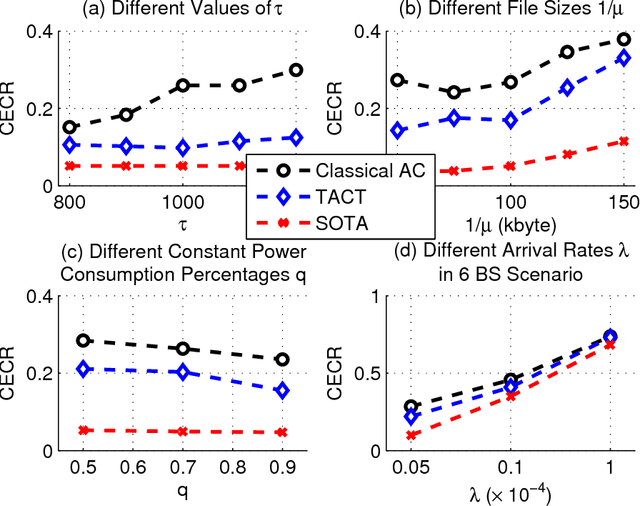

Abstract: Recent works have validated the possibility of improving energy efficiency in radio access networks (RANs) by dynamically turning some base stations (BSs) on or off. In this paper, we extend the research on BS switching operations, which should match traffic load variations. Instead of relying on dynamic traffic loads, which remain challenging to forecast precisely, we first formulate the traffic variations as a Markov decision process. Then, to minimize the energy consumption of RANs in a foresighted manner, we design a BS switching scheme based on a reinforcement learning framework. Furthermore, to avoid the curse of dimensionality inherent in reinforcement learning, we propose a transfer actor-critic algorithm (TACT), which utilizes learning expertise transferred from historical periods or neighboring regions, and prove its convergence. Finally, we evaluate the proposed scheme through extensive simulations under various practical configurations and show that TACT provides a performance jumpstart and demonstrates the feasibility of significant energy efficiency improvements at the expense of tolerable delay performance.
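The transfer idea can be illustrated with a minimal sketch, which is not the paper's algorithm: a toy two-state traffic MDP (low or high load), a tabular actor-critic that chooses how many BSs to keep on, and transfer modelled as blending the learner's native policy with an exogenous (transferred) policy under a decaying transfer rate. The state space, rewards, blending rule, decay schedule, and all parameter values are hypothetical assumptions made for illustration.

```python
import math
import random

# Hypothetical toy MDP: traffic state 0 = low load, 1 = high load;
# action = number of active base stations (1 or 2).
STATES = (0, 1)
ACTIONS = (1, 2)
P_STAY = 0.8  # probability that the traffic state does not change


def reward(state: int, action: int) -> float:
    energy_cost = 1.0 * action  # each active BS consumes one unit of energy
    delay_penalty = 3.0 if (state == 1 and action == 1) else 0.0  # one BS cannot serve high load
    return -(energy_cost + delay_penalty)


def next_state(state: int, rng: random.Random) -> int:
    # Traffic evolves independently of the switching decision.
    return state if rng.random() < P_STAY else 1 - state


def softmax(prefs: dict, state: int) -> list:
    m = max(prefs[(state, a)] for a in ACTIONS)
    exps = [math.exp(prefs[(state, a)] - m) for a in ACTIONS]
    total = sum(exps)
    return [e / total for e in exps]


def transfer_actor_critic(exo_policy: dict, steps: int = 5000, alpha: float = 0.1,
                          beta: float = 0.05, gamma: float = 0.9, seed: int = 0) -> dict:
    rng = random.Random(seed)
    value = {s: 0.0 for s in STATES}                        # critic: state values V(s)
    prefs = {(s, a): 0.0 for s in STATES for a in ACTIONS}  # actor: native action preferences
    state = 0
    for t in range(steps):
        zeta = 0.5 * 0.999 ** t  # assumed transfer rate; decays so the native policy takes over
        native = softmax(prefs, state)
        overall = [(1 - zeta) * n + zeta * e for n, e in zip(native, exo_policy[state])]
        i = rng.choices(range(len(ACTIONS)), weights=overall)[0]
        r = reward(state, ACTIONS[i])
        s_next = next_state(state, rng)
        td_error = r + gamma * value[s_next] - value[state]  # critic: one-step TD error
        value[state] += alpha * td_error
        for j, a in enumerate(ACTIONS):  # actor: softmax policy-gradient step
            prefs[(state, a)] += beta * td_error * ((1.0 if j == i else 0.0) - native[j])
        state = s_next
    return {s: softmax(prefs, s) for s in STATES}


policy = transfer_actor_critic(exo_policy={0: [0.9, 0.1], 1: [0.2, 0.8]})
print(policy)  # per traffic state: [P(1 BS on), P(2 BSs on)]
```

Here the exogenous policy stands in for expertise learnt in a historical period or neighboring region. The decaying blend weight lets the learner lean on it early, which is where a jumpstart would come from, and rely on its own native policy later, which should end up keeping one BS on at low load and two at high load.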

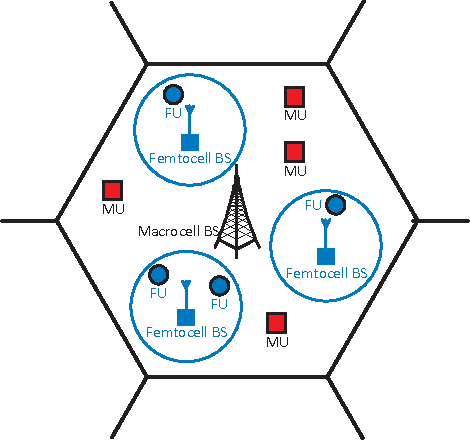

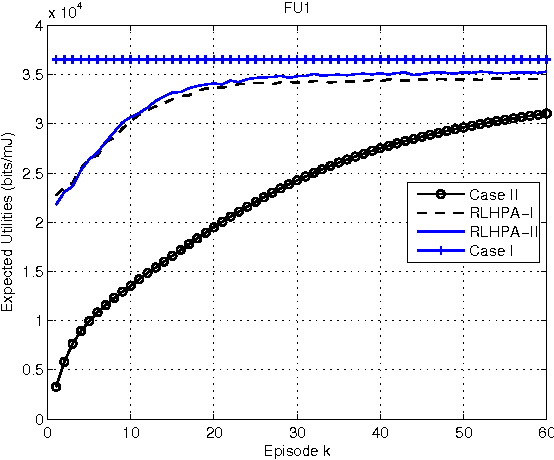

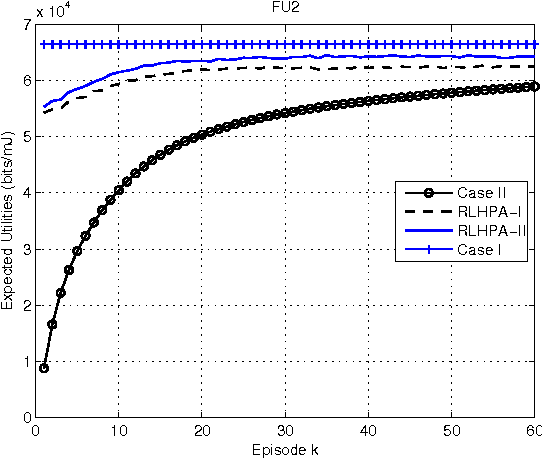

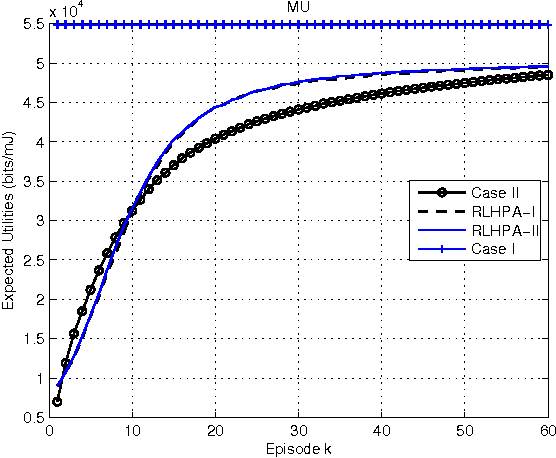

On Improving Energy Efficiency within Green Femtocell Networks: A Hierarchical Reinforcement Learning Approach

Mar 13, 2013

Abstract: One efficient solution for improving coverage and increasing capacity in cellular networks is the deployment of femtocells. As cellular networks become more complex, the energy consumption of the whole network infrastructure is becoming important in terms of both operational costs and environmental impact. This paper investigates the energy efficiency of two-tier femtocell networks by combining game theory and stochastic learning. With a Stackelberg game formulation, a hierarchical reinforcement learning framework is applied to study the joint expected utility maximization of macrocells and femtocells subject to minimum signal-to-interference-plus-noise ratio (SINR) requirements. In the learning procedure, the macrocells act as leaders and the femtocells as followers. At each time step, the leaders commit to dynamic strategies based on the best responses of the followers, while the followers compete against each other with no information other than the leaders' transmission parameters. We propose two reinforcement-learning-based intelligent algorithms to schedule each cell's stochastic power levels. Numerical experiments are presented to validate the investigation, and the results show that the two learning algorithms substantially improve the energy efficiency of femtocell networks.
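The leader-follower structure can be illustrated with a toy Stackelberg power game solved by backward induction, swapped in here for the paper's learning algorithms. For each candidate macrocell power, the femtocells play best responses to the current power profile until none changes, and the macrocell keeps the power that maximizes its own energy efficiency (spectral efficiency per watt, including a circuit-power term). All channel gains, power levels, the SINR threshold, and the utility definition are hypothetical assumptions.

```python
import math

# Hypothetical two-tier setup: index 0 = macrocell (leader), 1 and 2 = femtocells (followers).
POWERS = (0.1, 0.5, 1.0)  # allowed transmit power levels in watts
NOISE = 1e-3              # receiver noise power in watts
P_CIRCUIT = 0.1           # fixed circuit power per cell in watts
SINR_MIN = 1.0            # minimum SINR requirement (linear scale)
# GAIN[i][j]: channel gain from transmitter j to receiver i.
GAIN = [[1.0, 0.05, 0.05],
        [0.02, 1.0, 0.1],
        [0.02, 0.1, 1.0]]


def sinr(i: int, p: list) -> float:
    interference = sum(GAIN[i][j] * p[j] for j in range(len(p)) if j != i)
    return GAIN[i][i] * p[i] / (NOISE + interference)


def energy_efficiency(i: int, p: list) -> float:
    s = sinr(i, p)
    if s < SINR_MIN:
        return 0.0  # SINR constraint violated: no useful utility
    return math.log2(1 + s) / (p[i] + P_CIRCUIT)  # bit/s/Hz per watt


def followers_best_response(p_leader: float, max_rounds: int = 50) -> list:
    p = [p_leader, POWERS[0], POWERS[0]]
    for _ in range(max_rounds):
        changed = False
        for i in (1, 2):  # each femtocell best-responds to the current power profile
            best = max(POWERS, key=lambda q: energy_efficiency(i, p[:i] + [q] + p[i + 1:]))
            if best != p[i]:
                p[i], changed = best, True
        if not changed:  # no follower wants to deviate
            break
    return p


def stackelberg() -> tuple:
    # The leader anticipates the followers' reaction to each candidate power.
    candidates = [(energy_efficiency(0, p), p) for p in map(followers_best_response, POWERS)]
    return max(candidates, key=lambda c: c[0])


u, powers = stackelberg()
print(powers)  # [0.1, 0.1, 0.1]
```

With these numbers every cell settles on the lowest power level, because the small circuit power makes rate per watt favor low transmit power; in this model, raising `P_CIRCUIT` tends to make higher transmit powers more attractive.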