Jacob Penney

SkillScope: A Tool to Predict Fine-Grained Skills Needed to Solve Issues on GitHub

Jan 27, 2025

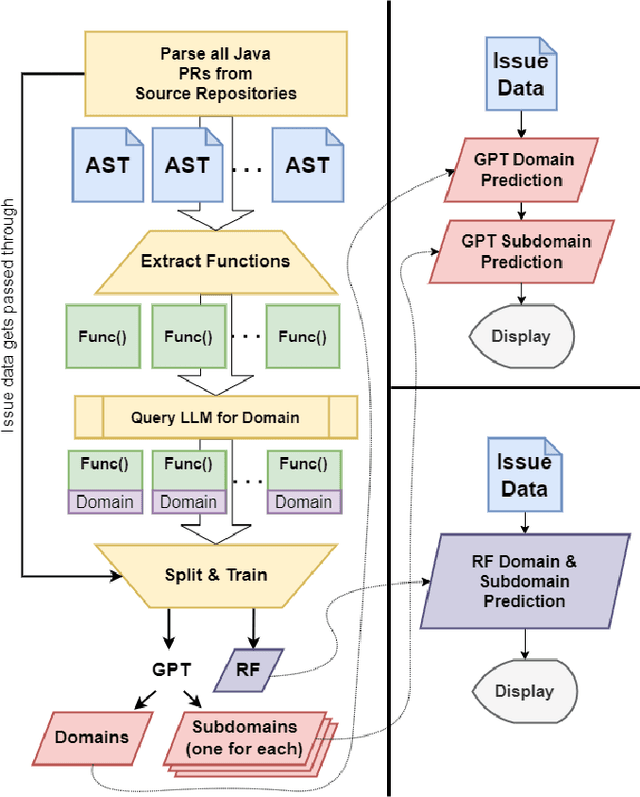

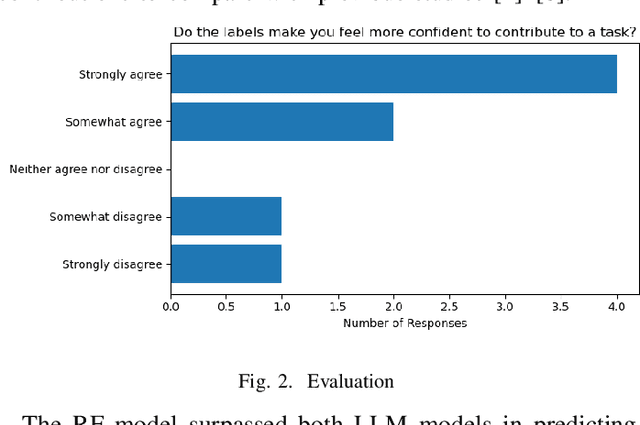

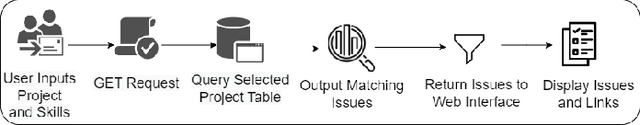

Abstract:New contributors often struggle to find tasks that they can tackle when onboarding onto a new Open Source Software (OSS) project. One reason for this difficulty is that issue trackers lack explanations about the knowledge or skills needed to complete a given task successfully. These explanations can be complex and time-consuming to produce. Past research has partially addressed this problem by labeling issues with issue types, issue difficulty level, and issue skills. However, current approaches are limited to a small set of labels and lack in-depth details about their semantics, which may not sufficiently help contributors identify suitable issues. To surmount this limitation, this paper explores large language models (LLMs) and Random Forest (RF) to predict the multilevel skills required to solve the open issues. We introduce a novel tool, SkillScope, which retrieves current issues from Java projects hosted on GitHub and predicts the multilevel programming skills required to resolve these issues. In a case study, we demonstrate that SkillScope could predict 217 multilevel skills for tasks with 91% precision, 88% recall, and 89% F-measure on average. Practitioners can use this tool to better delegate or choose tasks to solve in OSS projects.

Anticipating User Needs: Insights from Design Fiction on Conversational Agents for Computational Thinking

Nov 12, 2023Abstract:Computational thinking, and by extension, computer programming, is notoriously challenging to learn. Conversational agents and generative artificial intelligence (genAI) have the potential to facilitate this learning process by offering personalized guidance, interactive learning experiences, and code generation. However, current genAI-based chatbots focus on professional developers and may not adequately consider educational needs. Involving educators in conceiving educational tools is critical for ensuring usefulness and usability. We enlisted \numParticipants{} instructors to engage in design fiction sessions in which we elicited abilities such a conversational agent supported by genAI should display. Participants envisioned a conversational agent that guides students stepwise through exercises, tuning its method of guidance with an awareness of the educational background, skills and deficits, and learning preferences. The insights obtained in this paper can guide future implementations of tutoring conversational agents oriented toward teaching computational thinking and computer programming.

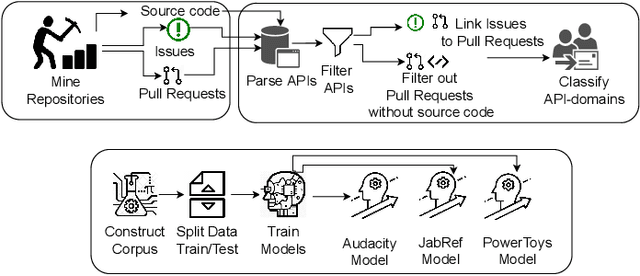

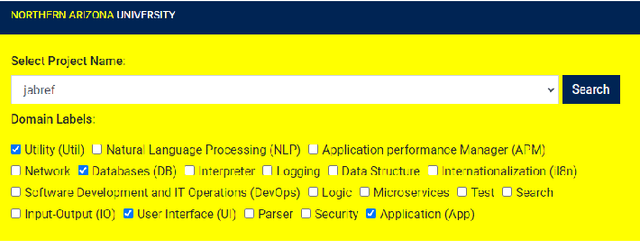

Tag that issue: Applying API-domain labels in issue tracking systems

Apr 06, 2023Abstract:Labeling issues with the skills required to complete them can help contributors to choose tasks in Open Source Software projects. However, manually labeling issues is time-consuming and error-prone, and current automated approaches are mostly limited to classifying issues as bugs/non-bugs. We investigate the feasibility and relevance of automatically labeling issues with what we call "API-domains," which are high-level categories of APIs. Therefore, we posit that the APIs used in the source code affected by an issue can be a proxy for the type of skills (e.g., DB, security, UI) needed to work on the issue. We ran a user study (n=74) to assess API-domain labels' relevancy to potential contributors, leveraged the issues' descriptions and the project history to build prediction models, and validated the predictions with contributors (n=20) of the projects. Our results show that (i) newcomers to the project consider API-domain labels useful in choosing tasks, (ii) labels can be predicted with a precision of 84% and a recall of 78.6% on average, (iii) the results of the predictions reached up to 71.3% in precision and 52.5% in recall when training with a project and testing in another (transfer learning), and (iv) project contributors consider most of the predictions helpful in identifying needed skills. These findings suggest our approach can be applied in practice to automatically label issues, assisting developers in finding tasks that better match their skills.

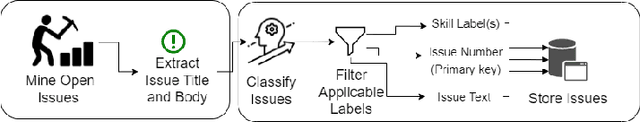

GiveMeLabeledIssues: An Open Source Issue Recommendation System

Mar 23, 2023

Abstract:Developers often struggle to navigate an Open Source Software (OSS) project's issue-tracking system and find a suitable task. Proper issue labeling can aid task selection, but current tools are limited to classifying the issues according to their type (e.g., bug, question, good first issue, feature, etc.). In contrast, this paper presents a tool (GiveMeLabeledIssues) that mines project repositories and labels issues based on the skills required to solve them. We leverage the domain of the APIs involved in the solution (e.g., User Interface (UI), Test, Databases (DB), etc.) as a proxy for the required skills. GiveMeLabeledIssues facilitates matching developers' skills to tasks, reducing the burden on project maintainers. The tool obtained a precision of 83.9% when predicting the API domains involved in the issues. The replication package contains instructions on executing the tool and including new projects. A demo video is available at https://www.youtube.com/watch?v=ic2quUue7i8

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge