Jack Cao

Learning Representations by Humans, for Humans

May 29, 2019

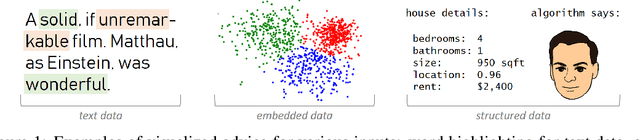

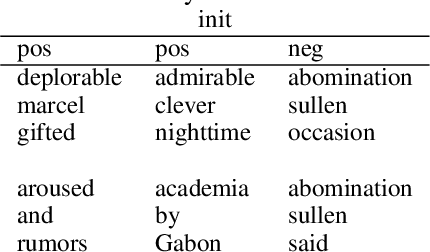

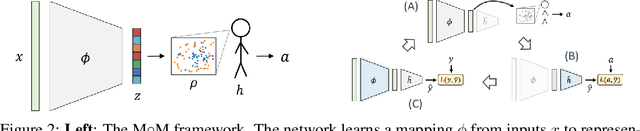

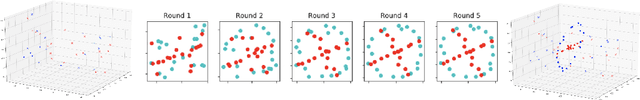

Abstract: We propose a new, complementary approach to interpretability, in which machines are treated not as experts whose role is to suggest what should be done and why, but as advisers. The objective of these models is to communicate to a human decision-maker not what to decide but how to decide. We argue that machine learning pipelines built this way will be more readily adopted, since they allow the decision-maker to retain agency. Specifically, we develop a framework for learning representations by humans, for humans, in which we learn representations of inputs ("advice") that are effective for human decision-making. Representation-generating models are trained with humans in the loop, implicitly incorporating the human decision-making model. We show that optimizing for human decision-making rather than accuracy is effective in promoting good decisions in various classification tasks while inherently maintaining a sense of interpretability.
