J-Anne Yow

Simulating Safe Bite Transfer in Robot-Assisted Feeding with a Soft Head and Articulated Jaw

Feb 26, 2025

Abstract: Ensuring safe and comfortable bite transfer during robot-assisted feeding is challenging due to the close physical human-robot interaction required. This paper presents a novel approach to modeling physical human-robot interaction in a physics-based simulator (MuJoCo) using soft-body dynamics. We integrate a flexible head model with a rigid skeleton while accounting for internal dynamics, enabling the flexible model to be actuated by the skeleton. Incorporating realistic soft-skin contact dynamics in simulation allows for systematically evaluating bite transfer parameters, such as insertion depth and entry angle, and their impact on user safety and comfort. Our findings suggest that a straight-in-straight-out strategy minimizes forces and enhances user comfort in robot-assisted feeding, assuming a static head. This simulation-based approach offers a safer and more controlled alternative to real-world experimentation. Supplementary videos can be found at: https://tinyurl.com/224yh2kx.

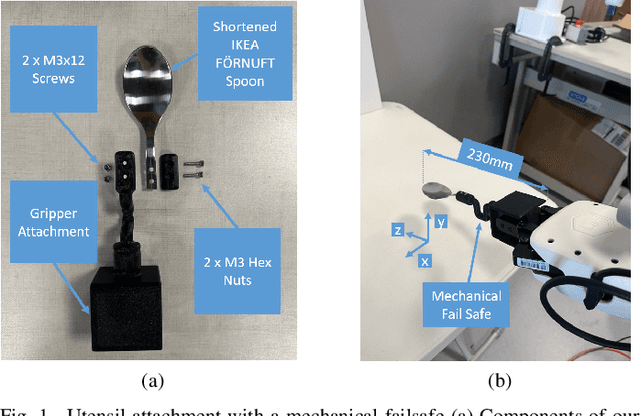

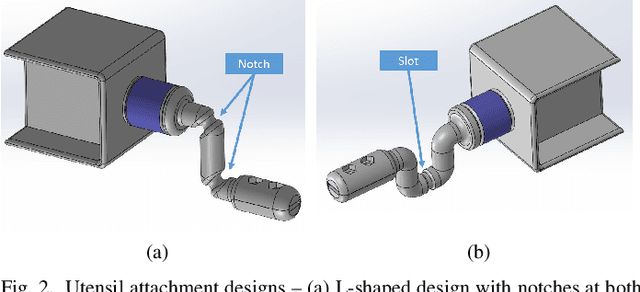

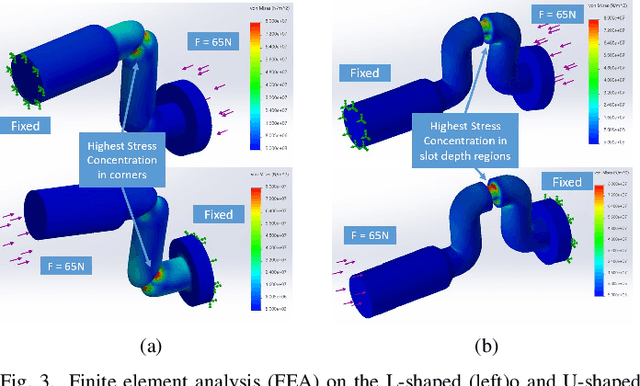

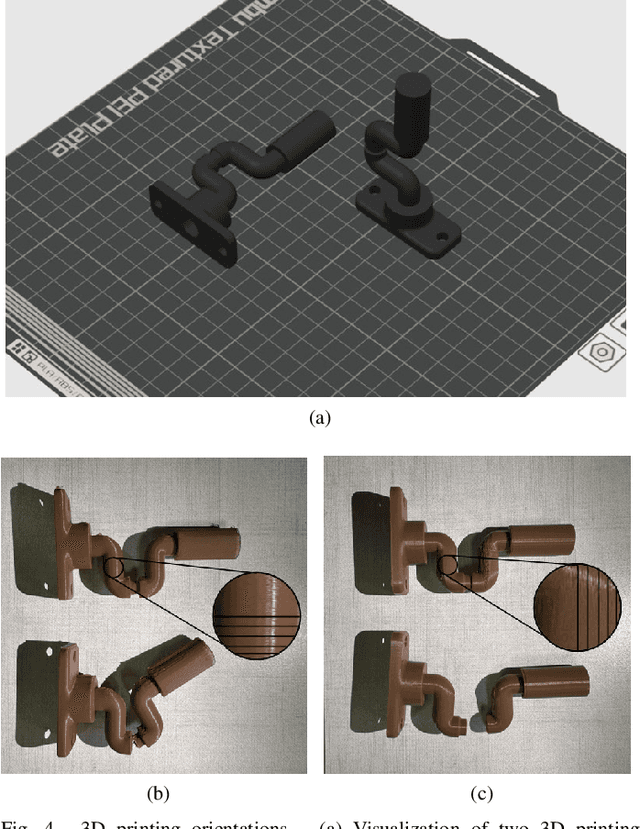

Design of a Breakaway Utensil Attachment for Enhanced Safety in Robot-Assisted Feeding

Feb 25, 2025

Abstract: Robot-assisted feeding systems enhance the independence of individuals with motor impairments and alleviate caregiver burden. While existing systems predominantly rely on software-based safety features to mitigate risks during unforeseen collisions, this study explores the use of a mechanical fail-safe to improve safety. We designed a breakaway utensil attachment that decouples forces exerted by the robot on the user when excessive forces occur. Finite element analysis (FEA) simulations were performed to predict failure points under various loading conditions, followed by experimental validation using 3D-printed attachments with variations in slot depth and wall loops. To facilitate testing, a drop test rig was developed and validated. Our results demonstrated a consistent failure point at the slot of the attachment, with a slot depth of 1 mm and three wall loops achieving failure at the target force of 65 N. Additionally, the parameters can be tailored to customize the breakaway force based on user-specific factors, such as comfort and pain tolerance. CAD files and utensil assembly instructions can be found here: https://tinyurl.com/rfa-utensil-attachment

ExTraCT -- Explainable Trajectory Corrections from language inputs using Textual description of features

Jan 08, 2024

Abstract: Natural language provides an intuitive and expressive way of conveying human intent to robots. Prior works employed end-to-end methods for learning trajectory deformations from language corrections. However, such methods do not generalize to new initial trajectories or object configurations. This work presents ExTraCT, a modular framework for trajectory corrections using natural language that combines Large Language Models (LLMs) for natural language understanding and trajectory deformation functions. Given a scene, ExTraCT generates the trajectory modification features (scene-specific and scene-independent) and their corresponding natural language textual descriptions for the objects in the scene online based on a template. We use LLMs for semantic matching of user utterances to the textual descriptions of features. Based on the feature matched, a trajectory modification function is applied to the initial trajectory, allowing generalization to unseen trajectories and object configurations. Through user studies conducted both in simulation and with a physical robot arm, we demonstrate that trajectories deformed using our method were more accurate and were preferred in about 80% of cases, outperforming the baseline. We also showcase the versatility of our system in a manipulation task and an assistive feeding task.
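
The modular flow described in the abstract (template-generated feature descriptions, semantic matching of the utterance, then a deformation function applied to the initial trajectory) can be illustrated with a toy Python sketch. This is not the authors' implementation: every name here (`Feature`, `build_features`, `match_feature`, `toward`, `shift`) is hypothetical, trajectories are reduced to 2D waypoints, and a simple word-overlap score stands in for the LLM-based semantic matching.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, float]
Trajectory = List[Point]


@dataclass
class Feature:
    description: str                             # template-generated text
    deform: Callable[[Trajectory], Trajectory]   # trajectory modification function


def bump(i: int, n: int) -> float:
    """Weight that is 0 at both endpoints and 1 mid-trajectory (assumed shape)."""
    return 1.0 - abs(2.0 * i / (n - 1) - 1.0) if n > 1 else 0.0


def shift(dx: float, dy: float) -> Callable[[Trajectory], Trajectory]:
    """Scene-independent feature: offset waypoints, keeping start and goal fixed."""
    def apply(traj: Trajectory) -> Trajectory:
        n = len(traj)
        return [(x + bump(i, n) * dx, y + bump(i, n) * dy)
                for i, (x, y) in enumerate(traj)]
    return apply


def toward(ox: float, oy: float, gain: float = 0.5) -> Callable[[Trajectory], Trajectory]:
    """Scene-specific feature: pull waypoints toward an object at (ox, oy)."""
    def apply(traj: Trajectory) -> Trajectory:
        n = len(traj)
        return [(x + gain * bump(i, n) * (ox - x), y + gain * bump(i, n) * (oy - y))
                for i, (x, y) in enumerate(traj)]
    return apply


def build_features(objects: Dict[str, Point]) -> List[Feature]:
    """Generate features and their textual descriptions from a template."""
    features = [Feature("move left", shift(-0.1, 0.0)),
                Feature("move right", shift(0.1, 0.0))]
    for name, (ox, oy) in objects.items():
        features.append(Feature(f"move closer to the {name}", toward(ox, oy)))
    return features


def match_feature(utterance: str, features: List[Feature]) -> Feature:
    """Stand-in for LLM semantic matching: pick the highest word-overlap score."""
    words = set(utterance.lower().split())

    def score(f: Feature) -> float:
        desc = set(f.description.lower().split())
        return len(words & desc) / len(words | desc)

    return max(features, key=score)


objects = {"cup": (1.0, 1.0)}                    # hypothetical scene
initial = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0)]   # hypothetical initial trajectory
matched = match_feature("go closer to the cup", build_features(objects))
corrected = matched.deform(initial)
```

Because the matched feature, not the utterance itself, selects the deformation, the same correction can be applied to any initial trajectory or object placement, which is the generalization property the abstract highlights.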
