Ivy B. Peng

Perspectives on AI Architectures and Co-design for Earth System Predictability

Apr 07, 2023Abstract:Recently, the U.S. Department of Energy (DOE), Office of Science, Biological and Environmental Research (BER), and Advanced Scientific Computing Research (ASCR) programs organized and held the Artificial Intelligence for Earth System Predictability (AI4ESP) workshop series. From this workshop, a critical conclusion that the DOE BER and ASCR community came to is the requirement to develop a new paradigm for Earth system predictability focused on enabling artificial intelligence (AI) across the field, lab, modeling, and analysis activities, called ModEx. The BER's `Model-Experimentation', ModEx, is an iterative approach that enables process models to generate hypotheses. The developed hypotheses inform field and laboratory efforts to collect measurement and observation data, which are subsequently used to parameterize, drive, and test model (e.g., process-based) predictions. A total of 17 technical sessions were held in this AI4ESP workshop series. This paper discusses the topic of the `AI Architectures and Co-design' session and associated outcomes. The AI Architectures and Co-design session included two invited talks, two plenary discussion panels, and three breakout rooms that covered specific topics, including: (1) DOE HPC Systems, (2) Cloud HPC Systems, and (3) Edge computing and Internet of Things (IoT). We also provide forward-looking ideas and perspectives on potential research in this co-design area that can be achieved by synergies with the other 16 session topics. These ideas include topics such as: (1) reimagining co-design, (2) data acquisition to distribution, (3) heterogeneous HPC solutions for integration of AI/ML and other data analytics like uncertainty quantification with earth system modeling and simulation, and (4) AI-enabled sensor integration into earth system measurements and observations. Such perspectives are a distinguishing aspect of this paper.

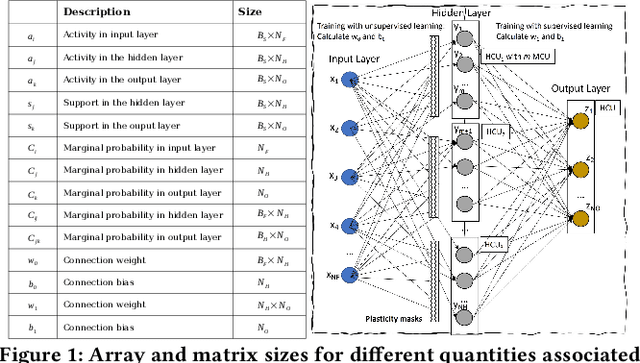

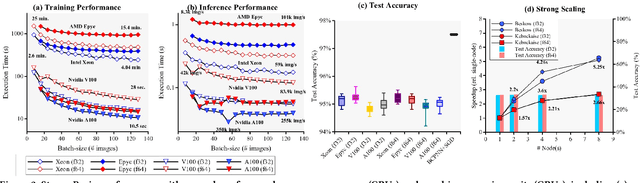

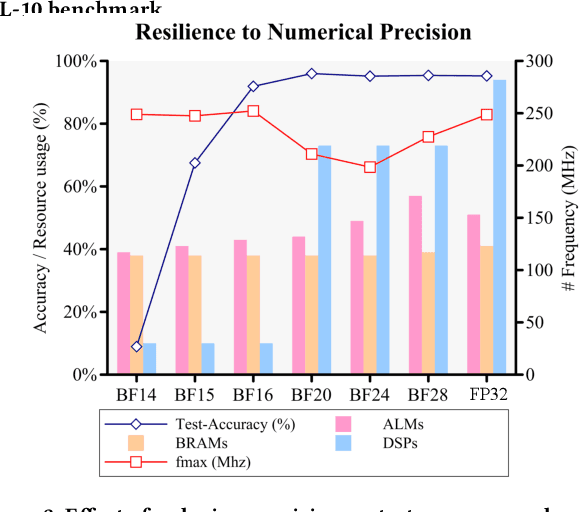

StreamBrain: An HPC Framework for Brain-like Neural Networks on CPUs, GPUs and FPGAs

Jun 09, 2021

Abstract:The modern deep learning method based on backpropagation has surged in popularity and has been used in multiple domains and application areas. At the same time, there are other -- less-known -- machine learning algorithms with a mature and solid theoretical foundation whose performance remains unexplored. One such example is the brain-like Bayesian Confidence Propagation Neural Network (BCPNN). In this paper, we introduce StreamBrain -- a framework that allows neural networks based on BCPNN to be practically deployed in High-Performance Computing systems. StreamBrain is a domain-specific language (DSL), similar in concept to existing machine learning (ML) frameworks, and supports backends for CPUs, GPUs, and even FPGAs. We empirically demonstrate that StreamBrain can train the well-known ML benchmark dataset MNIST within seconds, and we are the first to demonstrate BCPNN on STL-10 size networks. We also show how StreamBrain can be used to train with custom floating-point formats and illustrate the impact of using different bfloat variations on BCPNN using FPGAs.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge