Itai Panasoff

Effective Sampling for Robot Motion Planning Through the Lens of Lattices

Feb 07, 2025

Abstract: Sampling-based methods for motion planning, which capture the structure of the robot's free space via (typically random) sampling, have gained popularity due to their scalability, simplicity, and for offering global guarantees, such as probabilistic completeness and asymptotic optimality. Unfortunately, the practicality of those guarantees remains limited as they do not provide insights into the behavior of motion planners for a finite number of samples (i.e., a finite running time). In this work, we harness lattice theory and the concept of $(\delta,\epsilon)$-completeness by Tsao et al. (2020) to construct deterministic sample sets that endow their planners with strong finite-time guarantees while minimizing running time. In particular, we introduce a highly-efficient deterministic sampling approach based on the $A_d^*$ lattice, which is the best-known geometric covering in dimensions $\leq 21$. Using our new sampling approach, we obtain at least an order-of-magnitude speedup over existing deterministic and uniform random sampling methods for complex motion-planning problems. Overall, our work provides deep mathematical insights while advancing the practical applicability of sampling-based motion planning.

On the Perceptron's Compression

Jun 14, 2018

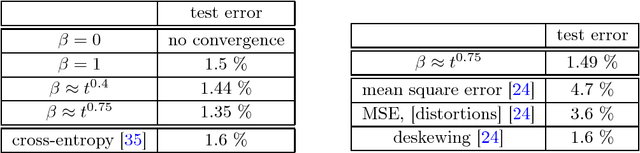

Abstract: We study and provide exposition to several phenomena that are related to the perceptron's compression. One theme concerns modifications of the perceptron algorithm that yield better guarantees on the margin of the hyperplane it outputs. These modifications can be useful in training neural networks as well, and we demonstrate them with some experimental data. In a second theme, we deduce conclusions from the perceptron's compression in various contexts.
