Ibrahim Shaer

Pruning-Based TinyML Optimization of Machine Learning Models for Anomaly Detection in Electric Vehicle Charging Infrastructure

Mar 19, 2025

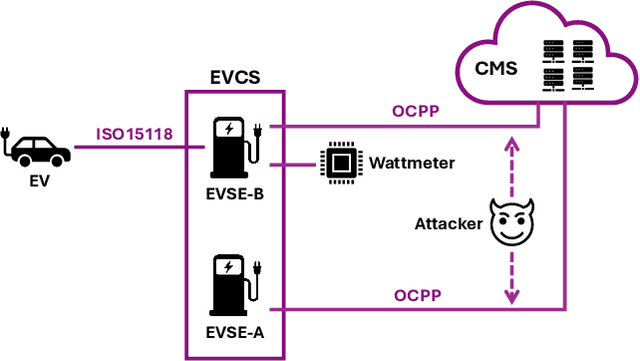

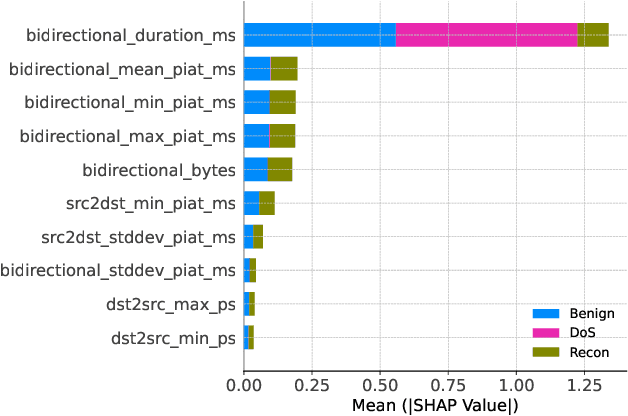

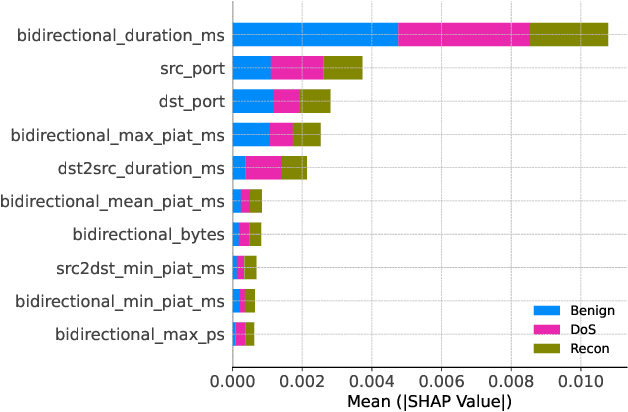

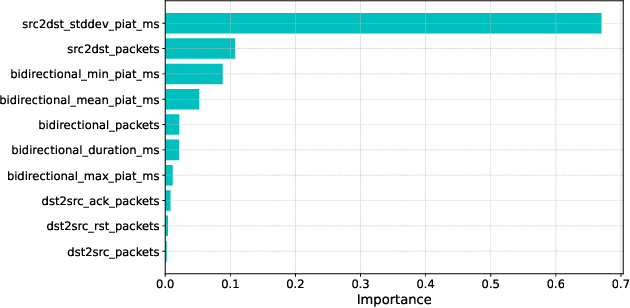

Abstract: With the growing need for real-time processing on IoT devices, optimizing the size, latency, and computational efficiency of machine learning (ML) models is essential. This paper investigates a pruning method for anomaly detection in resource-constrained environments, specifically targeting Electric Vehicle Charging Infrastructure (EVCI). Using the CICEVSE2024 dataset, we trained and optimized three models: Multi-Layer Perceptron (MLP), Long Short-Term Memory (LSTM), and XGBoost. Hyperparameters were tuned with Optuna, and the models were further refined using SHapley Additive exPlanations (SHAP)-based feature selection (FS) and unstructured pruning techniques. The optimized models achieved significant reductions in model size and inference time, with only a marginal impact on their performance. Notably, our findings indicate that, in the context of EVCI, pruning and FS can enhance computational efficiency while retaining critical anomaly detection capabilities.
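To make the idea of unstructured pruning concrete, here is a minimal sketch. It assumes magnitude-based pruning (zeroing the weights with the smallest absolute value), which is one common unstructured criterion; the abstract does not state which criterion the paper uses. The function name `magnitude_prune` and the `sparsity` parameter are hypothetical and chosen for illustration only.

```python
import numpy as np

def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of entries with the smallest |w|.

    Assumption: magnitude is the pruning criterion. The paper's exact
    criterion is not given in the abstract.
    Returns the pruned weights and the boolean keep-mask.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    flat = np.abs(weights).ravel()
    k = int(sparsity * flat.size)          # number of weights to remove
    mask = np.ones(flat.size, dtype=bool)
    if k > 0:
        # Indices of the k smallest magnitudes (order within them is irrelevant).
        drop = np.argpartition(flat, k - 1)[:k]
        mask[drop] = False
    mask = mask.reshape(weights.shape)
    return weights * mask, mask

# Toy weight matrix standing in for one dense layer.
rng = np.random.default_rng(0)
w = rng.normal(size=(8, 4))
pruned, mask = magnitude_prune(w, sparsity=0.5)
```

Because individual weights are removed without regard to their position, the resulting matrix is sparse but keeps its shape. Size and latency gains therefore depend on storing or executing the layer in a sparsity-aware way.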

Thwarting Cybersecurity Attacks with Explainable Concept Drift

Mar 18, 2024

Abstract: Cyber-security attacks pose a significant threat to the operation of autonomous systems. Particularly impacted are the Heating, Ventilation, and Air Conditioning (HVAC) systems in smart buildings, which depend on data gathered by sensors and on Machine Learning (ML) models that use the captured data. As such, attacks that alter the readings of these sensors can severely affect HVAC system operations, impacting residents' comfort and energy reduction goals. Such attacks may induce changes in the online data distribution being fed to the ML models, violating the fundamental assumption that training and testing data follow similar distributions. This leads to a degradation in model prediction accuracy due to a phenomenon known as Concept Drift (CD): the alteration in the relationship between input features and the target variable. Addressing CD requires identifying the source of drift in order to apply targeted mitigation strategies, a process termed drift explanation. This paper proposes a Feature Drift Explanation (FDE) module to identify the drifting features. FDE utilizes an Auto-encoder (AE) that reconstructs the activations of the first layer of the regression Deep Learning (DL) model and finds their latent representations. When a drift is detected, each feature of the drifting data is replaced by its representative counterpart from the training data. The Minkowski distance is then used to measure the divergence between the altered drifting data and the original training data. The results show that FDE successfully identifies 85.77% of drifting features and showcases its utility in the DL adaptation method under the CD phenomenon. As a result, the FDE method is an effective strategy for identifying drifting features towards thwarting cyber-security attacks.
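The replace-and-measure step described above can be sketched as follows. This is a simplified illustration, not the paper's FDE module: it works directly on raw feature means instead of auto-encoder latent representations, and it assumes the training mean is the "representative counterpart" of each feature. The helper names `minkowski` and `rank_drifting_features` are hypothetical.

```python
import numpy as np

def minkowski(a, b, p=2):
    """Minkowski distance of order p between two vectors."""
    return float(np.sum(np.abs(a - b) ** p) ** (1.0 / p))

def rank_drifting_features(train, drifted, p=2):
    """Rank features by how much replacing them shrinks the distance to training data.

    Assumptions (not from the paper): distances are computed between
    per-feature means in raw feature space, and the training mean serves
    as each feature's representative value.
    """
    train_mean = train.mean(axis=0)
    baseline = minkowski(drifted.mean(axis=0), train_mean, p)
    reductions = []
    for j in range(train.shape[1]):
        altered = drifted.copy()
        altered[:, j] = train_mean[j]      # swap in the training representative
        dist = minkowski(altered.mean(axis=0), train_mean, p)
        reductions.append(baseline - dist)
    reductions = np.array(reductions)
    return np.argsort(reductions)[::-1], reductions

# Synthetic data: feature 2 of the drifted batch is shifted by +3.
rng = np.random.default_rng(0)
train = rng.normal(size=(500, 4))
drifted = rng.normal(size=(200, 4))
drifted[:, 2] += 3.0
ranking, reductions = rank_drifting_features(train, drifted)
```

A feature whose replacement removes most of the divergence is a likely source of the drift, so in this toy setup the shifted feature is ranked first.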

Efficient Transformer-based Hyper-parameter Optimization for Resource-constrained IoT Environments

Mar 18, 2024

Abstract: The hyper-parameter optimization (HPO) process is imperative for finding the best-performing Convolutional Neural Networks (CNNs). Automated HPO is characterized by its sizable computational footprint and its lack of transparency, both important factors in a resource-constrained Internet of Things (IoT) environment. In this paper, we address these problems by proposing TRL-HPO, a novel approach that combines the transformer architecture with an actor-critic Reinforcement Learning (RL) model and is equipped with multi-headed attention that enables parallelization and progressive generation of layers. These claims are validated empirically by evaluating TRL-HPO on the MNIST dataset and comparing it with state-of-the-art approaches that build CNN models from scratch. The results show that TRL-HPO outperforms the classification results of these approaches by 6.8% within the same time frame, demonstrating the efficiency of TRL-HPO for the HPO process. The analysis of the results identifies the stacking of fully connected layers as the main culprit for performance degradation. This paper identifies new avenues for improving RL-based HPO processes in resource-constrained environments.

CorrFL: Correlation-Based Neural Network Architecture for Unavailability Concerns in a Heterogeneous IoT Environment

Jul 22, 2023

Abstract: The Federated Learning (FL) paradigm faces several challenges that limit its application in real-world environments. These challenges include the architecture heterogeneity of the local models and the unavailability of distributed Internet of Things (IoT) nodes due to connectivity problems. These factors raise the question of "how can the available models fill the training gap of the unavailable models?". This question is referred to as the "Oblique Federated Learning" problem. This problem is encountered in the studied environment, which includes distributed IoT nodes responsible for predicting CO2 concentrations. This paper proposes the Correlation-based FL (CorrFL) approach, influenced by the representational learning field, to address this problem. CorrFL projects the various model weights to a common latent space to address the model heterogeneity. Its loss function minimizes the reconstruction loss when models are absent and maximizes the correlation between the generated models. The latter factor is critical because the feature spaces of the IoT devices intersect. CorrFL is evaluated on a realistic use case involving the unavailability of one IoT device and heightened activity levels that reflect occupancy. The CorrFL models generated for the unavailable IoT device from the available ones trained on the new environment are compared against models trained on different use cases, referred to as the benchmark model. The evaluation criteria combine the mean absolute error (MAE) of predictions and the impact of the amount of exchanged data on the prediction performance improvement. Through a comprehensive experimental procedure, the CorrFL model outperformed the benchmark model in every criterion.

* 17 pages, 12 figures, IEEE Transactions on Network and Service Management
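The two-term objective described in the CorrFL abstract (reconstruction error to be minimized, correlation to be maximized) can be illustrated with a toy loss. This is only a sketch under strong assumptions: model weights are treated as flat vectors, reconstruction error is mean squared error, correlation is the Pearson coefficient, and the weighting factor `lam` is hypothetical. It does not model the latent-space projection or the handling of absent models.

```python
import numpy as np

def corr_style_loss(original_a, original_b, generated_a, generated_b, lam=1.0):
    """Reconstruction error minus a weighted correlation term.

    Assumptions (not from the paper): MSE as reconstruction loss,
    Pearson correlation between the two generated weight vectors,
    and a scalar trade-off `lam`.
    """
    rec = (np.mean((original_a - generated_a) ** 2)
           + np.mean((original_b - generated_b) ** 2))
    corr = np.corrcoef(generated_a.ravel(), generated_b.ravel())[0, 1]
    # Lower is better: small reconstruction error, high correlation.
    return float(rec - lam * corr)

rng = np.random.default_rng(0)
w_a = rng.normal(size=50)                   # weights of model A (toy)
w_b = w_a + 0.1 * rng.normal(size=50)       # correlated weights of model B (toy)

# Perfect reconstruction of identical models: rec = 0, corr = 1.
perfect = corr_style_loss(w_a, w_a, w_a, w_a)
# A noisy reconstruction of A raises the loss.
noisy = corr_style_loss(w_a, w_b, w_a + 0.5 * rng.normal(size=50), w_b)
```

Subtracting the correlation term means that minimizing the loss pushes the generated models toward each other, which is the behavior the abstract motivates by the overlapping feature spaces of the devices.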

Sound Event Classification in an Industrial Environment: Pipe Leakage Detection Use Case

May 05, 2022

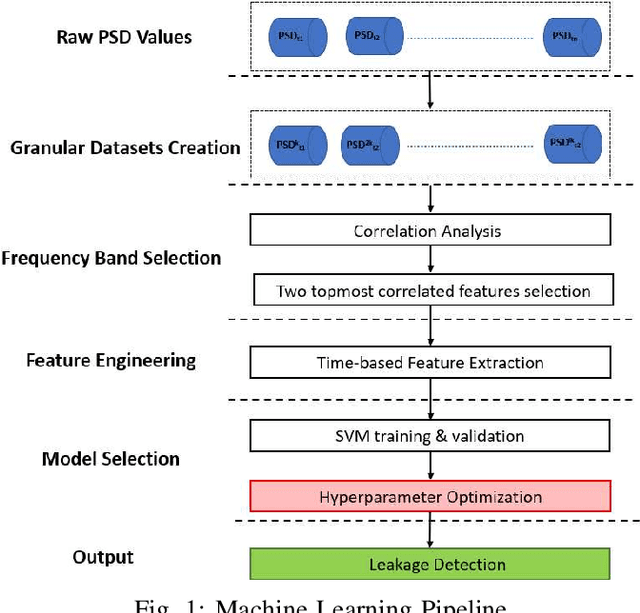

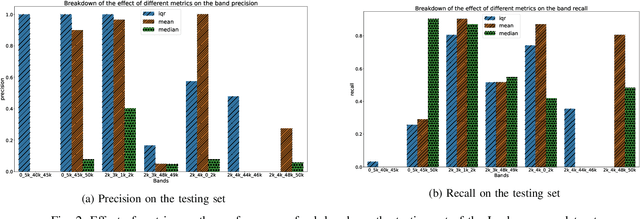

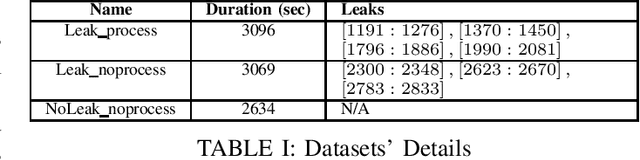

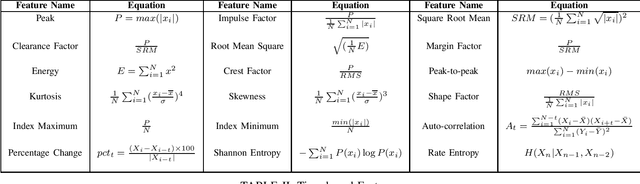

Abstract: In this work, a multi-stage Machine Learning (ML) pipeline is proposed for pipe leakage detection in an industrial environment. As opposed to other industrial and urban environments, the environment under study includes many interfering background noises, complicating the identification of leaks. Furthermore, the harsh environmental conditions limit the amount of data collected and impose the use of low-complexity algorithms. To address these constraints, the developed ML pipeline applies multiple steps, each targeting one of the environment's challenges. The proposed ML pipeline first reduces the data dimensionality through feature selection techniques and then incorporates time correlations by extracting time-based features. The resultant features are fed to a low-complexity Support Vector Machine (SVM) that generalizes well from a small amount of data. An extensive experimental procedure was carried out on two datasets, one with background industrial noise and one without, to evaluate the validity of the proposed pipeline. The SVM hyper-parameters and the parameters specific to the pipeline steps were tuned as part of the experimental procedure. The best models obtained from the dataset with industrial noise and leaks were applied to datasets without noise, with and without leaks, to test their generalizability. The results show that the model produces excellent results, with 99% accuracy and F1-scores of 0.93 and 0.9 for the respective datasets.
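The time-based feature extraction step can be sketched as a sliding-window summary over already-selected features. The specific statistics (window mean and standard deviation), the window length, and the function name `window_features` are assumptions for illustration; the abstract does not say which time-based features the pipeline extracts.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def window_features(frames, window):
    """Summarize each run of `window` consecutive frames by per-feature mean and std.

    frames: array of shape (n_frames, n_features), e.g. selected audio features.
    Returns an array of shape (n_frames - window + 1, 2 * n_features).
    Assumption: mean and std are the time-based features of interest.
    """
    # Resulting shape: (n_frames - window + 1, n_features, window)
    win = sliding_window_view(frames, window, axis=0)
    return np.concatenate([win.mean(axis=-1), win.std(axis=-1)], axis=1)

# Five frames with two features each.
frames = np.arange(10, dtype=float).reshape(5, 2)
feats = window_features(frames, window=3)
```

Each output row could then serve as one input vector to a classifier such as an SVM, so that the classifier sees short-term temporal context rather than isolated frames.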

Concept Drift Detection in Federated Networked Systems

Sep 13, 2021

Abstract: As next-generation networks materialize, increasing levels of intelligence are required. Federated Learning has been identified as a key enabling technology of intelligent and distributed networks; however, like any machine learning application, it is prone to concept drift. Concept drift directly affects the model's performance and can result in severe consequences considering the critical and emergency services provided by modern networks. To mitigate the adverse effects of drift, this paper proposes a concept drift detection system leveraging the federated learning updates provided at each iteration of the federated training process. Using dimensionality reduction and clustering techniques, a framework that isolates the system's drifted nodes is presented and evaluated through experiments using an Intelligent Transportation System as a use case. The presented work demonstrates that the proposed framework is able to detect drifted nodes in a variety of non-iid scenarios at different stages of drift and different levels of system exposure.
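The combination of dimensionality reduction and clustering on per-node updates can be sketched as below. The concrete choices are assumptions, since the abstract names neither technique: the sketch projects updates onto the first principal component (via SVD) and then splits the nodes into two groups at the largest gap in the one-dimensional projection, a simple stand-in for a clustering algorithm. The smaller group is flagged as drifted. The function name `flag_drifted_nodes` is hypothetical.

```python
import numpy as np

def flag_drifted_nodes(updates):
    """Return indices of nodes whose updates form the minority cluster.

    updates: array of shape (n_nodes, n_params), one flattened update per node.
    Assumptions: PCA to one dimension, then a two-way split at the largest
    gap between sorted projections; the minority group is treated as drifted.
    """
    centered = updates - updates.mean(axis=0)
    # Rows of vt are principal directions; vt[0] is the first component.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    proj = centered @ vt[0]
    order = np.argsort(proj)
    gaps = np.diff(proj[order])
    cut = int(np.argmax(gaps)) + 1          # split position in sorted order
    low, high = order[:cut], order[cut:]
    minority = low if len(low) <= len(high) else high
    return np.sort(minority)

# Ten nodes with small random updates; nodes 7 and 8 drift strongly.
rng = np.random.default_rng(0)
updates = rng.normal(scale=0.1, size=(10, 20))
updates[[7, 8]] += 2.0
flagged = flag_drifted_nodes(updates)
```

Treating the minority group as drifted only makes sense when most nodes are unaffected; a scenario where the majority of nodes drift would need a different decision rule.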
