Hyunkyung Han

Mono-Modalizing Extremely Heterogeneous Multi-Modal Medical Image Registration

Jun 18, 2025

Abstract: In clinical practice, imaging modalities with functional characteristics, such as positron emission tomography (PET) and fractional anisotropy (FA), are often aligned with a structural reference (e.g., MRI, CT) for accurate interpretation or group analysis, necessitating multi-modal deformable image registration (DIR). However, due to the extreme heterogeneity of these modalities compared to standard structural scans, conventional unsupervised DIR methods struggle to learn reliable spatial mappings and often distort images. We find that the similarity metrics guiding these models fail to capture alignment between highly disparate modalities. To address this, we propose M2M-Reg (Multi-to-Mono Registration), a novel framework that trains multi-modal DIR models using only mono-modal similarity while preserving the established architectural paradigm for seamless integration into existing models. We also introduce GradCyCon, a regularizer that leverages M2M-Reg's cyclic training scheme to promote diffeomorphism. Furthermore, our framework naturally extends to a semi-supervised setting, integrating pre-aligned and unaligned pairs only, without requiring ground-truth transformations or segmentation masks. Experiments on the Alzheimer's Disease Neuroimaging Initiative (ADNI) dataset demonstrate that M2M-Reg achieves up to 2x higher DSC than prior methods for PET-MRI and FA-MRI registration, highlighting its effectiveness in handling highly heterogeneous multi-modal DIR. Our code is available at https://github.com/MICV-yonsei/M2M-Reg.

Rethinking Random Masking in Self Distillation on ViT

Jun 12, 2025

Abstract: Vision Transformers (ViTs) have demonstrated remarkable performance across a wide range of vision tasks. In particular, self-distillation frameworks such as DINO have contributed significantly to these advances. Within such frameworks, random masking is often utilized to improve training efficiency and introduce regularization. However, recent studies have raised concerns that indiscriminate random masking may inadvertently eliminate critical semantic information, motivating the development of more informed masking strategies. In this study, we explore the role of random masking in the self-distillation setting, focusing on the DINO framework. Specifically, we apply random masking exclusively to the student's global view, while preserving the student's local views and the teacher's global view in their original, unmasked forms. This design leverages DINO's multi-view augmentation scheme to retain clean supervision while inducing robustness through masked inputs. We evaluate our approach using DINO-Tiny on the mini-ImageNet dataset and show that random masking under this asymmetric setup yields more robust and fine-grained attention maps, ultimately enhancing downstream performance.
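The asymmetric masking setup described in this abstract can be illustrated with a minimal Python sketch. It only shows which views receive a random patch mask. The function names, the patch counts, and the 0.3 mask ratio are hypothetical; the abstract does not state the ratio or how masked patches are handled inside the model.

```python
import random

def random_patch_mask(num_patches, mask_ratio, rng):
    """Return one boolean per patch; True marks a patch chosen for masking."""
    num_masked = int(num_patches * mask_ratio)
    masked = set(rng.sample(range(num_patches), num_masked))
    return [i in masked for i in range(num_patches)]

def assign_masks(num_global_patches, num_local_views, mask_ratio, seed=0):
    """Mask only the student's global view (assumed layout of views).

    The teacher's global view and the student's local views stay unmasked,
    represented here by None.
    """
    rng = random.Random(seed)
    return {
        "teacher_global": None,
        "student_global": random_patch_mask(num_global_patches, mask_ratio, rng),
        "student_local": [None] * num_local_views,
    }

# Hypothetical sizes: 196 patches for a global crop, 6 local crops.
masks = assign_masks(num_global_patches=196, num_local_views=6, mask_ratio=0.3)
```

In this sketch the teacher always sees the full global view, so its output remains a clean target while the student has to match it from a partially masked input.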

Capsule Neural Networks as Noise Stabilizer for Time Series Data

Mar 20, 2024

Abstract: Capsule Neural Networks (CapsNets) use capsules, which bind neurons into a single vector and learn position-equivariant features, making them more robust than original Convolutional Neural Networks (CNNs). CapsNets employ an affine transformation matrix and dynamic routing with coupling coefficients to learn robustly. In this paper, we investigate the effectiveness of CapsNets in analyzing highly sensitive and noisy time series sensor data. To demonstrate the robustness of CapsNets, we compare their performance with that of original CNNs on electrocardiogram data, a type of medical time series sensor data with complex patterns and noise. Our study provides empirical evidence that CapsNets function as noise stabilizers, based on an adversarial attack using the fast gradient sign method and three manual attacks: offset shifting, gradual drift, and temporal lagging. In summary, CapsNets outperform CNNs on both manually and adversarially attacked data. Our findings suggest that CapsNets can be effectively applied to various sensor systems to improve their resilience to noise attacks. These results have significant implications for designing and implementing robust machine learning models in real-world applications. Additionally, this study adds evidence for the effectiveness of CapsNet models in handling noisy data and highlights their potential for addressing the challenges of noisy data in time series analysis.

* 3 pages, 3 figures
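The three manual attacks named in the abstract above (offset shifting, gradual drift, temporal lagging) could look roughly like the following Python sketch. The abstract does not define them, so the exact forms here are assumptions: a constant added to every sample, a linearly growing additive term, and a delay that pads the start with the first sample. All function and parameter names are hypothetical.

```python
def offset_shift(signal, offset):
    """Add the same constant to every sample (assumed form of offset shifting)."""
    return [x + offset for x in signal]

def gradual_drift(signal, total_drift):
    """Add a term that grows linearly from 0 to total_drift (assumed form)."""
    n = len(signal)
    if n < 2:
        return list(signal)
    return [x + total_drift * i / (n - 1) for i, x in enumerate(signal)]

def temporal_lag(signal, lag):
    """Delay the signal by `lag` samples, repeating the first sample as padding."""
    if lag <= 0 or not signal:
        return list(signal)
    lag = min(lag, len(signal))
    return [signal[0]] * lag + list(signal[:len(signal) - lag])

# Toy stand-in for an ECG segment; real data would be much longer.
ecg = [0.0, 0.1, 0.9, 0.2, 0.0, -0.1]
shifted = offset_shift(ecg, 0.5)
drifted = gradual_drift(ecg, 0.3)
lagged = temporal_lag(ecg, 2)
```

Each perturbation preserves the signal length, so a perturbed segment can be passed to the same classifier as the clean one for a side-by-side comparison.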

CardioCaps: Attention-based Capsule Network for Class-Imbalanced Echocardiogram Classification

Mar 15, 2024

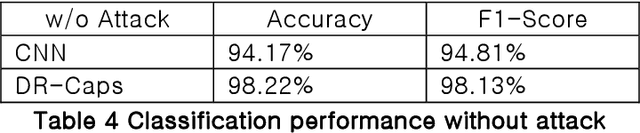

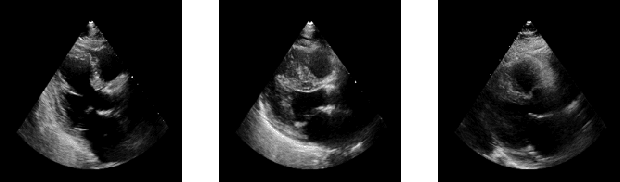

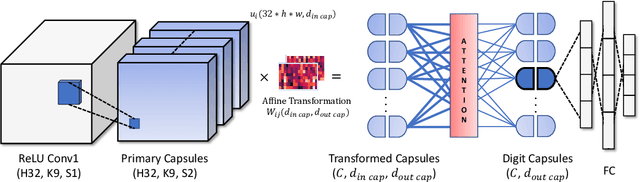

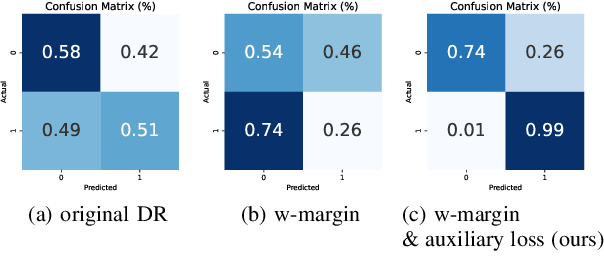

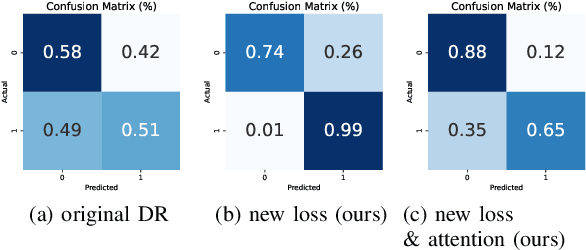

Abstract: Capsule Neural Networks (CapsNets) are a novel architecture that utilizes vector-wise representations formed by multiple neurons. Specifically, Dynamic Routing CapsNets (DR-CapsNets) employ an affine matrix and a dynamic routing mechanism to train capsules and acquire translation-equivariance properties, enhancing their robustness compared to traditional Convolutional Neural Networks (CNNs). Echocardiograms, which capture moving images of the heart, present unique challenges for traditional image classification methods. In this paper, we explore the potential of DR-CapsNets and propose CardioCaps, a novel attention-based DR-CapsNet architecture for class-imbalanced echocardiogram classification. CardioCaps comprises two key components: a weighted margin loss incorporating a regression auxiliary loss and an attention mechanism. First, the weighted margin loss prioritizes positive cases, supplemented by an auxiliary loss function based on the Ejection Fraction (EF) regression task, a crucial measure of cardiac function. This approach enhances the model's resilience in the face of class imbalance. Second, recognizing that the quadratic complexity of dynamic routing leads to training inefficiencies, we adopt the attention mechanism as a more computationally efficient alternative. Our results demonstrate that CardioCaps surpasses traditional machine learning baseline methods, including Logistic Regression, Random Forest, and XGBoost with sampling methods and a class weight matrix. Furthermore, CardioCaps outperforms other deep learning baseline methods such as CNNs, ResNets, U-Nets, and ViTs, as well as advanced CapsNets methods such as EM-CapsNets and Efficient-CapsNets. Notably, our model demonstrates robustness to class imbalance, achieving high precision even in datasets with a substantial proportion of negative cases.

* 8 pages, 6 figures
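As a rough illustration of the loss described in the abstract above, the Python sketch below combines the standard capsule margin loss (margins 0.9 and 0.1, down-weighting factor 0.5) with a per-sample weight for positive cases and a squared-error term for EF regression. The weighting scheme, `pos_weight`, `aux_weight`, and all function names are assumptions for illustration; the abstract does not give the exact formulation used in CardioCaps.

```python
def weighted_margin_loss(lengths, target, positive_class=1, pos_weight=2.0,
                         m_plus=0.9, m_minus=0.1, lam=0.5):
    """Capsule margin loss, scaled up when the true class is the positive class.

    lengths: capsule output lengths, one per class, each in [0, 1].
    target:  index of the true class.
    """
    loss = 0.0
    for k, length in enumerate(lengths):
        if k == target:
            # Penalize a short capsule for the class that is present.
            loss += max(0.0, m_plus - length) ** 2
        else:
            # Penalize a long capsule for a class that is absent.
            loss += lam * max(0.0, length - m_minus) ** 2
    weight = pos_weight if target == positive_class else 1.0  # assumed scheme
    return weight * loss

def total_loss(lengths, target, ef_pred, ef_true, aux_weight=0.1):
    """Weighted margin loss plus an assumed squared-error EF regression term."""
    return weighted_margin_loss(lengths, target) + aux_weight * (ef_pred - ef_true) ** 2

# Hypothetical sample: positive case, EF expressed as a fraction.
loss = total_loss(lengths=[0.2, 0.7], target=1, ef_pred=0.55, ef_true=0.60)
```

With `pos_weight` above 1, an error on a positive case costs more than the same error on a negative case, which is one simple way to counter class imbalance.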
