Hyun Jae Jang

Network Representation Learning for Biophysical Neural Network Analysis

Oct 15, 2024

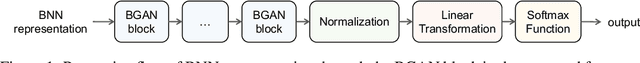

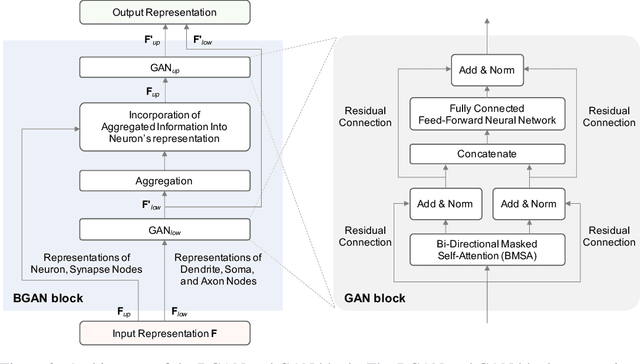

Abstract: The analysis of biophysical neural networks (BNNs) has long been a focus of computational neuroscience. A central yet unresolved challenge in BNN analysis is deciphering the correlations among neuronal and synaptic dynamics, connectivity patterns, and the learning process. To address this, we introduce a novel BNN analysis framework grounded in network representation learning (NRL), which uses attention scores to uncover intricate correlations between network components and their features. Our framework integrates three elements: a new computational graph (CG)-based BNN representation; a bio-inspired graph attention network (BGAN) that enables multiscale correlation analysis across BNN representations; and an extensive BNN dataset. The CG-based representation captures the key computational features, information flow, and structural relationships underlying neuronal and synaptic dynamics, while BGAN reflects the compositional structure of neurons (dendrites, somas, and axons) as well as the bidirectional information flow between BNN components. The dataset comprises publicly available models from ModelDB, reconstructed in Python and standardized in NeuroML format, and is augmented with data derived from canonical neuron and synapse models. To our knowledge, this study is the first to apply an NRL-based approach to the full spectrum of BNNs and their analysis.
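The attention scores mentioned above can be illustrated with a minimal sketch of the standard graph attention (GAT) coefficient computation, assuming a toy graph of compartments (dendrite, soma, axon) with edges added in both directions to mimic bidirectional information flow. This is the generic GAT scoring rule, not the BGAN architecture itself; the function names, the weight matrix `W`, the attention vector `a`, and the example features are all hypothetical.

```python
import math


def leaky_relu(x, slope=0.2):
    """LeakyReLU nonlinearity used in standard GAT scoring."""
    return x if x > 0 else slope * x


def matvec(W, h):
    """Multiply matrix W (list of rows) by vector h."""
    return [sum(w * x for w, x in zip(row, h)) for row in W]


def gat_attention(features, edges, W, a):
    """Return {(i, j): alpha_ij}, the attention node i pays to neighbor j.

    features: list of node feature vectors (hypothetical example data)
    edges: list of (src, dst) pairs; dst attends over all of its srcs
    W: shared linear projection; a: attention vector of length 2 * dim(W h)
    """
    z = [matvec(W, h) for h in features]  # projected features W h_i
    neighbors = {i: [] for i in range(len(features))}
    for src, dst in edges:
        neighbors[dst].append(src)
    alphas = {}
    for i, nbrs in neighbors.items():
        if not nbrs:
            continue
        # e_ij = LeakyReLU(a^T [W h_i || W h_j]); list "+" concatenates
        scores = [leaky_relu(sum(ak * v for ak, v in zip(a, z[i] + z[j])))
                  for j in nbrs]
        m = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        for j, e in zip(nbrs, exps):
            alphas[(i, j)] = e / total
    return alphas


# Hypothetical compartments: 0 = dendrite, 1 = soma, 2 = axon
feats = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
forward = [(0, 1), (1, 2)]                          # dendrite -> soma -> axon
edges = forward + [(d, s) for s, d in forward]      # add reverse edges
alphas = gat_attention(feats, edges, [[1.0, 0.0], [0.0, 1.0]], [0.5, -0.5, 1.0, 1.0])
print(alphas)
```

Each node's coefficients over its neighbors sum to 1, so the soma's scores for the dendrite and axon can be compared directly as a relative measure of how strongly each neighbor influences it.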

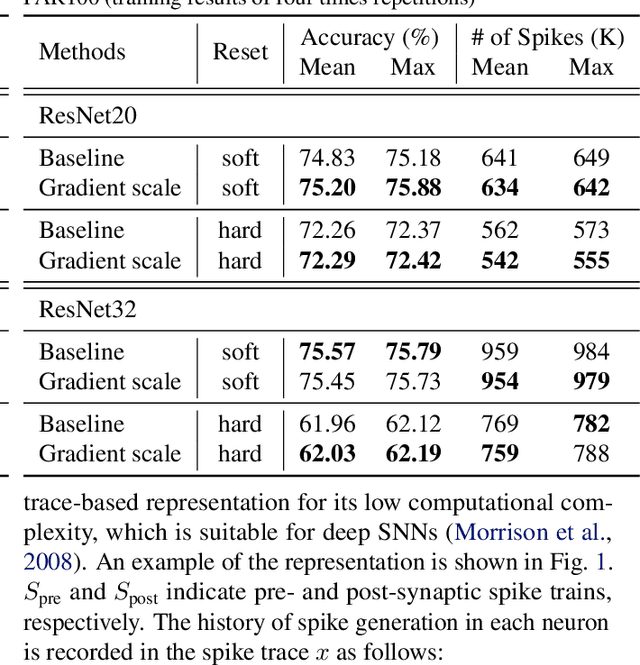

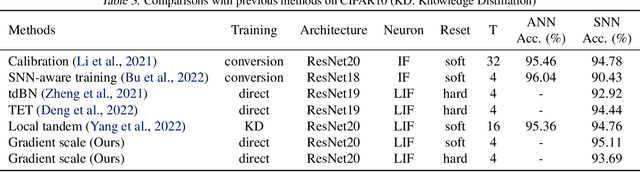

Gradient Scaling on Deep Spiking Neural Networks with Spike-Dependent Local Information

Aug 01, 2023

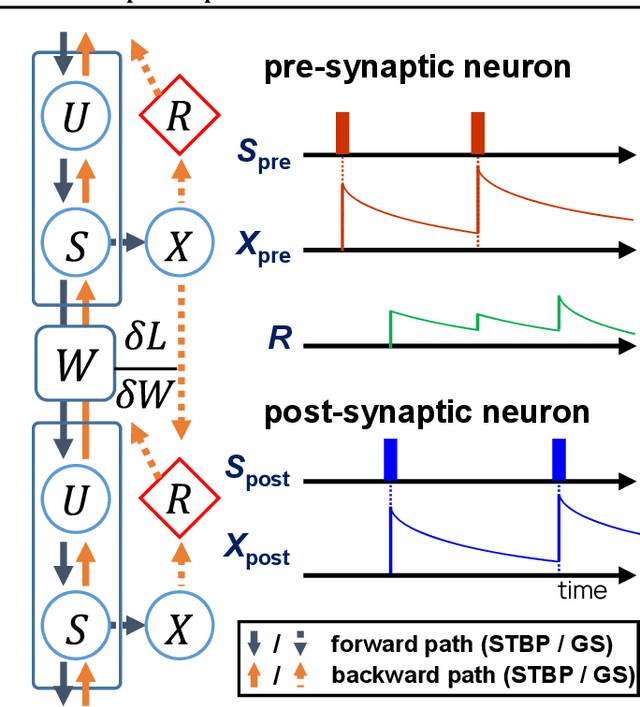

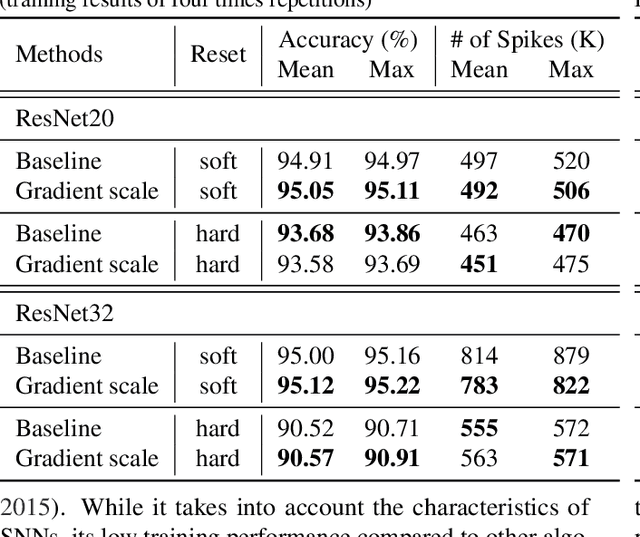

Abstract: Deep spiking neural networks (SNNs) are promising because they combine the model capacity of deep neural network architectures with the energy efficiency of spike-based operation. Spatio-temporal backpropagation (STBP) with surrogate gradients was recently proposed to train deep SNNs. Although deep SNNs have been trained successfully with STBP, this approach does not fully exploit spike information. In this work, we propose gradient scaling with local spike information, namely the relation between pre- and post-synaptic spikes. By taking the causality between spikes into account, we enhance the training performance of deep SNNs. In our experiments on image classification tasks such as CIFAR10 and CIFAR100, gradient scaling achieved higher accuracy with fewer spikes.
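To make the idea concrete, the sketch below combines a rectangular surrogate gradient with a spike-causality scale factor for a single synaptic weight. It is an illustration under stated assumptions, not the paper's method: the rectangular surrogate, the rule "boost the gradient when the pre-synaptic and post-synaptic neurons both spike at the same step", the `boost` value, and all function names are hypothetical. It also keeps only the direct per-step term du[t]/dw = pre[t] and ignores the recurrence through earlier membrane potentials that full STBP would backpropagate through.

```python
def surrogate_grad(u, threshold=1.0, width=1.0):
    """Rectangular surrogate for dS/du: 1/width near the threshold, else 0."""
    return 1.0 / width if abs(u - threshold) < width / 2 else 0.0


def scaled_weight_grad(grad_out, u, pre, post, threshold=1.0, width=1.0, boost=1.5):
    """Accumulate dL/dw for one synapse over time with spike-causality scaling.

    grad_out[t]: dL/dS at step t for the post-synaptic neuron
    u[t]: post-synaptic membrane potential; pre[t], post[t]: spikes (0 or 1)
    Hypothetical rule: scale by `boost` when pre and post both spike at t.
    Only the direct term du[t]/dw = pre[t] is kept (temporal recurrence ignored).
    """
    total = 0.0
    for t in range(len(u)):
        local = grad_out[t] * surrogate_grad(u[t], threshold, width) * pre[t]
        scale = boost if (pre[t] and post[t]) else 1.0
        total += scale * local
    return total


# Hypothetical three-step trace: the neuron fires only at t = 0 (u >= 1.0)
grad = scaled_weight_grad([1.0, 1.0, 1.0], [1.2, 0.2, 0.9], [1, 1, 1], [1, 0, 0])
plain = scaled_weight_grad([1.0, 1.0, 1.0], [1.2, 0.2, 0.9], [1, 1, 1], [1, 0, 0], boost=1.0)
print(grad, plain)
```

With `boost=1.0` the function reduces to an ordinary surrogate-gradient update, so the difference between the two results isolates the contribution of the causal-spike scaling.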
