Hyemin Um

Multiple resolution residual network for automatic thoracic organs-at-risk segmentation from CT

May 31, 2020

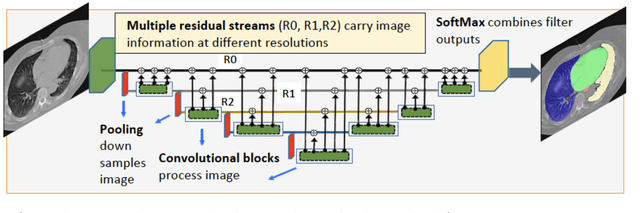

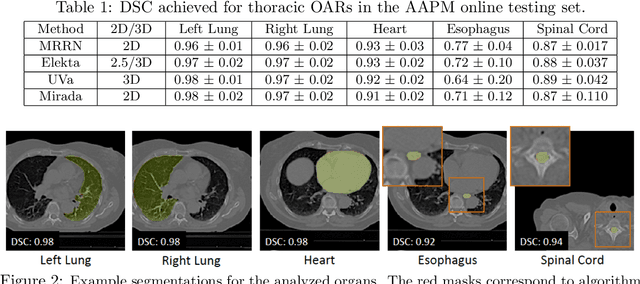

Abstract: We implemented and evaluated a multiple resolution residual network (MRRN) for segmenting multiple normal organs-at-risk (OARs) from computed tomography (CT) images for thoracic radiotherapy treatment (RT) planning. Our approach simultaneously combines feature streams computed at multiple image resolutions and feature levels through residual connections. The feature streams at each level are updated as the images are passed through the various feature levels. We trained our approach to segment the left and right lungs, heart, esophagus, and spinal cord using 206 thoracic CT scans of lung cancer patients, with 35 scans held out for validation. The approach was tested on 60 CT scans from the open-source AAPM Thoracic Auto-Segmentation Challenge dataset. Performance was measured using the Dice Similarity Coefficient (DSC). Our approach outperformed the best-performing method in the grand challenge for hard-to-segment structures such as the esophagus and achieved comparable results for all other structures. Median DSC using our method was 0.97 (interquartile range [IQR]: 0.97-0.98) for the left and right lungs, 0.93 (IQR: 0.93-0.95) for the heart, 0.78 (IQR: 0.76-0.80) for the esophagus, and 0.88 (IQR: 0.86-0.89) for the spinal cord.
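The abstract reports accuracy as a median DSC with an interquartile range but does not spell out the computation. As a minimal sketch, assuming the standard definition DSC = 2|A ∩ B| / (|A| + |B|) on binary masks, the metric and its summary statistics could be computed as follows. The function names `dice_coefficient` and `median_iqr`, and the convention of returning 1.0 when both masks are empty, are assumptions for illustration and are not taken from the paper.

```python
import numpy as np


def dice_coefficient(pred, truth):
    """Dice similarity coefficient between two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    if pred.shape != truth.shape:
        raise ValueError("masks must have the same shape")
    denom = pred.sum() + truth.sum()
    if denom == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    overlap = np.logical_and(pred, truth).sum()
    return float(2.0 * overlap / denom)


def median_iqr(scores):
    """Return (median, first quartile, third quartile) of per-scan scores."""
    q1, med, q3 = np.percentile(np.asarray(scores, dtype=float), [25, 50, 75])
    return float(med), float(q1), float(q3)


if __name__ == "__main__":
    # Toy 2D masks standing in for one organ on one CT slice.
    pred = np.array([[1, 1, 0], [0, 1, 0]])
    truth = np.array([[1, 0, 0], [0, 1, 1]])
    print(dice_coefficient(pred, truth))
```

In practice the score would be computed per organ and per scan on the full 3D label volume, and `median_iqr` would then summarize the per-scan scores for each organ.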

Local block-wise self attention for normal organ segmentation

Sep 11, 2019

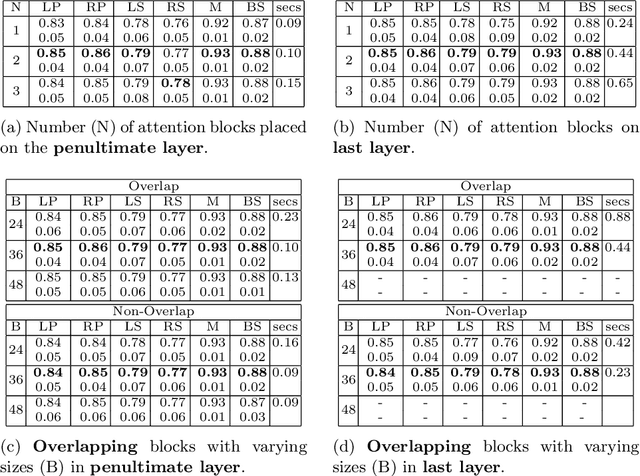

Abstract: We developed a new and computationally simple local block-wise self attention based approach for segmenting normal structures in head and neck computed tomography (CT) images. Our method uses the insight that normal organs exhibit regularity in their spatial location and inter-relation within images, which can be leveraged to simplify the computations required to aggregate feature information. We accomplish this by using local self attention blocks that pass information between each other to derive the attention map. We show that adding attention layers increases the contextual field and captures focused attention from relevant structures. We developed our approach using U-net and compared it against multiple state-of-the-art self attention methods. All models were trained on 48 internal head and neck CT scans and tested on 48 CT scans from the external public domain database of computational anatomy dataset. Our method achieved the highest Dice similarity coefficient segmentation accuracy of 0.85$\pm$0.04 and 0.86$\pm$0.04 for the left and right parotid glands, 0.79$\pm$0.07 and 0.77$\pm$0.05 for the left and right submandibular glands, 0.93$\pm$0.01 for the mandible, and 0.88$\pm$0.02 for the brain stem, with the lowest increase in cost relative to standard U-net: 66.7% more computing time per image and 0.15% more model parameters. The best state-of-the-art method, point-wise spatial attention, achieved comparable accuracy but with a 516.7% increase in computing time and an 8.14% increase in parameters compared with standard U-net. Finally, we performed ablation tests and studied the impact of attention block size, overlap of the attention blocks, additional attention layers, and attention block placement on segmentation performance.
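The abstract describes restricting self attention to local blocks to reduce the cost of aggregating feature information. The following is a simplified sketch of that general idea only, not the authors' implementation: it splits a 2D feature map into non-overlapping square blocks and applies scaled dot-product self attention inside each block, with a residual connection. The function name `block_self_attention`, the projection matrices `wq`, `wk`, `wv`, and the non-overlapping layout are all assumptions; the paper's mechanism for passing information between blocks, and its overlapping-block variants, are not modeled here.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def block_self_attention(feat, block, wq, wk, wv):
    """Self attention restricted to non-overlapping block x block windows.

    feat: (H, W, C) feature map; H and W must be multiples of `block`.
    wq, wk: (C, D) query/key projections; wv: (C, C) value projection.
    Returns an (H, W, C) array: the input plus the attended features.
    """
    h, w, c = feat.shape
    if h % block or w % block:
        raise ValueError("H and W must be divisible by the block size")
    nh, nw = h // block, w // block

    # Group pixels by block: (nh*nw blocks, block*block pixels, C channels).
    blocks = (feat.reshape(nh, block, nw, block, c)
                  .transpose(0, 2, 1, 3, 4)
                  .reshape(nh * nw, block * block, c))

    q, k, v = blocks @ wq, blocks @ wk, blocks @ wv
    # Attention scores are computed only between pixels of the same block.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])
    attended = softmax(scores, axis=-1) @ v

    # Undo the block grouping to recover the (H, W, C) layout.
    out = (attended.reshape(nh, nw, block, block, c)
                   .transpose(0, 2, 1, 3, 4)
                   .reshape(h, w, c))
    return feat + out  # residual connection


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feat = rng.normal(size=(8, 8, 4))
    wq, wk = rng.normal(size=(4, 2)), rng.normal(size=(4, 2))
    wv = rng.normal(size=(4, 4))
    print(block_self_attention(feat, 4, wq, wk, wv).shape)
```

In this sketch, each pixel attends to only block² neighbors instead of all H·W positions, so the attention matrix shrinks from (H·W)² entries to H·W·block² entries. A single layer of this kind cannot see outside its block, which is consistent with the abstract's observation that adding attention layers increases the contextual field.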
