Hung Truong Thanh Nguyen

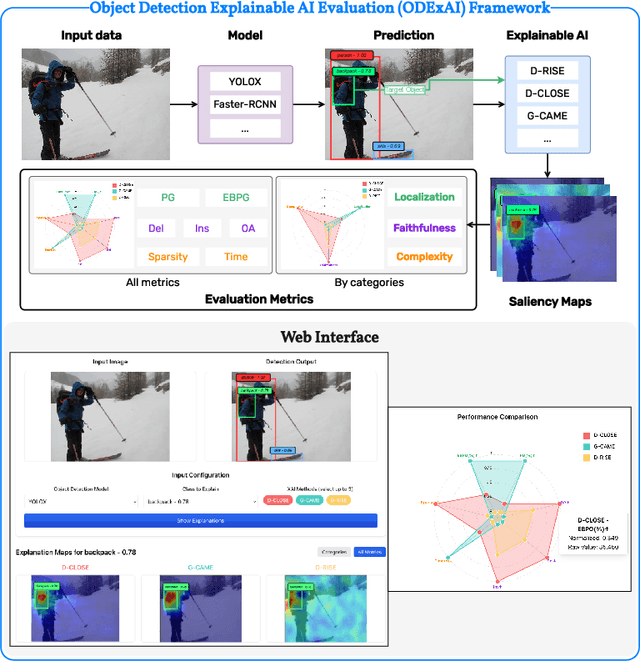

ODExAI: A Comprehensive Object Detection Explainable AI Evaluation

Apr 27, 2025

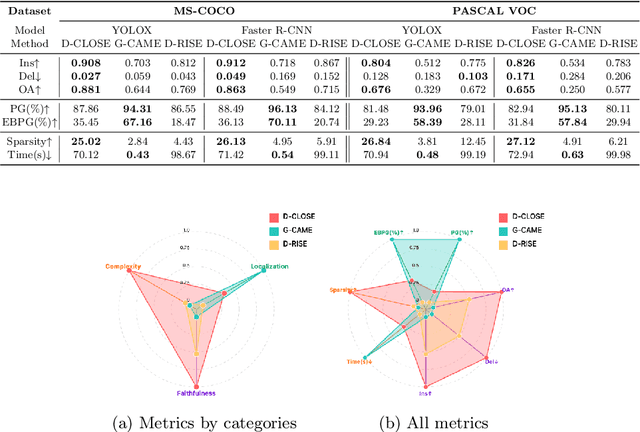

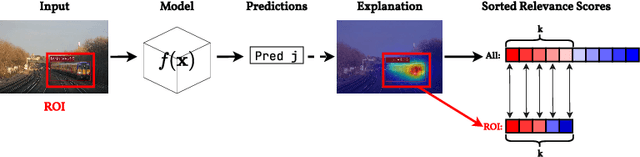

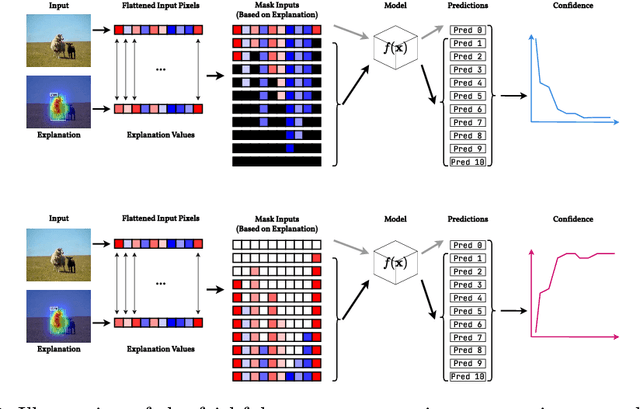

Abstract: Explainable Artificial Intelligence (XAI) techniques for interpreting object detection models remain in an early stage, with no established standards for systematic evaluation. This absence of consensus hinders both the comparative analysis of methods and the informed selection of suitable approaches. To address this gap, we introduce the Object Detection Explainable AI Evaluation (ODExAI), a comprehensive framework designed to assess XAI methods in object detection based on three core dimensions: localization accuracy, faithfulness to model behavior, and computational complexity. We benchmark a set of XAI methods across two widely used object detectors (YOLOX and Faster R-CNN) and standard datasets (MS-COCO and PASCAL VOC). Empirical results demonstrate that region-based methods (e.g., D-CLOSE) achieve strong localization (PG = 88.49%) and high model faithfulness (OA = 0.863), though with substantial computational overhead (Time = 71.42s). On the other hand, CAM-based methods (e.g., G-CAME) achieve superior localization (PG = 96.13%) and significantly lower runtime (Time = 0.54s), but at the expense of reduced faithfulness (OA = 0.549). These findings demonstrate critical trade-offs among existing XAI approaches and reinforce the need for task-specific evaluation when deploying them in object detection pipelines. Our implementation and evaluation benchmarks are publicly available at: https://github.com/Analytics-Everywhere-Lab/odexai.
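To make the localization dimension concrete, here is a minimal sketch of a pointing-game style score. It assumes that PG in the abstract refers to the pointing game, in which an explanation counts as a hit when its most salient pixel falls inside the ground-truth bounding box. The function names, the nested-list saliency format, and the inclusive `(x_min, y_min, x_max, y_max)` box convention are illustrative choices, not taken from the ODExAI repository.

```python
def pointing_game_hit(saliency, box):
    """Return True if the most salient pixel lies inside the box.

    saliency: 2D list of floats, indexed as saliency[y][x] (assumed format).
    box: (x_min, y_min, x_max, y_max), inclusive pixel coordinates (assumed).
    """
    best_value = float("-inf")
    best_x, best_y = 0, 0
    # Locate the pixel with the highest saliency value.
    for y, row in enumerate(saliency):
        for x, value in enumerate(row):
            if value > best_value:
                best_value, best_x, best_y = value, x, y
    x_min, y_min, x_max, y_max = box
    return x_min <= best_x <= x_max and y_min <= best_y <= y_max


def pointing_game_score(samples):
    """Percentage of (saliency, box) pairs whose peak falls inside the box."""
    hits = sum(pointing_game_hit(saliency, box) for saliency, box in samples)
    return 100.0 * hits / len(samples)


# Toy data: the first map peaks inside its box, the second peaks outside.
map_hit = [[0.1, 0.2, 0.1], [0.0, 0.9, 0.3], [0.1, 0.2, 0.1]]
map_miss = [[0.8, 0.1], [0.1, 0.2]]
score = pointing_game_score([(map_hit, (0, 0, 1, 1)), (map_miss, (1, 1, 1, 1))])
```

Under this reading, a figure such as PG = 96.13% would mean that the saliency peak landed inside the target box for roughly 96 of every 100 explained detections.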

Beyond explaining: XAI-based Adaptive Learning with SHAP Clustering for Energy Consumption Prediction

Feb 07, 2024

Abstract: This paper presents an approach integrating explainable artificial intelligence (XAI) techniques with adaptive learning to enhance energy consumption prediction models, with a focus on handling data distribution shifts. Leveraging SHAP clustering, our method provides interpretable explanations for model predictions and uses these insights to adaptively refine the model, balancing model complexity with predictive performance. We introduce a three-stage process: (1) obtaining SHAP values to explain model predictions, (2) clustering SHAP values to identify distinct patterns and outliers, and (3) refining the model based on the derived SHAP clustering characteristics. Our approach mitigates overfitting and ensures robustness in handling data distribution shifts. We evaluate our method on a comprehensive dataset comprising energy consumption records of buildings, as well as two additional datasets to assess the transferability of our approach to other domains and to both regression and classification problems. Our experiments demonstrate the effectiveness of our approach in both task types, resulting in improved predictive performance and interpretable model explanations.
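The first two stages of the three-stage process can be sketched in plain Python under strong simplifying assumptions. This sketch assumes a linear model with independent features, for which the SHAP value of feature j on sample i has the closed form w_j * (x_ij - mean_j), and it swaps in a basic Lloyd's k-means with a deterministic start for whatever clustering the paper actually uses. All function names and the toy data are hypothetical. Stage 3 (model refinement) is omitted because the abstract does not describe how it is done.

```python
from math import dist


def linear_shap_values(X, weights):
    """Stage 1 (assumed linear model): phi[i][j] = w_j * (x_ij - mean_j)."""
    n = len(X)
    means = [sum(row[j] for row in X) / n for j in range(len(weights))]
    return [[w * (x - m) for w, x, m in zip(weights, row, means)] for row in X]


def kmeans(points, k, iters=20):
    """Stage 2 (stand-in): Lloyd's k-means, initialised from the first k points."""
    centers = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assign each SHAP vector to its nearest center.
        labels = [min(range(k), key=lambda c: dist(p, centers[c])) for p in points]
        # Move each center to the mean of its assigned vectors.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centers


# Toy data: two well-separated groups of samples, interleaved.
X = [[0.0, 0.0], [10.0, 10.0], [0.2, 0.1], [9.8, 10.1], [0.1, 0.3], [10.2, 9.9]]
weights = [1.0, 2.0]  # hypothetical coefficients of the linear model

phi = linear_shap_values(X, weights)
labels, centers = kmeans(phi, k=2)
```

Clustering in SHAP space rather than in raw feature space groups samples by how the model arrives at its prediction, which is what lets the resulting clusters expose distinct explanation patterns and outliers.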
