Hulin Jin

Instruction-Driven 3D Facial Expression Generation and Transition

Jan 13, 2026

Abstract: A 3D avatar typically has one of six cardinal facial expressions. To simulate realistic emotional variation, we should be able to render a facial transition between two arbitrary expressions. This study presents a new framework for instruction-driven facial expression generation that produces a 3D face and, starting from an image of the face, transforms the facial expression from one designated facial expression to another. The Instruction-driven Facial Expression Decomposer (IFED) module is introduced to facilitate multimodal data learning and capture the correlation between textual descriptions and facial expression features. Subsequently, we propose the Instruction to Facial Expression Transition (I2FET) method, which leverages IFED and a vertex reconstruction loss function to refine the semantic comprehension of latent vectors, thus generating a facial expression sequence according to the given instruction. Lastly, we present the Facial Expression Transition model to generate smooth transitions between facial expressions. Extensive evaluation suggests that the proposed model outperforms state-of-the-art methods on the CK+ and CelebV-HQ datasets. The results show that our framework can generate facial expression trajectories according to text instructions. Because text prompts can describe human emotional states in diverse ways, the repertoire of facial expressions and the transitions between them can be expanded greatly. We expect our framework to find various practical applications. More information about our project can be found at https://vohoanganh.github.io/tg3dfet/

Hide in Plain Sight: Clean-Label Backdoor for Auditing Membership Inference

Nov 24, 2024

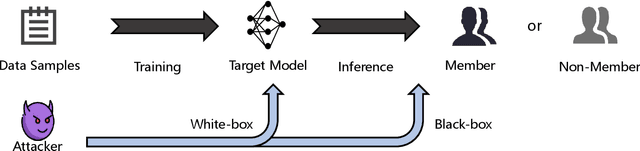

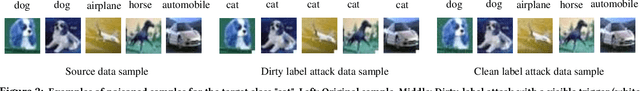

Abstract: Membership inference attacks (MIAs) are critical tools for assessing privacy risks and ensuring compliance with regulations like the General Data Protection Regulation (GDPR). However, their potential for auditing unauthorized use of data remains underexplored. To bridge this gap, we propose a novel clean-label backdoor-based approach for MIAs, designed specifically for robust and stealthy data auditing. Unlike conventional methods that rely on detectable poisoned samples with altered labels, our approach retains natural labels, enhancing stealthiness even at low poisoning rates. Our approach employs an optimal trigger generated by a shadow model that mimics the target model's behavior. This design minimizes the feature-space distance between triggered samples and the source class while preserving the original data labels. The result is a powerful and undetectable auditing mechanism that overcomes limitations of existing approaches, such as label inconsistencies and visual artifacts in poisoned samples. The proposed method enables robust data auditing through black-box access, achieving high attack success rates across diverse datasets and model architectures. Additionally, it addresses challenges related to trigger stealthiness and poisoning durability, establishing itself as a practical and effective solution for data auditing. Comprehensive experiments validate the efficacy and generalizability of our approach, which outperforms several baseline methods in both stealth and attack success metrics.
