Huiqin Jiang

CCAT-NET: A Novel Transformer Based Semi-supervised Framework for Covid-19 Lung Lesion Segmentation

Apr 06, 2022

Abstract: The spread of the novel coronavirus disease 2019 (COVID-19) has claimed millions of lives. Automatic segmentation of lesions from CT images can assist doctors with screening, treatment, and monitoring. However, accurate segmentation of lesions from CT images can be very challenging due to data and model limitations. Recently, Transformer-based networks have attracted a lot of attention in computer vision, as Transformers outperform CNNs on many tasks. In this work, we propose a novel network structure that combines a CNN and a Transformer for the segmentation of COVID-19 lesions. We further propose an efficient semi-supervised learning framework to address the shortage of labeled data. Extensive experiments showed that our proposed network outperforms most existing networks, and that the semi-supervised learning framework can outperform the base network by 3.0% and 8.2% in terms of Dice coefficient and sensitivity, respectively.
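For readers unfamiliar with the two metrics named in the abstract, the following is a minimal sketch of how the Dice coefficient and sensitivity are commonly computed for binary segmentation masks. It is not taken from the paper: the function names, the flat 0/1 list representation, and the toy masks are assumptions made for illustration.

```python
def dice_coefficient(pred, target):
    """Dice = 2 * |P and T| / (|P| + |T|) for binary masks given as flat 0/1 sequences."""
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    # Convention (an assumption here): two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


def sensitivity(pred, target):
    """Sensitivity (recall) = true positives / all ground-truth positives."""
    true_positives = sum(p * t for p, t in zip(pred, target))
    positives = sum(target)
    # Convention (an assumption here): no positives in the ground truth yields 1.0.
    return 1.0 if positives == 0 else true_positives / positives


# Hypothetical toy masks: 1 marks a lesion pixel, 0 marks background.
example_pred = [1, 1, 0, 0, 1, 0]
example_target = [1, 0, 0, 1, 1, 0]

example_dice = dice_coefficient(example_pred, example_target)
example_sensitivity = sensitivity(example_pred, example_target)
```

In practice the masks would be flattened 2D or 3D CT label volumes; the arithmetic is the same.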

AMA-GCN: Adaptive Multi-layer Aggregation Graph Convolutional Network for Disease Prediction

Jun 16, 2021

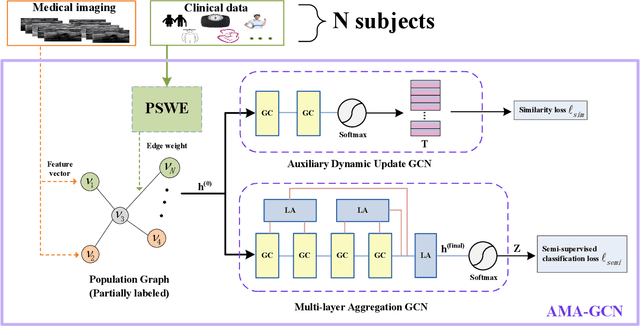

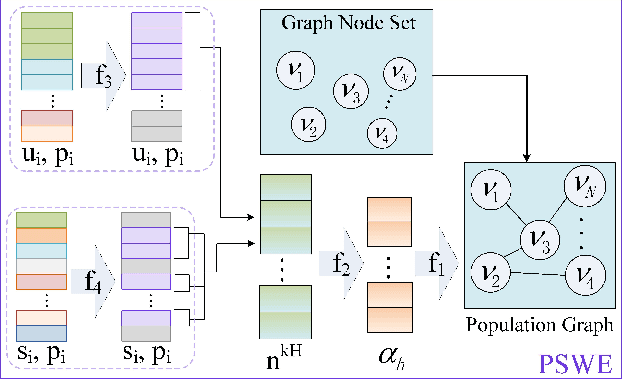

Abstract: Recently, Graph Convolutional Networks (GCNs) have proven to be a powerful means for Computer-Aided Diagnosis (CADx). This approach requires building a population graph to aggregate structural information, where the graph adjacency matrix represents the relationships between nodes. Until now, this adjacency matrix has usually been defined manually based on phenotypic information. In this paper, we propose an encoder that automatically selects the appropriate phenotypic measures according to their spatial distribution and uses a text similarity awareness mechanism to calculate the edge weights between nodes. The encoder automatically constructs the population graph using phenotypic measures that have a positive impact on the final results, and further realizes the fusion of multimodal information. In addition, a novel graph convolutional network architecture using a multi-layer aggregation mechanism is proposed. The structure can obtain deep structural information while suppressing over-smoothing, and increases the similarity between nodes of the same type. Experimental results on two databases show that our method can significantly improve the diagnostic accuracy for autism spectrum disorder and breast cancer, indicating its universality in leveraging multimodal data for disease prediction.
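To make the idea of a population graph concrete, here is a minimal sketch of the manual baseline the abstract describes: subjects are nodes, and an edge weight counts how many phenotypic measures two subjects share. It also shows one simple neighbourhood-averaging step of the kind a graph convolution builds on. This is not the encoder or the multi-layer aggregation architecture proposed in the paper; the function names, the dictionary representation of phenotypes, and the toy data are all assumptions made for illustration.

```python
def build_population_graph(phenotypes):
    """Return a symmetric adjacency matrix.

    phenotypes: list of dicts, one per subject (hypothetical representation).
    Edge weight = number of phenotypic measures with identical values.
    """
    n = len(phenotypes)
    adj = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            weight = sum(
                1.0
                for key, value in phenotypes[i].items()
                if key in phenotypes[j] and phenotypes[j][key] == value
            )
            adj[i][j] = adj[j][i] = weight
    return adj


def propagate(adj, features):
    """One mean-aggregation step with self-loops: H' = D^-1 (A + I) H."""
    n = len(adj)
    out = []
    for i in range(n):
        # Add a self-loop so every node keeps part of its own feature vector.
        weights = [adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
        total = sum(weights)
        dim = len(features[i])
        out.append(
            [sum(weights[j] * features[j][d] for j in range(n)) / total for d in range(dim)]
        )
    return out


# Hypothetical toy cohort: three subjects with two phenotypic measures each.
subjects = [
    {"sex": "F", "site": "A"},
    {"sex": "F", "site": "A"},
    {"sex": "M", "site": "B"},
]
node_features = [[1.0], [3.0], [10.0]]

adjacency = build_population_graph(subjects)
smoothed = propagate(adjacency, node_features)
```

Repeating the averaging step many times pulls connected nodes toward the same values, which is the over-smoothing effect the abstract mentions; the learned weights and nonlinearities of a real GCN layer are omitted here.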
