Hovannes Kulhandjian

AI-based Drone Assisted Human Rescue in Disaster Environments: Challenges and Opportunities

Jun 22, 2024

Abstract:This survey focuses on using drone-based systems to detect individuals, particularly by identifying human screams and other distress signals. The study is relevant to post-disaster scenarios such as earthquakes, hurricanes, military conflicts, and wildfires. Unmanned aerial vehicles (UAVs), commonly referred to as drones, are frequently deployed for search-and-rescue missions during disasters, and they can hover over disaster-stricken areas that rescue teams may find difficult to access directly. Typically, drones capture aerial images to assess structural damage and determine the extent of the disaster. They also employ thermal imaging to detect body heat signatures, which can help locate individuals. In some cases, larger drones are used to deliver essential supplies to people stranded in isolated disaster-stricken areas. We discuss the unique challenges of locating humans through aerial acoustics. The auditory system must distinguish human cries from naturally occurring sounds, such as animal calls and wind. It should also be capable of recognizing distinct patterns in signals such as shouting, clapping, or other ways in which people attempt to alert rescue teams. One solution is to use artificial intelligence (AI) to analyze sound frequencies and identify common audio signatures. Deep learning-based networks, such as convolutional neural networks (CNNs), can be trained on these signatures to filter out noise generated by drone motors and other environmental factors. Furthermore, signal processing techniques such as direction of arrival (DOA) estimation based on microphone array signals can improve the precision of tracking the source of human sounds.
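As a rough illustration of the DOA idea mentioned in the abstract, the sketch below estimates an arrival angle from two microphones by cross-correlating their signals and converting the resulting time delay to an angle under a far-field assumption. This is not the survey's method: the function names, the two-microphone geometry, the 0.2 m spacing, the 48 kHz sample rate, and the noise-like test signal are all assumptions chosen for the example.

```python
import numpy as np

SPEED_OF_SOUND_M_S = 343.0  # assumed value for air at about 20 degrees C


def estimate_delay_samples(mic_ref, mic_other):
    """Return the lag (in samples) at which mic_other best matches mic_ref."""
    correlation = np.correlate(mic_other, mic_ref, mode="full")
    # In "full" mode, index len(mic_ref) - 1 corresponds to zero lag.
    return int(np.argmax(correlation)) - (len(mic_ref) - 1)


def estimate_doa_degrees(mic_ref, mic_other, sample_rate_hz, spacing_m):
    """Far-field angle from broadside for a two-microphone pair (hypothetical helper)."""
    delay_s = estimate_delay_samples(mic_ref, mic_other) / sample_rate_hz
    # sin(theta) = c * tau / d; clip guards against delays beyond the physical limit.
    sin_theta = np.clip(SPEED_OF_SOUND_M_S * delay_s / spacing_m, -1.0, 1.0)
    return float(np.degrees(np.arcsin(sin_theta)))


# Synthetic check: the second microphone hears the same noise burst 14 samples later.
rng = np.random.default_rng(0)
source = rng.standard_normal(4096)
true_delay = 14
mic_ref = source
mic_other = np.concatenate([np.zeros(true_delay), source[:-true_delay]])

angle = estimate_doa_degrees(mic_ref, mic_other, sample_rate_hz=48_000, spacing_m=0.2)
print(f"estimated angle: {angle:.1f} degrees")
```

With these assumed parameters, a 14-sample delay corresponds to an angle of roughly 30 degrees from broadside. A real drone-mounted array would have to cope with rotor noise, reverberation, and sub-sample delays, none of which this sketch addresses.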

Delay-Doppler Domain Pulse Design for OTFS-NOMA

May 29, 2024

Abstract:We address the challenge of developing an orthogonal time-frequency space (OTFS)-based non-orthogonal multiple access (NOMA) system where each user is modulated using orthogonal pulses in the delay-Doppler domain. Building upon the concept of the sufficient (bi)orthogonality train-pulse [1], we extend this idea by introducing Hermite functions, known for their orthogonality properties. Simulation results demonstrate that our proposed Hermite functions outperform the traditional OTFS-NOMA schemes, including power-domain (PDM) NOMA and code-domain (CDM) NOMA, in terms of bit error rate (BER) over a high-mobility channel. The algorithm's complexity is minimal, primarily involving the demodulation of OTFS. The spectrum efficiency of Hermite-based OTFS-NOMA is K times that of the OTFS-CDM-NOMA scheme, where K is the spreading length of the NOMA waveform.
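The orthogonality property that the abstract relies on can be checked numerically. The sketch below builds the first few orthonormal Hermite functions and computes their Gram matrix on a finite grid, which should be close to the identity. It only illustrates the mathematical property, not the paper's pulse design; the helper names, grid width, and number of points are assumptions made for the example.

```python
import math

import numpy as np
from numpy.polynomial.hermite import hermval


def hermite_function(n, x):
    """Orthonormal Hermite function psi_n(x) built from the physicists' polynomial H_n."""
    coeffs = np.zeros(n + 1)
    coeffs[n] = 1.0  # select H_n in the Hermite series
    norm = 1.0 / math.sqrt((2.0 ** n) * math.factorial(n) * math.sqrt(math.pi))
    return norm * np.exp(-x ** 2 / 2.0) * hermval(x, coeffs)


def gram_matrix(num_functions, half_width=12.0, num_points=4001):
    """Approximate the inner products <psi_m, psi_n> with a Riemann sum on a finite grid."""
    x = np.linspace(-half_width, half_width, num_points)
    dx = x[1] - x[0]
    basis = np.stack([hermite_function(n, x) for n in range(num_functions)])
    return basis @ basis.T * dx


gram = gram_matrix(4)
print(np.round(gram, 6))
```

Off-diagonal entries near zero indicate that pulses of different order do not interfere in this idealized setting; how that carries over to a doubly dispersive channel is what the paper's simulations address.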

NOMA Computation Over Multi-Access Channels for Multimodal Sensing

Jan 01, 2022

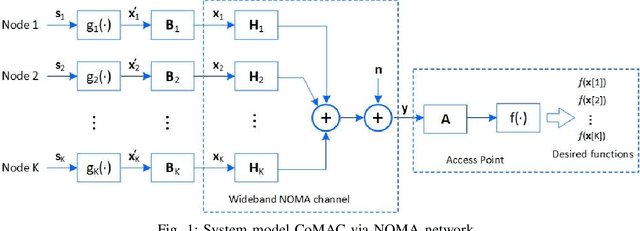

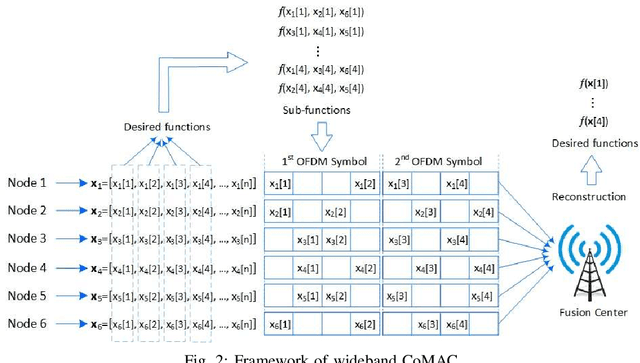

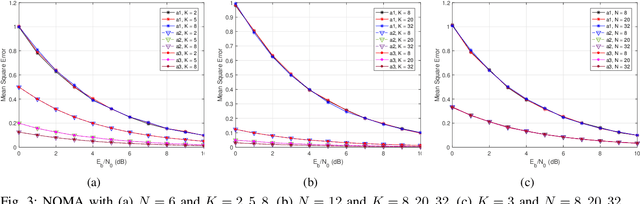

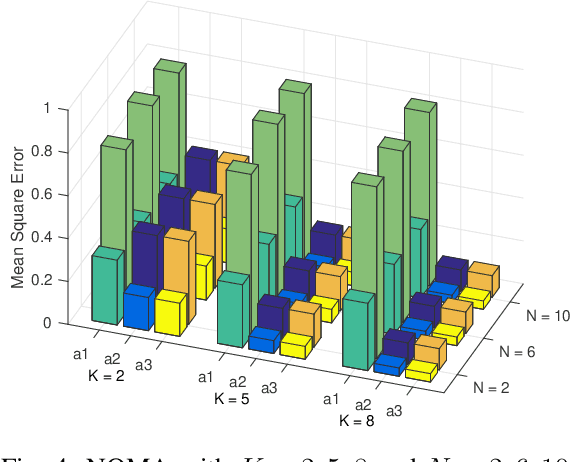

Abstract:An improved mean squared error (MSE) minimization solution based on an eigenvector decomposition approach is conceived for the wideband non-orthogonal multiple-access based computation over multi-access channel (NOMA-CoMAC) framework. This work aims at further developing NOMA-CoMAC for next-generation multimodal sensor networks, where a multimodal sensor monitors several environmental parameters such as temperature, pollution, humidity, or pressure. We demonstrate that our proposed scheme achieves an MSE value approximately 0.7 lower at E_b/N_0 = 1 dB than the average sum-channel based method. Moreover, the MSE performance gain of our proposed solution increases further for larger numbers of subcarriers and sensor nodes due to the benefit of the diversity gain. This, in turn, suggests that our proposed scheme is eminently suitable for multimodal sensor networks.
