Hossein Soleimani

Energy Efficient Task Offloading in UAV-Enabled MEC Using a Fully Decentralized Deep Reinforcement Learning Approach

Aug 09, 2025

Abstract:Unmanned aerial vehicles (UAVs) have been recently utilized in multi-access edge computing (MEC) as edge servers. It is desirable to design UAVs' trajectories and user to UAV assignments to ensure satisfactory service to the users and energy efficient operation simultaneously. The posed optimization problem is challenging to solve because: (i) The formulated problem is non-convex, (ii) Due to the mobility of ground users, their future positions and channel gains are not known in advance, (iii) Local UAVs' observations should be communicated to a central entity that solves the optimization problem. The (semi-) centralized processing leads to communication overhead, communication/processing bottlenecks, lack of flexibility and scalability, and loss of robustness to system failures. To simultaneously address all these limitations, we advocate a fully decentralized setup with no centralized entity. Each UAV obtains its local observation and then communicates with its immediate neighbors only. After sharing information with neighbors, each UAV determines its next position via a locally run deep reinforcement learning (DRL) algorithm. None of the UAVs need to know the global communication graph. Two main components of our proposed solution are (i) Graph attention layers (GAT), and (ii) Experience and parameter sharing proximal policy optimization (EPS-PPO). Our proposed approach eliminates all the limitations of semi-centralized MADRL methods such as MAPPO and MA deep deterministic policy gradient (MADDPG), while guaranteeing a better performance than independent local DRLs such as in IPPO. Numerical results reveal notable performance gains in several different criteria compared to the existing MADDPG algorithm, demonstrating the potential for offering a better performance, while utilizing local communications only.
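The neighbor-only information sharing described in this abstract can be illustrated with a minimal graph-attention aggregation step. The sketch below is not the authors' implementation: the function name `attend_to_neighbors`, the feature vectors, and the attention vector `attn_vec` are hypothetical, and the learned linear transform that a full GAT layer applies before attention is omitted for brevity.

```python
import math


def leaky_relu(x, slope=0.2):
    """LeakyReLU, the nonlinearity commonly used for GAT attention scores."""
    return x if x > 0 else slope * x


def attend_to_neighbors(own, neighbors, attn_vec):
    """Fuse a UAV's own features with those of its one-hop neighbors.

    own:       feature vector of this UAV (hypothetical local observation embedding)
    neighbors: feature vectors received from immediate neighbors only
    attn_vec:  attention vector of length 2 * len(own); learned in practice,
               supplied by hand here (assumption for illustration)
    """
    candidates = [own] + neighbors  # self-loop plus one-hop neighbors
    scores = []
    for feat in candidates:
        pair = own + feat  # concatenation [h_i || h_j]
        scores.append(leaky_relu(sum(a * x for a, x in zip(attn_vec, pair))))

    # Softmax over the local neighborhood; no global graph knowledge is needed.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]

    fused = [
        sum(w * feat[k] for w, feat in zip(weights, candidates))
        for k in range(len(own))
    ]
    return fused, weights
```

Because the softmax runs only over the messages a UAV actually receives, each agent can evaluate this step locally, which is consistent with the abstract's point that no UAV needs the global communication graph.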

GNSS/GPS Spoofing and Jamming Identification Using Machine Learning and Deep Learning

Jan 04, 2025

Abstract:The increasing reliance on Global Navigation Satellite Systems (GNSS), particularly the Global Positioning System (GPS), underscores the urgent need to safeguard these technologies against malicious threats such as spoofing and jamming. As the backbone for positioning, navigation, and timing (PNT) across various applications, including transportation, telecommunications, and emergency services, GNSS is vulnerable to deliberate interference that poses significant risks. Spoofing attacks, which involve transmitting counterfeit GNSS signals to mislead receivers into calculating incorrect positions, can result in serious consequences, from navigational errors in civilian aviation to security breaches in military operations. Furthermore, the lack of inherent security measures within GNSS systems makes them attractive targets for adversaries. While GNSS/GPS jamming and spoofing systems consist of numerous components, the ability to distinguish authentic signals from malicious ones is essential for maintaining system integrity. Recent advancements in machine learning and deep learning provide promising avenues for enhancing detection and mitigation strategies against these threats. This paper addresses both spoofing and jamming by tackling real-world challenges through machine learning, deep learning, and computer vision techniques. Through extensive experiments on two real-world datasets related to spoofing and jamming detection using advanced algorithms, we achieved state-of-the-art results. In the GNSS/GPS jamming detection task, we attained approximately 99% accuracy, improving performance by around 5% compared to previous studies. Additionally, we addressed a challenging task related to spoofing detection, yielding results that underscore the potential of machine learning and deep learning in this domain.

Deep Learning Framework for the Design of Orbital Angular Momentum Generators Enabled by Leaky-wave Holograms

Apr 25, 2023

Abstract:In this paper, we present a novel approach for the design of leaky-wave holographic antennas that generate OAM-carrying electromagnetic waves by combining Flat Optics (FO) and machine learning (ML) techniques. To improve the performance of our system, we use a machine learning technique to discover a mathematical function that can effectively control the entire radiation pattern, i.e., decrease the side lobe level (SLL) while simultaneously increasing the central null depth of the radiation pattern. Precise tuning of the parameters of the impedance equation based on holographic theory is necessary to achieve optimal results in a variety of scenarios. In this research, we applied machine learning to determine the approximate values of the parameters. We can determine the optimal values for each parameter, resulting in the desired radiation pattern, using a total of 77,000 generated datasets. Furthermore, the use of ML not only saves time, but also yields more precise and accurate results than manual parameter tuning and conventional optimization methods.

Self-Supervised In-Domain Representation Learning for Remote Sensing Image Scene Classification

Feb 03, 2023

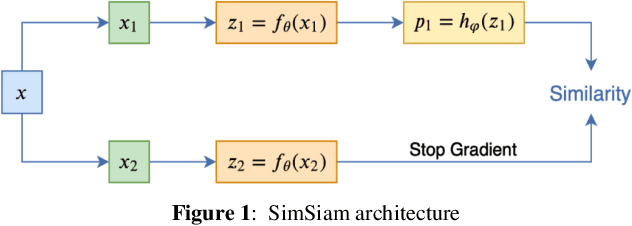

Abstract:Transferring the ImageNet pre-trained weights to the various remote sensing tasks has produced acceptable results and reduced the need for labeled samples. However, the domain differences between ground imageries and remote sensing images cause the performance of such transfer learning to be limited. Recent research has demonstrated that self-supervised learning methods capture visual features that are more discriminative and transferable than the supervised ImageNet weights. We are motivated by these facts to pre-train the in-domain representations of remote sensing imagery using contrastive self-supervised learning and transfer the learned features to other related remote sensing datasets. Specifically, we used the SimSiam algorithm to pre-train the in-domain knowledge of remote sensing datasets and then transferred the obtained weights to the other scene classification datasets. Thus, we have obtained state-of-the-art results on five land cover classification datasets with varying numbers of classes and spatial resolutions. In addition, by conducting appropriate experiments, including feature pre-training using datasets with different attributes, we have identified the most influential factors that make a dataset a good choice for obtaining in-domain features. We have transferred the features obtained by pre-training SimSiam on remote sensing datasets to various downstream tasks and used them as initial weights for fine-tuning. Moreover, we have linearly evaluated the obtained representations in cases where the number of samples per class is limited. Our experiments have demonstrated that using a higher-resolution dataset during the self-supervised pre-training stage results in learning more discriminative and general representations.
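For readers unfamiliar with SimSiam, the pre-training objective named in this abstract is a symmetrized negative cosine similarity between the predictor output of one augmented view and the encoder output of the other. The sketch below shows only that loss on plain Python lists; the variable names (`p1`, `z1`, `p2`, `z2`) follow the usual SimSiam notation, and the encoder, predictor, augmentations, and remote sensing data pipeline used in the paper are not reproduced here.

```python
import math


def negative_cosine(p, z):
    """Negative cosine similarity between prediction p and target z.

    In a deep learning framework, z would be detached from the computation
    graph (the stop-gradient operation); plain Python has no gradients, so
    that step appears here only as this comment.
    """
    dot = sum(a * b for a, b in zip(p, z))
    norm_p = math.sqrt(sum(a * a for a in p))
    norm_z = math.sqrt(sum(b * b for b in z))
    return -dot / (norm_p * norm_z)


def simsiam_loss(p1, z1, p2, z2):
    """Symmetrized SimSiam loss for two augmented views of one image.

    p1, p2: predictor outputs for view 1 and view 2
    z1, z2: encoder (projector) outputs for view 1 and view 2
    """
    return 0.5 * negative_cosine(p1, z2) + 0.5 * negative_cosine(p2, z1)
```

The loss reaches its minimum of -1 when each prediction points in the same direction as the other view's embedding, and it is 0 when they are orthogonal.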

Simultaneous estimation of wall and object parameters in TWR using deep neural network

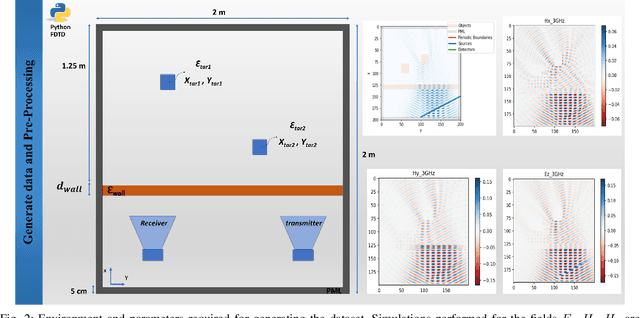

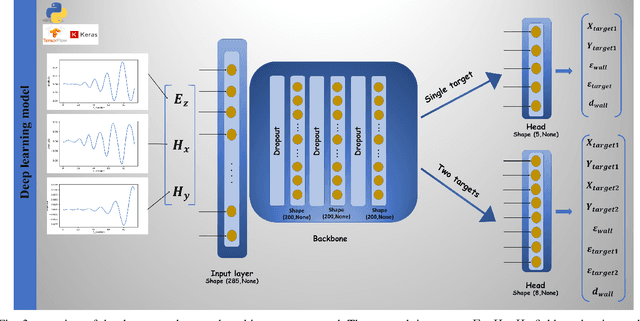

Oct 30, 2021

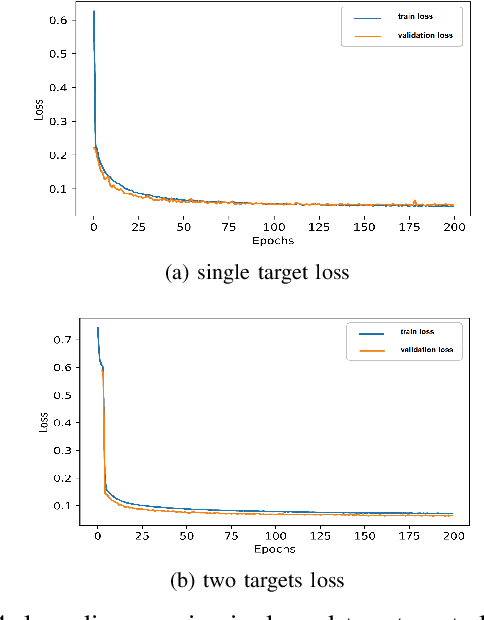

Abstract:This paper presents a deep learning model for simultaneously estimating target and wall parameters in Through-the-Wall Radar. In this work, we consider two modes: single-target and two-targets. In both cases, we consider the permittivity and thickness for the wall, as well as the two-dimensional coordinates of the target's center and permittivity. This means that in the case of a single target, we estimate five values, whereas, in the case of two targets, we estimate eight values simultaneously, each of which represents the mentioned parameters. We discovered that when using deep neural networks to solve the target locating problem, giving the model more parameters of the problem increases the location accuracy. As a result, we included two wall parameters in the problem and discovered that the accuracy of target locating improves while the wall parameters are estimated. We were able to estimate the parameters of wall permittivity and thickness, as well as two-dimensional coordinates and permittivity of targets in single-target and two-target modes with 99% accuracy by using a deep neural network model.

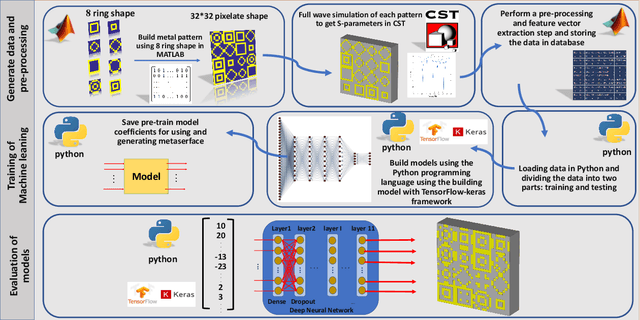

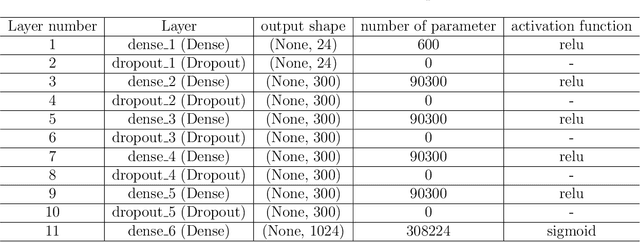

A deep learning approach for inverse design of the metasurface for dual-polarized waves

May 12, 2021

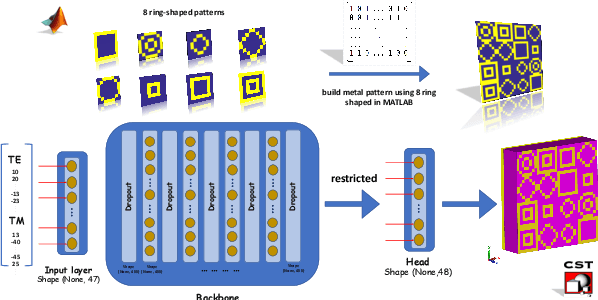

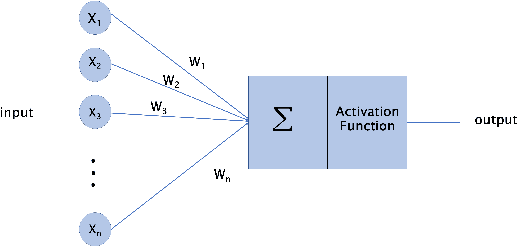

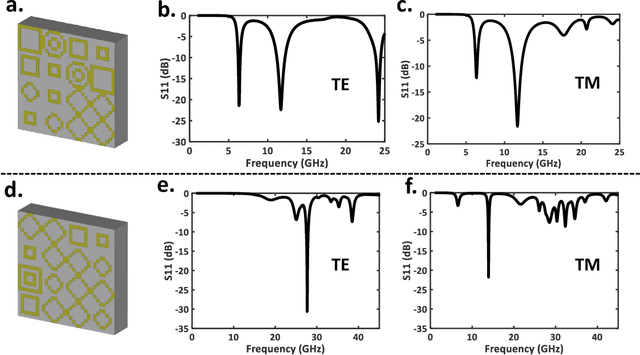

Abstract:Compared to the conventional metasurface design, machine learning-based methods have recently created an inspiring platform for an inverse realization of the metasurfaces. Here, we have used the Deep Neural Network (DNN) for the generation of desired output unit cell structures in an ultra-wide working frequency band for both TE and TM polarized waves. To automatically generate metasurfaces in a wide range of working frequencies from 4 to 45 GHz, we deliberately design an 8 ring-shaped pattern in such a way that the unit-cells generated in the dataset can produce single or multiple notches in the desired working frequency band. Compared to the general approach, whereby the final metasurface structure may be formed by any randomly distributed "0" and "1", we propose here a restricted output structure. By restricting the output, the number of calculations will be reduced and the learning speed will be increased. Moreover, we have shown that the accuracy of the network reaches 91%. Obtaining the final unit cell directly without any time-consuming optimization algorithms for both TE and TM polarized waves, and high average accuracy, promises an effective strategy for the metasurface design; thus, the designer is required only to focus on the design goal.

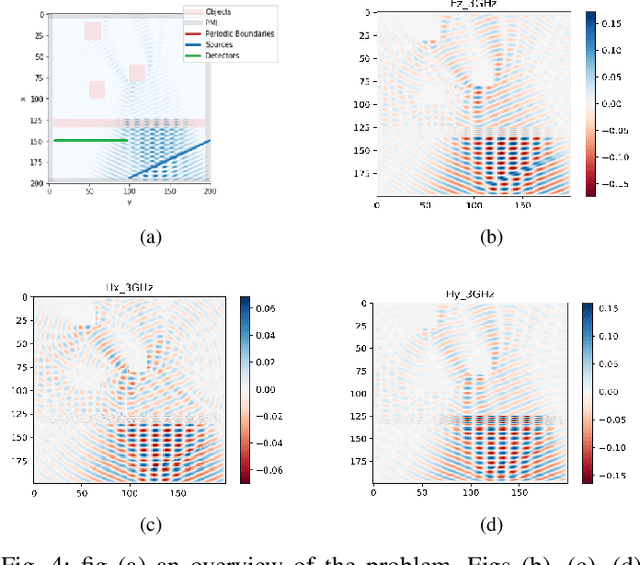

Deep Learning Approach for Target Locating in Through-the-Wall Radar under Electromagnetic Complex Wall

Feb 16, 2021

Abstract:In this paper, we used the deep learning approach to perform two-dimensional, multi-target locating in Through-the-Wall Radar under conditions where the wall is modeled as a complex electromagnetic media. We have assumed 5 models for the wall and 3 modes for the number of targets. The target modes are single, double and triple. The wall scenarios are homogeneous wall, wall with airgap, inhomogeneous wall, anisotropic wall and inhomogeneous-anisotropic wall. For this purpose, we have used the deep neural network algorithm. Using the Python FDTD library, we generated a dataset, and then modeled it with deep learning. Assuming the wall as a complex electromagnetic media, we achieved 97.7% accuracy for single-target 2D locating, and for two-targets, three-targets we achieved an accuracy of 94.1% and 62.2%, respectively.

Deep neural network-based automatic metasurface design with a wide frequency range

Jan 22, 2021

Abstract:Beyond the scope of conventional metasurface which necessitates plenty of computational resources and time, an inverse design approach using machine learning algorithms promises an effective way for metasurfaces design. In this paper, benefiting from Deep Neural Network (DNN), an inverse design procedure of a metasurface in an ultra-wide working frequency band is presented where the output unit cell structure can be directly computed by a specified design target. To reach the highest working frequency, for training the DNN, we consider 8 ring-shaped patterns to generate resonant notches at a wide range of working frequencies from 4 to 45 GHz. We propose two network architectures. In one architecture, we restricted the output of the DNN, so the network can only generate the metasurface structure from the input of 8 ring-shaped patterns. This approach drastically reduces the computational time, while keeping the network's accuracy above 91%. We show that our model based on DNN can satisfactorily generate the output metasurface structure with an average accuracy of over 90% in both network architectures. Determination of the metasurface structure directly without time-consuming optimization procedures, having an ultra-wide working frequency, and high average accuracy equip an inspiring platform for engineering projects without the need for complex electromagnetic theory.

On segmentation of pectoralis muscle in digital mammograms by means of deep learning

Aug 29, 2020

Abstract:Computer-aided diagnosis (CAD) has long become an integral part of radiological management of breast disease, facilitating a number of important clinical applications, including quantitative assessment of breast density and early detection of malignancies based on X-ray mammography. Common to such applications is the need to automatically discriminate between breast tissue and adjacent anatomy, with the latter being predominantly represented by pectoralis major (or pectoral muscle). Especially in the case of mammograms acquired in the mediolateral oblique (MLO) view, the muscle is easily confusable with some elements of breast anatomy due to their morphological and photometric similarity. As a result, the problem of automatic detection and segmentation of pectoral muscle in MLO mammograms remains a challenging task, innovative approaches to which are still required and constantly searched for. To address this problem, the present paper introduces a two-step segmentation strategy based on a combined use of data-driven prediction (deep learning) and graph-based image processing. In particular, the proposed method employs a convolutional neural network (CNN) which is designed to predict the location of breast-pectoral boundary at different levels of spatial resolution. Subsequently, the predictions are used by the second stage of the algorithm, in which the desired boundary is recovered as a solution to the shortest path problem on a specially designed graph. The proposed algorithm has been tested on three different datasets (i.e., MIAS, CBIS-DDSM and InBreast) using a range of quantitative metrics. The results of comparative analysis show considerable improvement over state-of-the-art, while offering the possibility of model-free and fully automatic processing.
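The second stage described in this abstract, recovering a boundary as a shortest path, can be sketched under simplifying assumptions. The abstract does not specify the "specially designed graph", so the example below assumes a layered grid graph: each pixel is a node, its cost is low where a (hypothetical) CNN boundary prediction is strong, and a path moves one row down per step while shifting at most one column. On such a layered graph the shortest path can be found by dynamic programming. The function name `min_cost_boundary` and the cost map are illustrative only.

```python
def min_cost_boundary(cost):
    """Find the cheapest top-to-bottom path through a 2D cost map.

    cost[r][c] is the (assumed) cost of placing the boundary at row r,
    column c, e.g. one minus a predicted boundary probability.
    Returns (columns, total) where columns[r] is the boundary column in row r.
    """
    rows, cols = len(cost), len(cost[0])
    best = [list(cost[0])] + [[0.0] * cols for _ in range(rows - 1)]
    back = [[0] * cols for _ in range(rows)]

    for r in range(1, rows):
        for c in range(cols):
            # Allowed predecessors: same column or one column to either side.
            prev_cols = [pc for pc in (c - 1, c, c + 1) if 0 <= pc < cols]
            pc = min(prev_cols, key=lambda k: best[r - 1][k])
            best[r][c] = best[r - 1][pc] + cost[r][c]
            back[r][c] = pc

    # Pick the cheapest end node, then walk the back-pointers to the top row.
    c = min(range(cols), key=lambda k: best[rows - 1][k])
    total = best[rows - 1][c]
    columns = [c]
    for r in range(rows - 1, 0, -1):
        c = back[r][c]
        columns.append(c)
    columns.reverse()
    return columns, total
```

For a general graph without this layered structure, Dijkstra's algorithm would be the usual replacement for the dynamic-programming pass.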

Palmprint image registration using convolutional neural networks and Hough transform

Apr 03, 2019

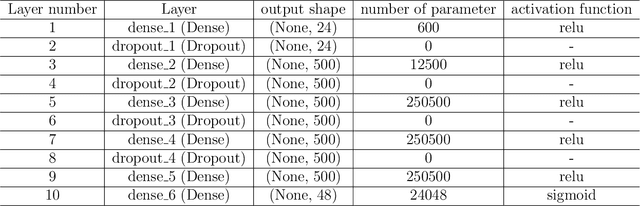

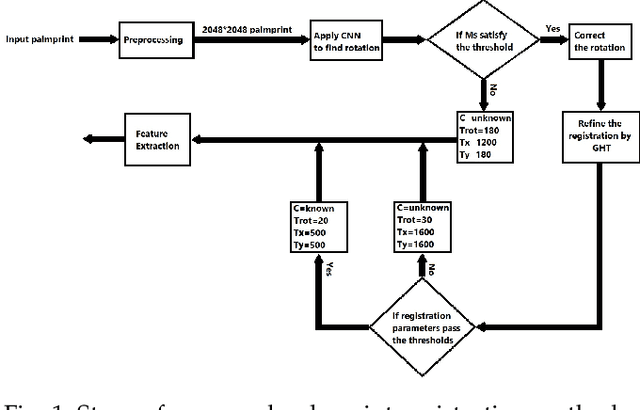

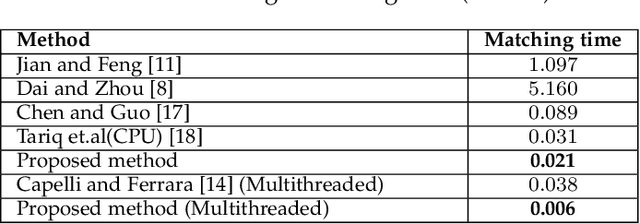

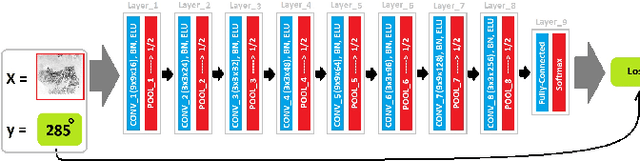

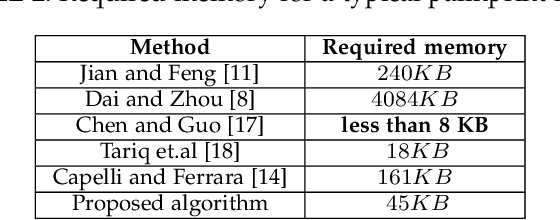

Abstract:Minutia-based palmprint recognition systems have attracted considerable interest in the last two decades. Due to the large number of minutiae in a palmprint, approximately 1000 minutiae, the matching process is time-consuming, which makes it impractical for real-time applications. One way to address this issue is aligning all palmprint images to a reference image and bringing them to the same coordinate system. Bringing all palmprint images to the same coordinate system results in fewer computations during minutia matching. In this paper, using a convolutional neural network (CNN) and the generalized Hough transform (GHT), we propose a new method to register palmprint images accurately. This method finds the corresponding rotation and displacement (in both x and y directions) between the palmprint and a reference image. Exact palmprint registration can enhance the speed and the accuracy of the matching process. The proposed method is capable of distinguishing between left and right palmprints automatically, which helps to speed up the matching process. Furthermore, the designed structure of the CNN in the registration stage gives us the palmprint image segmented from the background, which is a pre-processing step for minutia extraction. The proposed registration method followed by the minutia-cylinder code (MCC) matching algorithm has been evaluated on the THUPALMLAB database, and the results show the superiority of our algorithm over most of the state-of-the-art algorithms.
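The idea of estimating a rotation and an x/y displacement by Hough-style voting can be illustrated on plain 2D point sets. This is a simplified sketch, not the paper's CNN-plus-GHT pipeline: the function name `hough_register`, the candidate angle list, and the bin size are assumptions, and every point is allowed to vote against every reference point.

```python
import math
from collections import Counter


def hough_register(points, reference, angles_deg, bin_size=1.0):
    """Estimate the rotation and displacement that map points onto reference.

    Each (point, reference point) pair votes for the displacement that would
    align them after rotating the point by a candidate angle. The bin with
    the most votes gives the estimated transform.
    Returns (angle_deg, dx, dy, votes).
    """
    votes = Counter()
    for angle in angles_deg:
        cos_a = math.cos(math.radians(angle))
        sin_a = math.sin(math.radians(angle))
        for x, y in points:
            # Rotate the point about the origin by the candidate angle.
            rx, ry = cos_a * x - sin_a * y, sin_a * x + cos_a * y
            for u, v in reference:
                # Quantize the implied displacement into an accumulator bin.
                dx = round((u - rx) / bin_size)
                dy = round((v - ry) / bin_size)
                votes[(angle, dx, dy)] += 1

    (angle, dx, dy), count = votes.most_common(1)[0]
    return angle, dx * bin_size, dy * bin_size, count
```

The all-pairs voting costs time proportional to the product of the two set sizes for each candidate angle, so a practical system working with roughly 1000 minutiae per palmprint would need to restrict which pairs are allowed to vote.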
