Hossein Nejatbakhsh Esfahani

Quasi-Newton Iteration in Deterministic Policy Gradient

Mar 25, 2022

Abstract: This paper presents a model-free approximation of the Hessian of the performance of deterministic policies, for use in Reinforcement Learning based on Quasi-Newton steps in the policy parameters. We show that the approximate Hessian converges to the exact Hessian at the optimal policy and allows for superlinear convergence in learning, provided that the policy parametrization is rich. The natural policy gradient method can be interpreted as a particular case of the proposed method. We analytically verify the formulation in a simple linear case and compare the convergence of the proposed method with that of the natural policy gradient in a nonlinear example.
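
The contrast between a first-order step and a Newton-type step in the policy parameters can be illustrated on a toy problem. The sketch below is not the paper's model-free Hessian approximation: it assumes a hypothetical quadratic cost with a known curvature matrix `A`, and the names `gradient_step`, `newton_type_step`, `grad`, and `hess` are introduced here for illustration only.

```python
import numpy as np

def gradient_step(theta, grad, alpha):
    """Plain first-order update with a fixed step size alpha."""
    return theta - alpha * grad(theta)

def newton_type_step(theta, grad, hess_approx):
    """Newton-type update: scale the gradient by an (approximate) inverse Hessian."""
    H = hess_approx(theta)
    return theta - np.linalg.solve(H, grad(theta))

# Hypothetical quadratic cost J(theta) = 0.5 * theta^T A theta - b^T theta.
A = np.array([[3.0, 0.5], [0.5, 1.0]])  # assumed curvature (symmetric positive definite)
b = np.array([1.0, -2.0])
grad = lambda th: A @ th - b            # exact gradient of the toy cost
hess = lambda th: A                     # stand-in for a Hessian approximation
theta_star = np.linalg.solve(A, b)      # minimizer of the toy cost

# Twenty first-order steps versus a single Newton-type step from the same start.
theta_gd = np.zeros(2)
for _ in range(20):
    theta_gd = gradient_step(theta_gd, grad, 0.1)
theta_newton = newton_type_step(np.zeros(2), grad, hess)

print("gradient error:", np.linalg.norm(theta_gd - theta_star))
print("newton error:  ", np.linalg.norm(theta_newton - theta_star))
```

On a quadratic with exact curvature the Newton-type step reaches the minimizer in one iteration, whereas the fixed-step gradient iteration only contracts linearly. With an approximate Hessian, the rate depends on how well the approximation matches the true curvature near the optimum.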

Approximate Robust NMPC using Reinforcement Learning

Apr 06, 2021

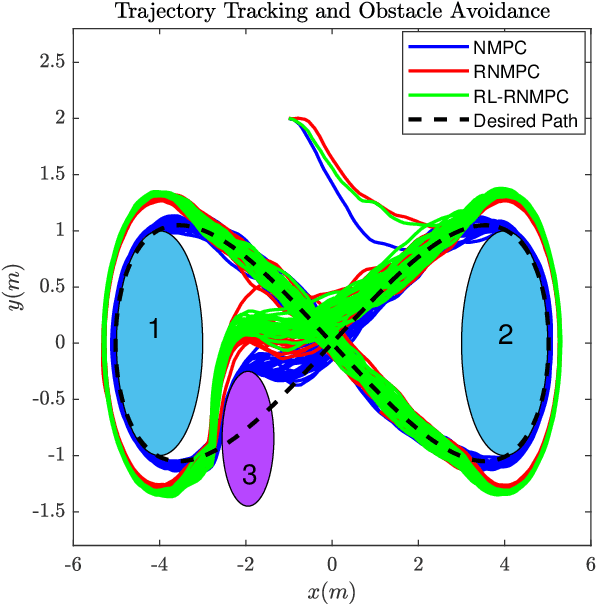

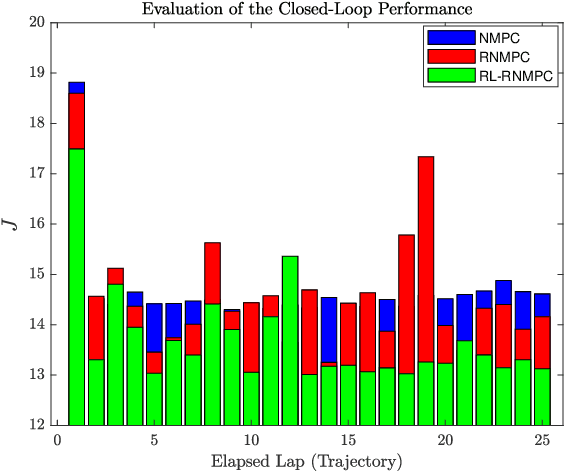

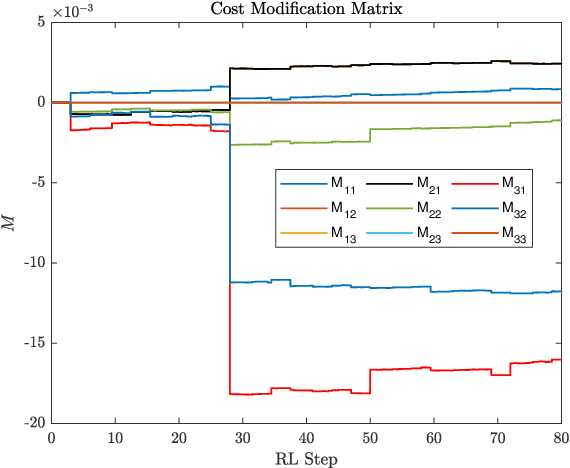

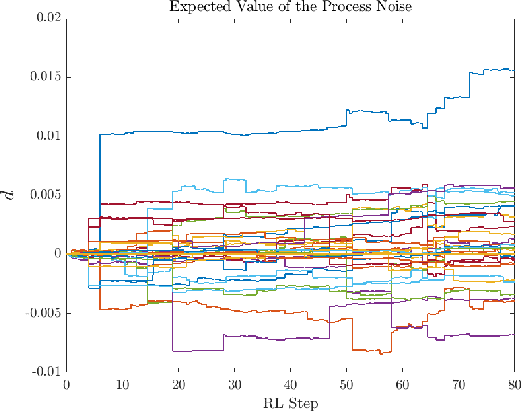

Abstract: We present a Reinforcement Learning-based Robust Nonlinear Model Predictive Control (RL-RNMPC) framework for controlling nonlinear systems in the presence of disturbances and uncertainties. An approximate Robust Nonlinear Model Predictive Control (RNMPC) scheme of low computational complexity is used, in which the state trajectory uncertainty is modelled via ellipsoids. Reinforcement Learning is then used to handle the ellipsoidal approximation and improve the closed-loop performance of the scheme by adjusting the MPC parameters that generate the ellipsoids. The approach is tested on a simulated Wheeled Mobile Robot (WMR) tracking a desired trajectory while avoiding static obstacles.
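
To make the ellipsoidal uncertainty model concrete, the following minimal sketch propagates an ellipsoid through an affine map, which is one standard building block for tube-like uncertainty descriptions. It is an assumption-laden illustration rather than the scheme from the paper: the abstract does not specify the propagation rule, and the helper names `propagate_ellipsoid` and `contains`, as well as the matrices used, are hypothetical.

```python
import numpy as np

def propagate_ellipsoid(center, shape, A, b):
    """Image of {x : (x-c)^T P^{-1} (x-c) <= 1} under x -> A x + b (A invertible)."""
    return A @ center + b, A @ shape @ A.T

def contains(center, shape, x, tol=1e-9):
    """Check whether point x lies inside the ellipsoid (center, shape)."""
    d = x - center
    return float(d @ np.linalg.solve(shape, d)) <= 1.0 + tol

# Assumed initial uncertainty set and assumed linearized dynamics.
c0 = np.zeros(2)
P0 = np.diag([4.0, 1.0])                 # semi-axes of length 2 and 1
A = np.array([[1.0, 0.1], [0.0, 1.0]])   # hypothetical state transition matrix
b = np.array([0.5, 0.0])                 # hypothetical affine offset

c1, P1 = propagate_ellipsoid(c0, P0, A, b)

x_boundary = np.array([2.0, 0.0])        # on the boundary of the initial ellipsoid
x_outside = np.array([3.0, 0.0])         # outside the initial ellipsoid
print(contains(c1, P1, A @ x_boundary + b))
print(contains(c1, P1, A @ x_outside + b))
```

Points inside the initial ellipsoid map to points inside the propagated one, so a constraint such as obstacle avoidance can be checked against the propagated set rather than against every individual trajectory.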
