Hossain Shahriar

A Trustable LSTM-Autoencoder Network for Cyberbullying Detection on Social Media Using Synthetic Data

Aug 15, 2023

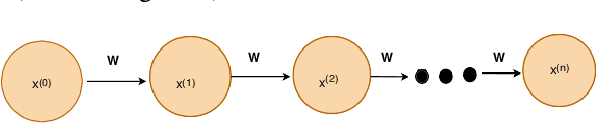

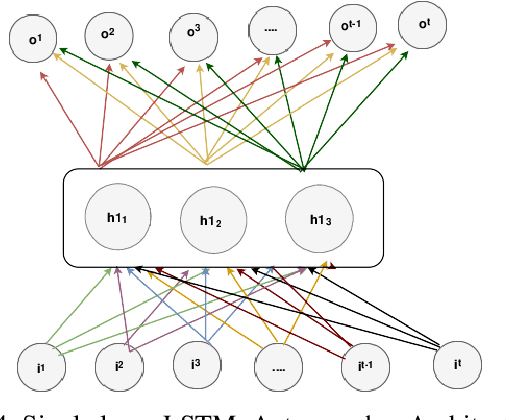

Abstract:Social media cyberbullying has a detrimental effect on human life. As online social networking grows daily, the amount of hate speech also increases. Such terrible content can cause depression and actions related to suicide. This paper proposes a trustable LSTM-Autoencoder Network for cyberbullying detection on social media using synthetic data. We have demonstrated a cutting-edge method to address data availability difficulties by producing machine-translated data. However, several languages such as Hindi and Bangla still lack adequate investigations due to a lack of datasets. We carried out experimental identification of aggressive comments on Hindi, Bangla, and English datasets using the proposed model and traditional models, including Long Short-Term Memory (LSTM), Bidirectional Long Short-Term Memory (BiLSTM), LSTM-Autoencoder, Word2vec, Bidirectional Encoder Representations from Transformers (BERT), and Generative Pre-trained Transformer 2 (GPT-2) models. We employed evaluation metrics such as F1-score, accuracy, precision, and recall to assess the models' performance. Our proposed model outperformed all the other models on all datasets, achieving the highest accuracy of 95%. Our model achieves state-of-the-art results among all previous works on the dataset we used in this paper.
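
The four evaluation metrics named in this abstract can be illustrated with a minimal sketch for a binary "aggressive vs. not aggressive" task. The function name `binary_metrics` and the label encoding (1 for the positive class) are assumptions made for illustration; the abstract does not say how the authors computed their scores.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall, and F1 for one positive class.

    Hypothetical helper for illustration; not the authors' evaluation code.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Toy labels: 1 = aggressive comment, 0 = non-aggressive comment.
    y_true = [1, 1, 1, 0, 0, 0, 1, 0]
    y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
    print(binary_metrics(y_true, y_pred))
```

Reporting all four values together matters on imbalanced comment datasets, where accuracy alone can look high even when few aggressive comments are caught.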

BlockTheFall: Wearable Device-based Fall Detection Framework Powered by Machine Learning and Blockchain for Elderly Care

Jun 10, 2023

Abstract:Falls among the elderly are a major health concern, frequently resulting in serious injuries and a reduced quality of life. In this paper, we propose "BlockTheFall," a wearable device-based fall detection framework which detects falls in real time by using sensor data from wearable devices. To accurately identify patterns and detect falls, the collected sensor data is analyzed using machine learning algorithms. To ensure data integrity and security, the framework stores and verifies fall event data using blockchain technology. The proposed framework aims to provide an efficient and dependable solution for fall detection with improved emergency response, and elderly individuals' overall well-being. Further experiments and evaluations are being carried out to validate the effectiveness and feasibility of the proposed framework, which has shown promising results in distinguishing genuine falls from simulated falls. By providing timely and accurate fall detection and response, this framework has the potential to substantially boost the quality of elderly care.

Autism Disease Detection Using Transfer Learning Techniques: Performance Comparison Between Central Processing Unit vs Graphics Processing Unit Functions for Neural Networks

Jun 01, 2023

Abstract:Neural network approaches are machine learning methods that are widely used in various domains, such as healthcare and cybersecurity. Neural networks are especially renowned for their ability to deal with image datasets. During the training process with images, various fundamental mathematical operations are performed in the neural network. These operations include several algebraic and mathematical functions, such as derivatives, convolutions, and matrix inversions and transpositions. Such operations demand higher processing power than what is typically required for regular computer usage. Since CPUs are built with serial processing, they are not appropriate for handling large image datasets. On the other hand, GPUs have parallel processing capabilities and can provide higher speed. This paper utilizes advanced neural network techniques, such as VGG16, Resnet50, Densenet, Inceptionv3, Xception, Mobilenet, XGBOOST VGG16, and our proposed models, to compare CPU and GPU resources. We implemented a system for classifying Autism disease using face images of autistic and non-autistic children to compare performance during testing. We used evaluation metrics such as Accuracy, F1 score, Precision, Recall, and Execution time. It was observed that GPU outperformed CPU in all tests conducted. Moreover, the performance of the neural network models in terms of accuracy increased on GPU compared to CPU.

Feature Engineering-Based Detection of Buffer Overflow Vulnerability in Source Code Using Neural Networks

Jun 01, 2023

Abstract:One of the most significant challenges in the field of software code auditing is the presence of vulnerabilities in software source code. Every year, more and more software flaws are discovered, either internally in proprietary code or publicly disclosed. These flaws are highly likely to be exploited and can lead to system compromise, data leakage, or denial of service. To create a large-scale machine learning system for function-level vulnerability identification, we utilized a sizable dataset of C and C++ open-source code containing millions of functions with potential buffer overflow exploits. We have developed an efficient and scalable vulnerability detection method based on neural network models that learn features extracted from the source code. The source code is first converted into an intermediate representation to remove unnecessary components and shorten dependencies. We maintain the semantic and syntactic information using state-of-the-art word embedding algorithms such as GloVe and fastText. The embedded vectors are subsequently fed into neural networks such as LSTM, BiLSTM, LSTM Autoencoder, word2vec, BERT, and GPT2 to classify the possible vulnerabilities. Furthermore, we have proposed a neural network model that can overcome issues associated with traditional neural networks. We have used evaluation metrics such as F1 score, precision, recall, accuracy, and total execution time to measure the performance. We have conducted a comparative analysis between results derived from features containing a minimal text representation and semantic and syntactic information.

Case Study-Based Approach of Quantum Machine Learning in Cybersecurity: Quantum Support Vector Machine for Malware Classification and Protection

Jun 01, 2023

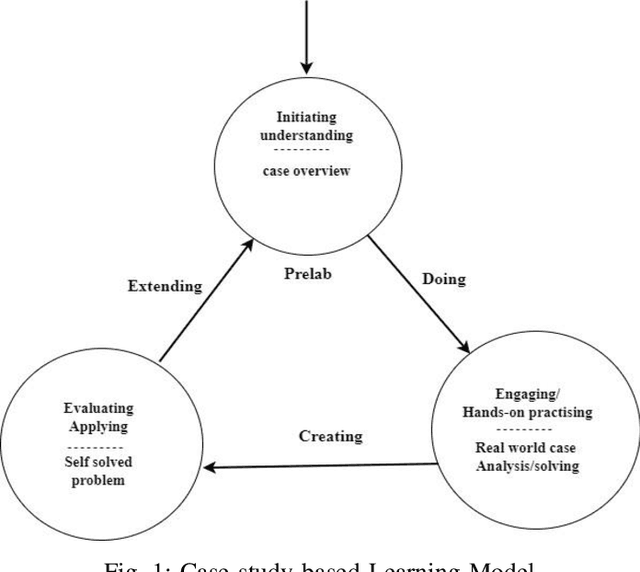

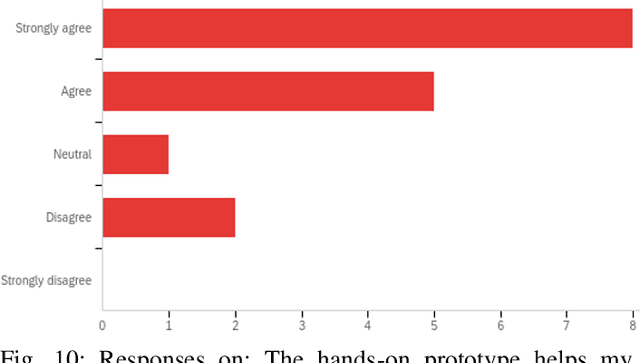

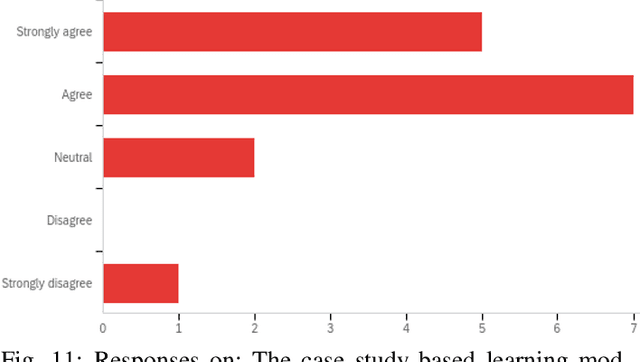

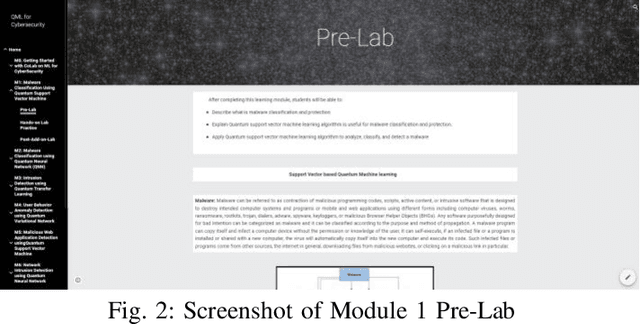

Abstract:Quantum machine learning (QML) is an emerging field of research that leverages quantum computing to improve the classical machine learning approach to solving complex real-world problems. QML has the potential to address cybersecurity-related challenges. Considering the novelty and complex architecture of QML, resources that can help cybersecurity learners build effective knowledge of this emerging technology are not yet readily available. In this research, we design and develop ten QML-based learning modules covering various cybersecurity topics by adopting a student-centered, case-study-based learning approach. Each module applies one subtopic of QML to a cybersecurity topic and comprises pre-lab, lab, and post-lab activities that provide learners with hands-on QML experience in solving real-world security problems. In order to engage and motivate students in a learning environment that encourages all students to learn, the pre-lab offers a brief introduction to both the QML subtopic and the cybersecurity problem. In this paper, we utilize a quantum support vector machine (QSVM) for malware classification and protection, where we use the open-source Pennylane QML framework on the drebin215 dataset. We demonstrate our QSVM model and achieve an accuracy of 95% in malware classification and protection. We will develop all the modules and introduce them to the cybersecurity community in the coming days.

Exploring the Vulnerabilities of Machine Learning and Quantum Machine Learning to Adversarial Attacks using a Malware Dataset: A Comparative Analysis

May 31, 2023

Abstract:The burgeoning fields of machine learning (ML) and quantum machine learning (QML) have shown remarkable potential in tackling complex problems across various domains. However, their susceptibility to adversarial attacks raises concerns when deploying these systems in security-sensitive applications. In this study, we present a comparative analysis of the vulnerability of ML and QML models, specifically conventional neural networks (NN) and quantum neural networks (QNN), to adversarial attacks using a malware dataset. We utilize a software supply chain attack dataset known as ClaMP and develop two distinct models for QNN and NN, employing Pennylane for quantum implementations and TensorFlow and Keras for traditional implementations. Our methodology involves crafting adversarial samples by introducing random noise to a small portion of the dataset and evaluating the impact on the models' performance using accuracy, precision, recall, and F1 score metrics. Based on our observations, both ML and QML models exhibit vulnerability to adversarial attacks. While the QNN's accuracy decreases more significantly compared to the NN after the attack, it demonstrates better performance in terms of precision and recall, indicating higher resilience in detecting true positives under adversarial conditions. We also find that adversarial samples crafted for one model type can impair the performance of the other, highlighting the need for robust defense mechanisms. Our study serves as a foundation for future research focused on enhancing the security and resilience of ML and QML models, particularly QNN, given its recent advancements. A more extensive range of experiments will be conducted to better understand the performance and robustness of both models in the face of adversarial attacks.
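
The perturbation step described in this abstract (random noise added to a small portion of the dataset) can be sketched as follows. This is only an illustration under stated assumptions: the function name `add_random_noise`, the Gaussian noise model, and the `fraction`, `scale`, and `seed` parameters are hypothetical choices, since the abstract does not specify the noise distribution or how many samples were perturbed.

```python
import random


def add_random_noise(samples, fraction=0.1, scale=0.05, seed=0):
    """Perturb a random subset of feature rows with Gaussian noise.

    Hypothetical helper: `fraction` is the share of rows to perturb and
    `scale` is the noise standard deviation; both are assumed values.
    Returns the new rows and the sorted indices of the perturbed rows.
    """
    rng = random.Random(seed)  # local generator keeps runs reproducible
    n_perturb = max(1, round(fraction * len(samples))) if samples else 0
    chosen = set(rng.sample(range(len(samples)), n_perturb))

    noisy = []
    for i, row in enumerate(samples):
        if i in chosen:
            noisy.append([x + rng.gauss(0.0, scale) for x in row])
        else:
            noisy.append(list(row))  # copy so the input stays untouched
    return noisy, sorted(chosen)


if __name__ == "__main__":
    clean = [[0.0, 1.0] for _ in range(20)]
    noisy, chosen = add_random_noise(clean, fraction=0.1)
    print("perturbed rows:", chosen)
```

Evaluating a trained model on `noisy` and on the clean rows, then comparing accuracy, precision, recall, and F1, gives the kind of before-and-after comparison the abstract reports.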

Software Supply Chain Vulnerabilities Detection in Source Code: Performance Comparison between Traditional and Quantum Machine Learning Algorithms

May 31, 2023

Abstract:Software supply chain (SSC) attacks have become a crucial issue that is growing rapidly with the advancement of the software development domain. In general, SSC attacks executed during the software development process lead to vulnerabilities in software products, targeting downstream customers and even the stakeholders involved. Machine learning approaches are proven in detecting and preventing software security vulnerabilities. Besides, emerging quantum machine learning can be promising in addressing SSC attacks. Considering the distinction between traditional and quantum machine learning, performance could vary based on the proportions of the experimental dataset. In this paper, we conduct a comparative analysis between quantum neural networks (QNN) and conventional neural networks (NN) with a software supply chain attack dataset known as ClaMP. Our goal is to distinguish the performance of QNN and NN. To conduct the experiment, we develop two different models for QNN and NN by utilizing Pennylane for the quantum model and TensorFlow and Keras for the traditional model. We evaluated the performance of both models with different proportions of the ClaMP dataset to identify the F1 score, recall, precision, and accuracy. We also measure the execution time to check the efficiency of both models. The results indicate that QNN executes more slowly than NN when a higher percentage of the dataset is used. Due to recent advancements in QNN, a larger set of experiments will be carried out in our future research to understand both models accurately.

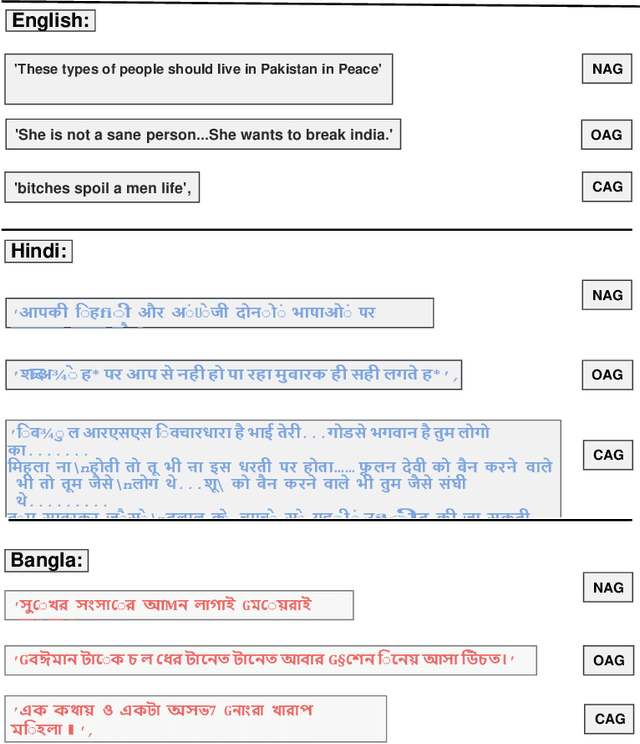

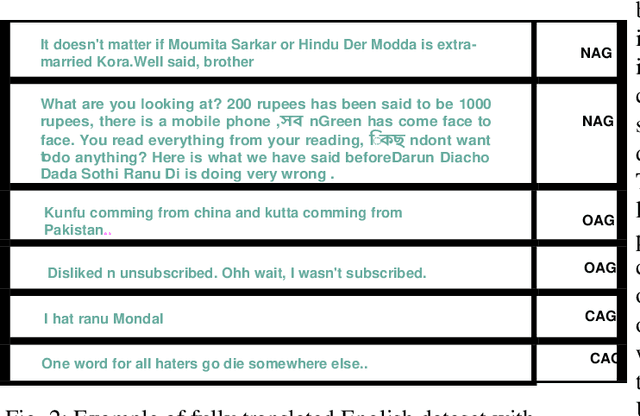

Deep Learning Approach for Classifying the Aggressive Comments on Social Media: Machine Translated Data Vs Real Life Data

Mar 13, 2023

Abstract:Aggressive comments on social media negatively impact human life. Such offensive content is responsible for depression and suicide-related activities. Since online social networking is increasing day by day, hate content is also increasing. Several investigations have been done in the domain of cyberbullying, cyberaggression, hate speech, etc. The majority of this inquiry has been done in the English language. Some languages (Hindi and Bangla) still lack proper investigations due to the lack of a dataset. This paper works on Hindi, Bangla, and English datasets to detect aggressive comments and shows a novel way of generating machine-translated data to resolve data unavailability issues. A fully machine-translated English dataset has been analyzed with models such as the Long Short-Term Memory model (LSTM), Bidirectional Long Short-Term Memory model (BiLSTM), LSTM-Autoencoder, word2vec, Bidirectional Encoder Representations from Transformers (BERT), and Generative Pre-trained Transformer (GPT-2) to observe how the models perform on a machine-translated noisy dataset. We have compared the performance on the noisy data with two more datasets: raw data, which does not contain any noise, and semi-noisy data, which contains a certain amount of noisy data. We have classified both the raw and semi-noisy data using the aforementioned models. To evaluate the performance of the models, we have used evaluation metrics such as F1-score, accuracy, precision, and recall. We have achieved the highest accuracy on raw data using the GPT-2 model, on semi-noisy data using the BERT model, and on fully machine-translated data using the BERT model. Since many languages do not have proper data availability, our approach will help researchers create machine-translated datasets for several analysis purposes.

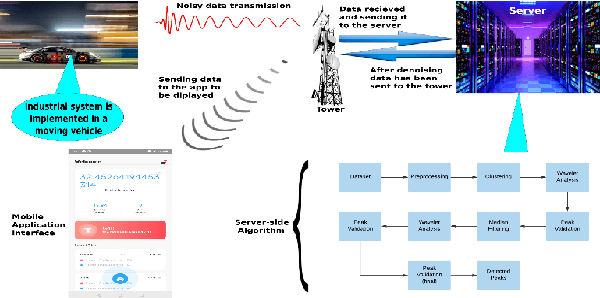

Towards Unsupervised Learning based Denoising of Cyber Physical System Data to Mitigate Security Concerns

Mar 13, 2023

Abstract:A dataset collected in an industrial setting often contains a significant amount of noise. In many cases, using trivial filters is not enough to retrieve useful information, i.e., the accurate value without the noise. One such dataset is time-series sensor readings collected from moving vehicles containing fuel information. Due to the noisy dynamics and mobile environment, the sensor readings can be very noisy. Denoising such a dataset is a prerequisite for any useful application and for addressing security issues. Security is a primary concern in present vehicular schemes. The server side for retrieving the fuel information can be easily hacked. Providing accurate and noise-free fuel information via vehicular networks becomes crucial. Therefore, it has led us to develop a system that can remove noise and keep the original value. The system is also helpful for the vehicle industry, fuel stations, and power plants that require fuel. In this work, we have only considered the value of the fuel level, and we have come up with a unique solution to filter out noise of high magnitude using several algorithms such as interpolation, extrapolation, spectral clustering, agglomerative clustering, wavelet analysis, and median filtering. We have also employed peak detection and peak validation algorithms to detect fuel refill and consumption in charge-discharge cycles. We have used the R-squared metric to evaluate our model, and it is 98 percent. In most cases, the difference between the detected value and the real value remains within the range of 1L.
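
Two of the building blocks named in this abstract, median filtering and the R-squared metric, can be sketched in a few lines. This is a minimal illustration and not the authors' pipeline: the function names, the window size, and the toy fuel readings are assumptions, and the other listed steps (clustering, wavelet analysis, peak detection) are not shown.

```python
from statistics import mean, median


def median_filter(values, window=5):
    """Replace each reading with the median of its neighbourhood.

    `window` must be odd; near the edges the window is truncated.
    Hypothetical helper for illustration only.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    filtered = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        filtered.append(median(values[lo:hi]))
    return filtered


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mu = mean(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mu) ** 2 for a in actual)
    if ss_tot == 0:
        raise ValueError("R-squared is undefined for constant actual values")
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Toy fuel-level readings in litres with one high-magnitude spike.
    noisy = [50, 50, 49, 90, 49, 48, 48]
    reference = [50, 50, 49, 49, 49, 48, 48]
    smoothed = median_filter(noisy, window=3)
    print(smoothed, r_squared(reference, smoothed))
```

A median filter suits isolated spikes like the one above because a single outlier never becomes the median of its window, whereas a moving average would smear it across neighbouring readings.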

Multi-class Skin Cancer Classification Architecture Based on Deep Convolutional Neural Network

Mar 13, 2023

Abstract:Skin cancer detection is challenging since different types of skin lesions share high similarities. This paper proposes a computer-based deep learning approach that will accurately identify different kinds of skin lesions. Deep learning approaches can detect skin cancer very accurately since the models learn each pixel of an image. Sometimes humans can get confused by the similarities of the skin lesions, which we can minimize by involving the machine. However, not all deep learning approaches can give better predictions. Some deep learning models have limitations, leading the model to a false-positive result. We have introduced several deep learning models to classify skin lesions to distinguish skin cancer from different types of skin lesions. Before classifying the skin lesions, data preprocessing and data augmentation methods are used. Finally, a Convolutional Neural Network (CNN) model and six transfer learning models such as Resnet-50, VGG-16, Densenet, Mobilenet, Inceptionv3, and Xception are applied to the publicly available benchmark HAM10000 dataset to classify seven classes of skin lesions and to conduct a comparative analysis. The models will detect skin cancer by differentiating the cancerous cells from the non-cancerous ones. The models' performance is measured using performance metrics such as precision, recall, F1 score, and accuracy. We receive accuracy of 90, 88, 88, 87, 82, and 77 percent for inceptionv3, Xception, Densenet, Mobilenet, Resnet, CNN, and VGG16, respectively. Furthermore, we develop five different stacking models such as inceptionv3-inceptionv3, Densenet-mobilenet, inceptionv3-Xception, Resnet50-Vgg16, and stack-six for classifying the skin lesions and find that the stacking models perform poorly. We achieve the highest accuracy of 78 percent among all the stacking models.
