Horace Ho Shing Ip

SEFE: Superficial and Essential Forgetting Eliminator for Multimodal Continual Instruction Tuning

May 05, 2025

Abstract: Multimodal Continual Instruction Tuning (MCIT) aims to enable Multimodal Large Language Models (MLLMs) to incrementally learn new tasks without catastrophic forgetting. In this paper, we explore forgetting in this context, categorizing it into superficial forgetting and essential forgetting. Superficial forgetting refers to cases where the model's knowledge may not be genuinely lost, but its responses to previous tasks deviate from expected formats due to the influence of subsequent tasks' answer styles, making the results unusable. By contrast, essential forgetting refers to situations where the model provides correctly formatted but factually inaccurate answers, indicating a true loss of knowledge. Assessing essential forgetting necessitates addressing superficial forgetting first, as severe superficial forgetting can obscure the model's knowledge state. Hence, we first introduce the Answer Style Diversification (ASD) paradigm, which defines a standardized process for transforming data styles across different tasks, unifying their training sets into similarly diversified styles to prevent superficial forgetting caused by style shifts. Building on this, we propose RegLoRA to mitigate essential forgetting. RegLoRA stabilizes key parameters where prior knowledge is primarily stored by applying regularization, enabling the model to retain existing competencies. Experimental results demonstrate that our overall method, SEFE, achieves state-of-the-art performance.
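The idea of regularizing "key parameters" can be illustrated with a small sketch. The abstract does not say how key parameters are identified or which regularizer is applied, so everything below is an assumption made for illustration: the hypothetical `key_mask` marks the largest-magnitude entries of a previous task's weight update as "key", and the hypothetical `reg_penalty` adds a squared-L2 penalty on the new update at those positions only. It is not the authors' implementation.

```python
def key_mask(prev_update, keep_fraction):
    """Mark the largest-magnitude entries of a previous weight update as 'key'.

    Assumption: magnitude is used as a proxy for where prior knowledge is stored.
    """
    flat = sorted((abs(v) for row in prev_update for v in row), reverse=True)
    k = max(1, int(len(flat) * keep_fraction))
    threshold = flat[k - 1]
    return [[abs(v) >= threshold for v in row] for row in prev_update]


def reg_penalty(new_update, mask):
    """Squared-L2 penalty on the new update, restricted to masked positions.

    Assumption: penalizing change at key positions is what keeps them stable.
    """
    return sum(
        v * v
        for row, mask_row in zip(new_update, mask)
        for v, is_key in zip(row, mask_row)
        if is_key
    )


# Toy 2x2 example: the update learned on an earlier task and a candidate new update.
prev_update = [[0.9, 0.1], [-0.8, 0.05]]
new_update = [[0.5, 2.0], [0.0, 3.0]]

mask = key_mask(prev_update, keep_fraction=0.5)
penalty = reg_penalty(new_update, mask)
```

In this toy case only the first column is marked as key, so the large values in the second column of `new_update` are not penalized and remain free to adapt to the new task.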

Strike a Balance in Continual Panoptic Segmentation

Jul 23, 2024

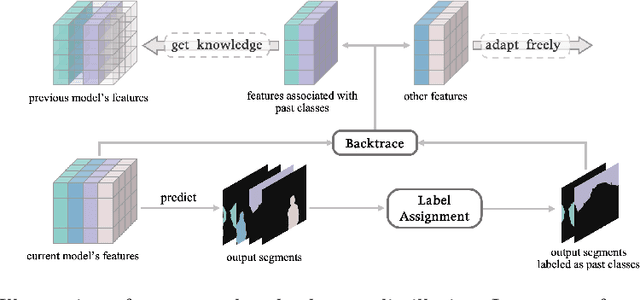

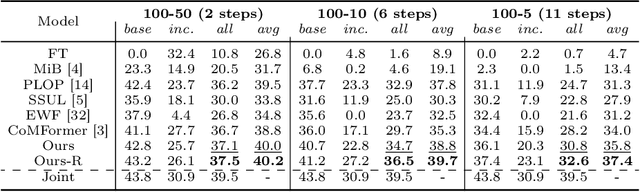

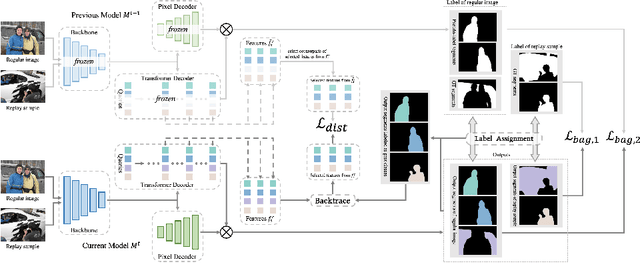

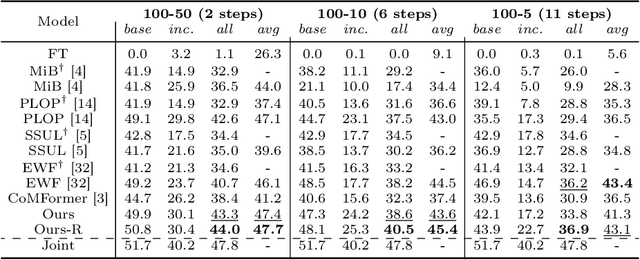

Abstract: This study explores the emerging area of continual panoptic segmentation, highlighting three key balances. First, we introduce past-class backtrace distillation to balance the stability of existing knowledge with the adaptability to new information. This technique retraces the features associated with past classes based on the final label assignment results, performing knowledge distillation targeting these specific features from the previous model while allowing other features to flexibly adapt to new information. Additionally, we introduce a class-proportional memory strategy, which aligns the class distribution in the replay sample set with that of the historical training data. This strategy maintains a balanced class representation during replay, enhancing the utility of the limited-capacity replay sample set in recalling prior classes. Moreover, recognizing that replay samples are annotated only for the classes of their original step, we devise balanced anti-misguidance losses, which combat the impact of incomplete annotations without incurring classification bias. Building upon these innovations, we present a new method named Balanced Continual Panoptic Segmentation (BalConpas). Our evaluation on the challenging ADE20K dataset demonstrates its superior performance compared to existing state-of-the-art methods. The official code is available at https://github.com/jinpeng0528/BalConpas.
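The class-proportional memory idea can be sketched as a quota computation. This is a simplified illustration under stated assumptions, not the BalConpas implementation: it assumes per-class counts from the historical training data are available, treats each replay slot as belonging to one class (real panoptic images contain several classes), and uses largest-remainder rounding. The function name `proportional_quotas` and the class names are hypothetical.

```python
def proportional_quotas(class_counts, memory_size):
    """Split a replay budget across classes in proportion to historical counts.

    Assumption: largest-remainder rounding is used so quotas sum to memory_size.
    """
    total = sum(class_counts.values())
    if total == 0 or memory_size <= 0:
        return {c: 0 for c in class_counts}

    # Ideal (fractional) share of the memory for each class.
    exact = {c: memory_size * n / total for c, n in class_counts.items()}
    # Start from the integer part of each share.
    quotas = {c: int(x) for c, x in exact.items()}

    # Hand the remaining slots to the classes with the largest fractional parts.
    leftover = memory_size - sum(quotas.values())
    by_remainder = sorted(
        class_counts, key=lambda c: exact[c] - quotas[c], reverse=True
    )
    for c in by_remainder[:leftover]:
        quotas[c] += 1
    return quotas


# Hypothetical historical class frequencies and a replay budget of 10 samples.
history = {"wall": 600, "car": 300, "lamp": 100}
quotas = proportional_quotas(history, memory_size=10)
```

With these counts the replay set mirrors the 6:3:1 ratio of the historical data, so frequent classes are not under-represented and rare classes still receive a slot.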
