Hongxing Gao

Condensation-Net: Memory-Efficient Network Architecture with Cross-Channel Pooling Layers and Virtual Feature Maps

Apr 29, 2021

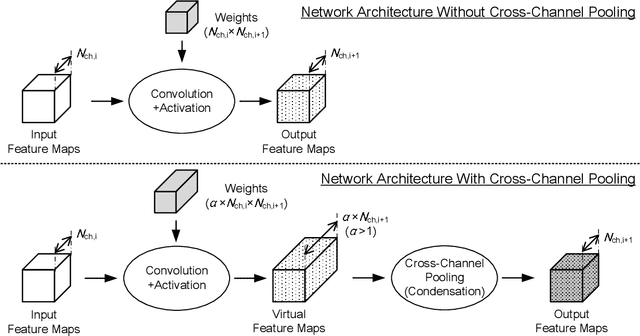

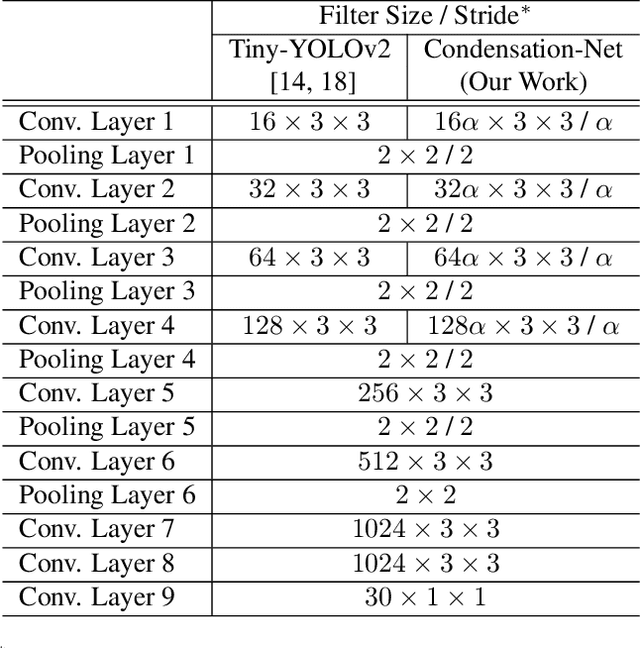

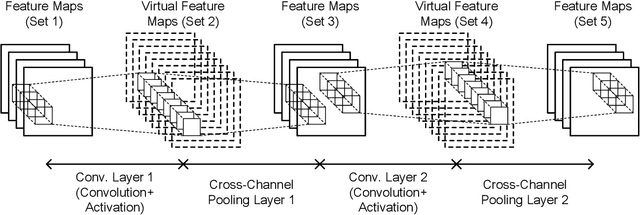

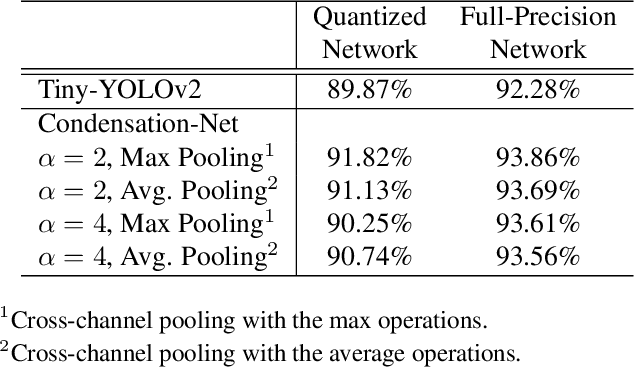

Abstract: "Lightweight convolutional neural networks" is an important research topic in the field of embedded vision. To implement image recognition tasks on a resource-limited hardware platform, it is necessary to reduce the memory size and the computational cost. The contributions of this paper are as follows. First, we propose an algorithm to process a specific network architecture (Condensation-Net) without increasing the maximum memory storage for feature maps. The architecture for virtual feature maps saves 26.5% of memory bandwidth by calculating the results of cross-channel pooling before storing the feature map into memory. Second, we show that cross-channel pooling can improve the accuracy of object detection tasks, such as face detection, because it increases the number of filter weights. Compared with Tiny-YOLOv2, the accuracy improvement is 2.0% for quantized networks and 1.5% for full-precision networks when the false-positive rate is 0.1. Last but not least, the analysis results show that the overhead of supporting cross-channel pooling with the proposed hardware architecture is negligibly small. The extra memory cost to support Condensation-Net is 0.2% of the total size, and the extra gate count is only 1.0% of the total size.

* Camera-ready version for CVPR 2019 workshop (Embedded Vision Workshop)
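The cross-channel pooling mentioned in the Condensation-Net abstract can be illustrated with a minimal sketch. It assumes max pooling over consecutive groups of channels and a group size of 2; both are illustrative choices, and the function name `cross_channel_max_pool` is hypothetical rather than taken from the paper. The point is that only the pooled result has to be written to memory, so the wider pre-pooling feature map can stay "virtual".

```python
def cross_channel_max_pool(feature_map, group_size):
    """Take the max over consecutive groups of channels at every pixel.

    feature_map: list of channels, each channel a list of rows of numbers.
    Assumption: max is used as the pooling operator (illustrative only).
    """
    num_channels = len(feature_map)
    if num_channels % group_size != 0:
        raise ValueError("channel count must be divisible by group_size")

    pooled = []
    for start in range(0, num_channels, group_size):
        group = feature_map[start:start + group_size]
        height, width = len(group[0]), len(group[0][0])
        # One output channel per group: pixel-wise max across the group.
        pooled.append([
            [max(channel[y][x] for channel in group) for x in range(width)]
            for y in range(height)
        ])
    return pooled


# Four 2x2 channels are condensed into two 2x2 channels.
fmap = [
    [[1, 5], [0, 2]],
    [[4, 3], [7, 1]],
    [[9, 0], [2, 2]],
    [[6, 8], [1, 3]],
]
pooled = cross_channel_max_pool(fmap, group_size=2)
print(pooled)  # [[[4, 5], [7, 2]], [[9, 8], [2, 3]]]
```

In this toy case the convolution produces 16 values, but only 8 need to be stored once pooling is applied before the write.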

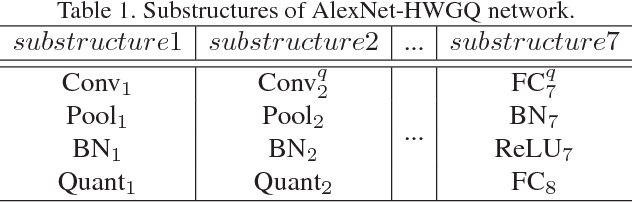

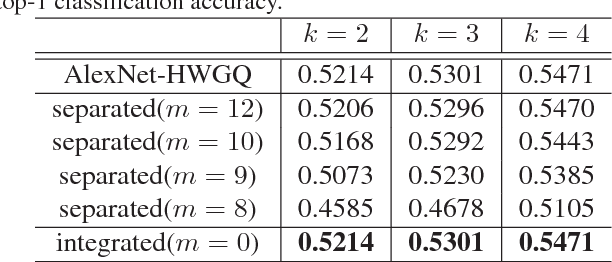

IFQ-Net: Integrated Fixed-point Quantization Networks for Embedded Vision

Nov 19, 2019

Abstract: Deploying deep models on embedded devices has been a challenging problem since the great success of deep learning based networks. Fixed-point networks, which represent their data with low-bit fixed-point values and thus give remarkable savings in memory usage, are generally preferred. Even though current fixed-point networks employ relatively low bit widths (e.g. 8 bits), the memory saving is far from enough for embedded devices. On the other hand, quantization deep networks, for example XNOR-Net and HWGQNet, quantize the data into 1 or 2 bits, resulting in more significant memory savings, but still contain a lot of floating-point data. In this paper, we propose a fixed-point network for embedded vision tasks by converting the floating-point data in a quantization network into fixed-point. Furthermore, to overcome the data loss caused by the conversion, we propose to compose floating-point data operations across multiple layers (e.g. convolution, batch normalization and quantization layers) and convert them into fixed-point. We name the fixed-point network obtained through such integrated conversion Integrated Fixed-point Quantization Networks (IFQ-Net). We demonstrate that our IFQ-Net gives 2.16x and 18x more savings in model size and runtime feature map memory, respectively, with similar accuracy on ImageNet. Furthermore, based on YOLOv2, we design the IFQ-Tinier-YOLO face detector, a fixed-point network whose model size (246k Bytes) is 256x smaller than that of Tiny-YOLO. We illustrate the promising performance of our face detector in terms of detection rate on the Face Detection Data Set and Benchmark (FDDB) and qualitative results of detecting small faces on the Wider Face dataset.

* 9 pages, 6 figures
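To make the idea of composing floating-point operations across layers more concrete, here is a minimal sketch of one well-known special case: batch normalization followed by 1-bit sign quantization, applied to an integer convolution output, collapses into a single integer threshold comparison. This is an illustration under stated assumptions (1-bit activations, nonzero `gamma`, positive `std`), not the paper's exact derivation, and all function names are hypothetical.

```python
import math


def bn_then_sign(x, gamma, beta, mean, std):
    """Reference floating-point path: batch norm, then 1-bit sign quantization."""
    y = gamma * (x - mean) / std + beta
    return 1 if y >= 0 else -1


def fuse_to_integer_threshold(gamma, beta, mean, std):
    """Fold batch norm + sign into one integer comparison.

    Assumes gamma != 0 and std > 0. Returns (threshold, flipped).
    """
    t = mean - beta * std / gamma  # real-valued point where y crosses zero
    if gamma > 0:
        # y >= 0  <=>  x >= t  <=>  x >= ceil(t) for integer x
        return math.ceil(t), False
    # gamma < 0 reverses the inequality: y >= 0  <=>  x <= floor(t)
    return math.floor(t), True


def fused_sign(x, threshold, flipped):
    """Integer-only replacement for bn_then_sign."""
    if flipped:
        return 1 if x <= threshold else -1
    return 1 if x >= threshold else -1


# Compare both paths on integer inputs, for positive and negative gamma.
for gamma in (0.5, -0.5):
    params = dict(gamma=gamma, beta=0.3, mean=2.2, std=1.5)
    threshold, flipped = fuse_to_integer_threshold(**params)
    for x in range(-10, 11):
        assert fused_sign(x, threshold, flipped) == bn_then_sign(x, **params)
```

After the fold, inference needs only one stored integer and one flag per channel instead of four floating-point parameters. The two paths can disagree through rounding only when the zero crossing lies extremely close to an integer.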

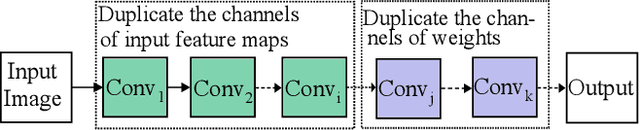

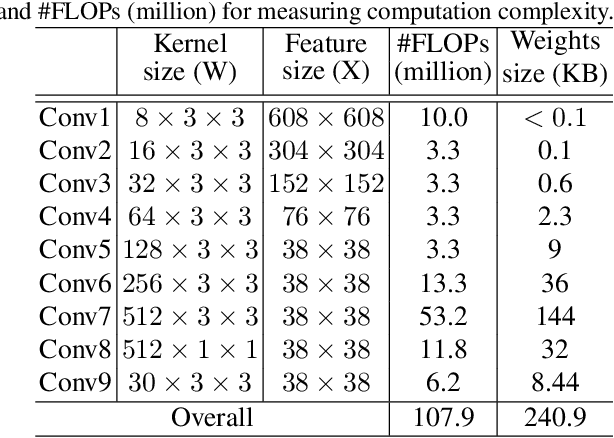

DupNet: Towards Very Tiny Quantized CNN with Improved Accuracy for Face Detection

Nov 13, 2019

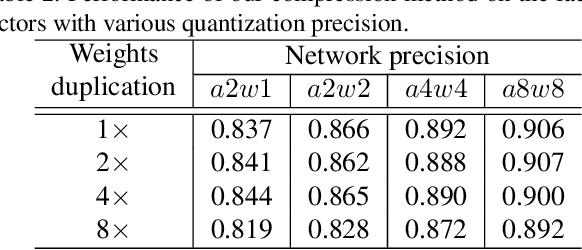

Abstract: Deploying deep learning based face detectors on edge devices is a challenging task due to the limited computation resources. Even though binarizing the weights of a very tiny network gives impressive compactness in model size (e.g. 240.9 KB for IFQ-Tinier-YOLO), it is not tiny enough to fit in embedded devices with strict memory constraints. In this paper, we propose DupNet, which consists of two parts. Firstly, we employ weights with duplicated channels for the weight-intensive layers to reduce the model size. Secondly, for the quantization-sensitive layers whose quantization causes a notable accuracy drop, we duplicate their input feature maps. This allows us to use more weight channels to compute more representative outputs. Based on that, we propose a very tiny face detector, DupNet-Tinier-YOLO, which is 6.5X smaller in model size and 42.0% less complex in computation while achieving a 2.4% higher detection rate than IFQ-Tinier-YOLO. Compared with the full-precision Tiny-YOLO, our DupNet-Tinier-YOLO gives 1,694.2X and 389.9X savings in model size and computational complexity, respectively, with only a 4.0% drop in detection rate (0.880 vs. 0.920). Moreover, our DupNet-Tinier-YOLO is only 36.9 KB, which is the tiniest deep face detector to the best of our knowledge.
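The storage effect of duplicated weight channels can be sketched with simple arithmetic. The example below assumes 1-bit weights and duplication along the input-channel axis with a hypothetical factor `dup_factor`; the abstract does not specify these details, so the layer shape and both function names are illustrative only.

```python
def expand_duplicated_channels(stored_channels, dup_factor):
    """Rebuild the full channel list by repeating the stored channels.

    Assumption: the full weight is the stored block tiled dup_factor times.
    """
    return [channel for _ in range(dup_factor) for channel in stored_channels]


def binary_weight_bytes(out_ch, in_ch, kernel, dup_factor=1):
    """Bytes needed for 1-bit weights when only in_ch / dup_factor
    input channels are actually stored."""
    if in_ch % dup_factor != 0:
        raise ValueError("in_ch must be divisible by dup_factor")
    stored_in_ch = in_ch // dup_factor
    bits = out_ch * stored_in_ch * kernel * kernel
    return (bits + 7) // 8  # round up to whole bytes


# A hypothetical weight-intensive 3x3 layer with 512 input and output channels.
full = binary_weight_bytes(512, 512, 3)
shared = binary_weight_bytes(512, 512, 3, dup_factor=4)
print(full, shared)  # 294912 73728

# Two stored channels expanded to four at inference time.
stored = [[1, -1], [-1, 1]]
print(expand_duplicated_channels(stored, 2))
# [[1, -1], [-1, 1], [1, -1], [-1, 1]]
```

Under these assumptions, storage for the layer shrinks by the duplication factor while the layer still consumes the same number of input channels.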

Knowledge Representing: Efficient, Sparse Representation of Prior Knowledge for Knowledge Distillation

Nov 13, 2019

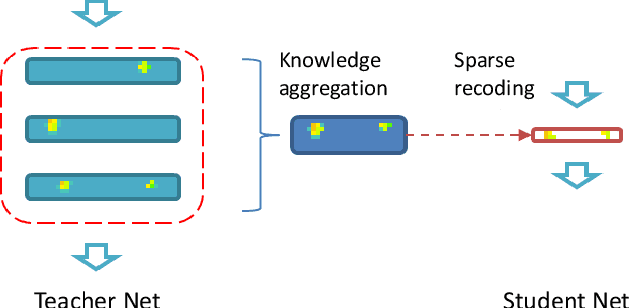

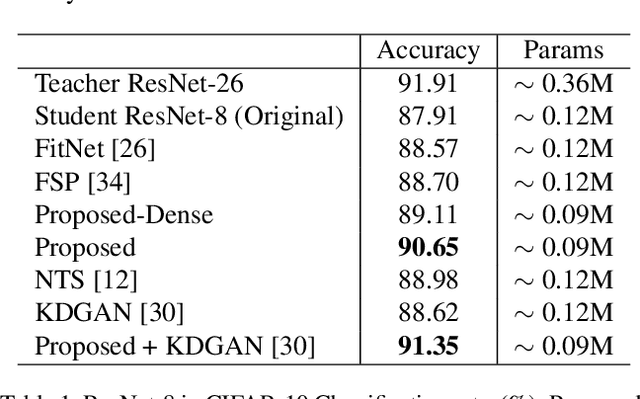

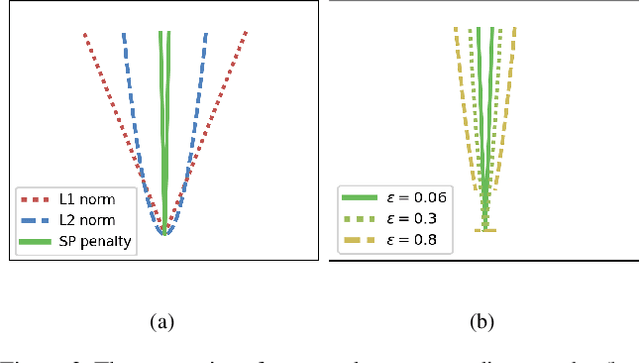

Abstract: Although recent works on knowledge distillation (KD) have achieved further improvement by elaborately modeling the decision boundary as posterior knowledge, their performance still depends on the hypothesis that the target network has a powerful capacity (representation ability). In this paper, we propose a knowledge representing (KR) framework that mainly focuses on modeling the parameter distribution as prior knowledge. Firstly, we suggest a knowledge aggregation scheme to answer the question of how to represent the prior knowledge from the teacher network. By aggregating the parameter distribution of the teacher network at a more abstract level, the scheme is able to alleviate the phenomenon of residual accumulation in the deeper layers. Secondly, to address the critical issue of which prior knowledge is most important for better distillation, we design a sparse recoding penalty that constrains the student network to learn with penalized gradients. With the proposed penalty, the student network can effectively avoid over-regularization during knowledge distillation and converge faster. The quantitative experiments show that the proposed framework achieves state-of-the-art performance, even when the target network does not have the expected capacity. Moreover, the framework is flexible enough to be combined with other KD methods based on posterior knowledge.
