Hongliang Qiao

Diffusion Model Agnostic Social Influence Maximization in Hyperbolic Space

Feb 19, 2025

Abstract: The Influence Maximization (IM) problem aims to find a small set of influential users to maximize their influence spread in a social network. Traditional methods rely on fixed diffusion models with known parameters, limiting their generalization to real-world scenarios. In contrast, graph representation learning-based methods have gained wide attention for overcoming this limitation by learning user representations to capture influence characteristics. However, existing studies are built on Euclidean space, which fails to effectively capture the latent hierarchical features of social influence distribution. As a result, users' influence spread cannot be effectively measured through the learned representations. To alleviate these limitations, we propose HIM, a novel diffusion model agnostic method that leverages hyperbolic representation learning to estimate users' potential influence spread from social propagation data. HIM consists of two key components. First, a hyperbolic influence representation module encodes influence spread patterns from network structure and historical influence activations into expressive hyperbolic user representations. Hence, the influence magnitude of users can be reflected through the geometric properties of hyperbolic space, where highly influential users tend to cluster near the space origin. Second, a novel adaptive seed selection module is developed to flexibly and effectively select seed users using the positional information of learned user representations. Extensive experiments on five network datasets demonstrate the superior effectiveness and efficiency of our method for the IM problem with unknown diffusion model parameters, highlighting its potential for large-scale real-world social networks.
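The geometric intuition in this abstract, that influential users sit near the origin of hyperbolic space, can be illustrated with a minimal sketch. It assumes the unit Poincaré ball model with curvature -1 (the abstract does not say which hyperbolic model HIM uses), and the function names and toy embeddings are hypothetical. Picking the users closest to the origin is a naive stand-in for illustration only, not HIM's adaptive seed selection module.

```python
import math


def poincare_origin_distance(x):
    """Hyperbolic distance from the origin to x in the unit Poincare ball.

    Assumes curvature -1, where d(0, x) = 2 * artanh(||x||).
    """
    r = math.sqrt(sum(c * c for c in x))
    if r >= 1.0:
        raise ValueError("point must lie strictly inside the unit ball")
    return 2.0 * math.atanh(r)


def rank_by_origin_distance(embeddings, k):
    """Return the k user ids whose embeddings are closest to the origin.

    Naive illustration only: a smaller distance is read as higher influence.
    """
    ranked = sorted(embeddings, key=lambda u: poincare_origin_distance(embeddings[u]))
    return ranked[:k]


# Hypothetical 2-D user embeddings, all inside the unit ball.
toy_embeddings = {
    "a": (0.05, 0.02),
    "b": (0.60, 0.10),
    "c": (0.10, -0.30),
    "d": (0.70, 0.65),
}

seeds = rank_by_origin_distance(toy_embeddings, 2)
print(seeds)
```

Because distance grows without bound as a point approaches the boundary of the ball, small differences in Euclidean norm near the boundary translate into large differences in hyperbolic distance, which is one reason hyperbolic embeddings are often used for hierarchical data.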

UER: A Heuristic Bias Addressing Approach for Online Continual Learning

Sep 08, 2023

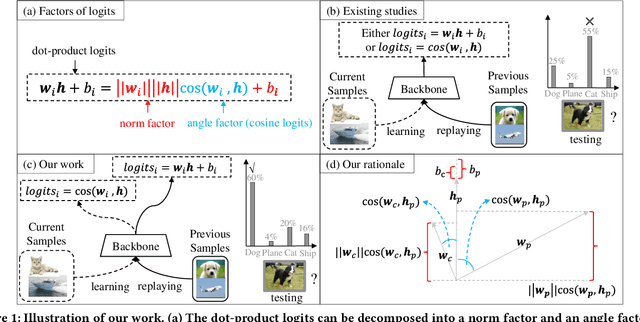

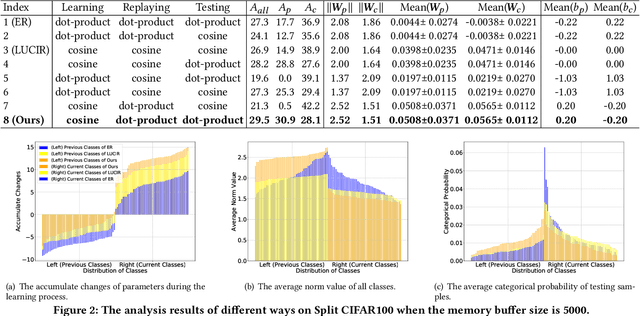

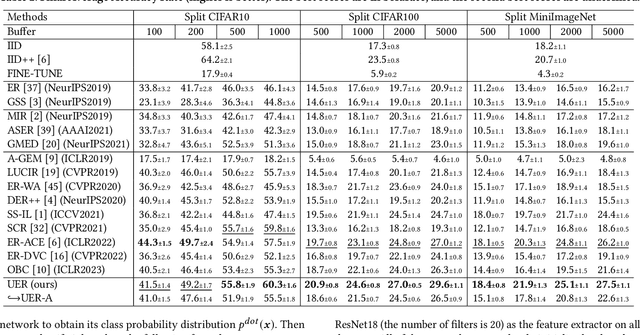

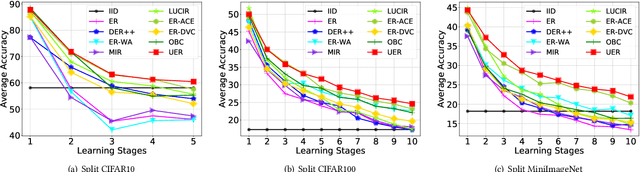

Abstract: Online continual learning aims to continuously train neural networks from a continuous data stream in a single pass over the data. Rehearsal-based methods, the most effective approach, replay part of the previous data. Commonly used predictors in existing methods tend to generate biased dot-product logits that favor the classes of the current data, which is known as the bias issue and is a manifestation of forgetting. Many approaches have been proposed to overcome forgetting by correcting the bias; however, they still leave room for improvement in the online setting. In this paper, we try to address the bias issue with a simpler and more efficient method. By decomposing the dot-product logits into an angle factor and a norm factor, we empirically find that the bias problem mainly occurs in the angle factor, which can be used to learn novel knowledge as cosine logits. In contrast, the norm factor, abandoned by existing methods, helps retain historical knowledge. Based on this observation, we propose to leverage the norm factor to balance new and old knowledge and thereby address the bias. To this end, we develop a heuristic approach called unbias experience replay (UER). UER learns current samples using only the angle factor and replays previous samples using both the norm and angle factors. Extensive experiments on three datasets show that UER achieves superior performance over various state-of-the-art methods. The code is available at https://github.com/FelixHuiweiLin/UER.
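The decomposition this abstract describes rests on the identity w · f = ‖w‖ ‖f‖ cos θ. The sketch below shows that split for a single feature vector. It assumes the norm factor is the product ‖w‖ ‖f‖ and the angle factor is cos θ; the abstract does not spell out the exact definitions or how UER combines them in its loss, and all names and values here are hypothetical rather than taken from the linked repository.

```python
import math


def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def decompose_logit(feature, weight):
    """Split a dot-product logit into (norm_factor, angle_factor).

    norm_factor  = ||feature|| * ||weight||   (assumed definition)
    angle_factor = cos(theta) between feature and weight
    Their product equals the original dot-product logit.
    """
    norm_factor = math.sqrt(dot(feature, feature)) * math.sqrt(dot(weight, weight))
    angle_factor = dot(feature, weight) / norm_factor if norm_factor > 0 else 0.0
    return norm_factor, angle_factor


def cosine_logits(feature, class_weights):
    """Angle-only logits, in the spirit of how current samples are learned."""
    return {c: decompose_logit(feature, w)[1] for c, w in class_weights.items()}


def full_logits(feature, class_weights):
    """Norm times angle, i.e. ordinary dot-product logits, as used for replay."""
    out = {}
    for c, w in class_weights.items():
        norm_factor, angle_factor = decompose_logit(feature, w)
        out[c] = norm_factor * angle_factor
    return out


# Hypothetical feature vector and per-class classifier weights.
feature = (3.0, 4.0)
class_weights = {"old": (1.0, 0.0), "new": (0.0, 2.0)}

print(cosine_logits(feature, class_weights))
print(full_logits(feature, class_weights))
```

In this toy case the "new" class has the larger weight norm, so its dot-product logit exceeds the "old" one by more than the cosine values alone would suggest, which shows how the two factors can carry different information.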
