Hongfa Zhao

Unsupervised Trajectory Segmentation and Promoting of Multi-Modal Surgical Demonstrations

Oct 01, 2018

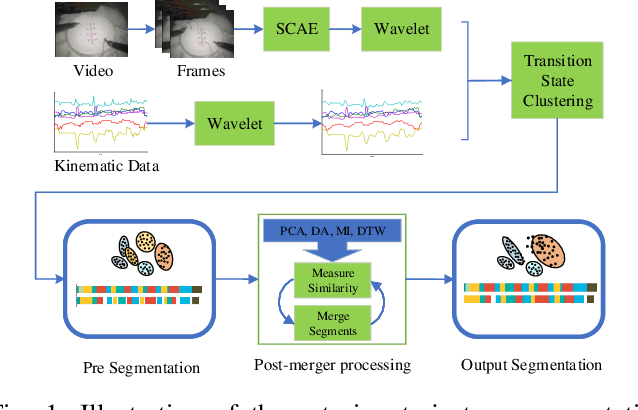

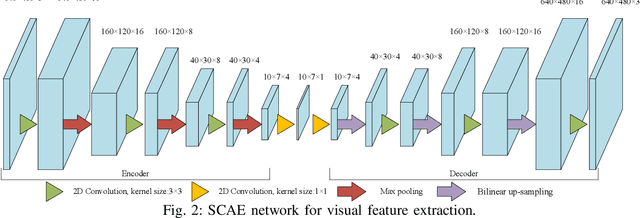

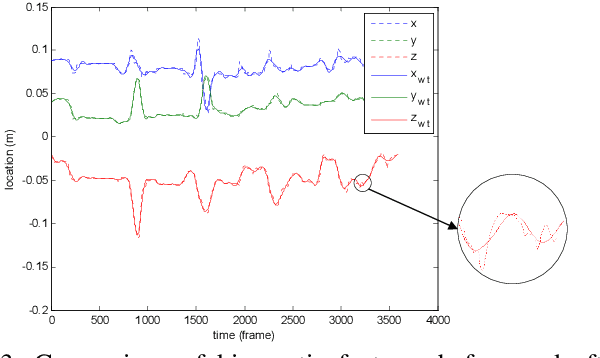

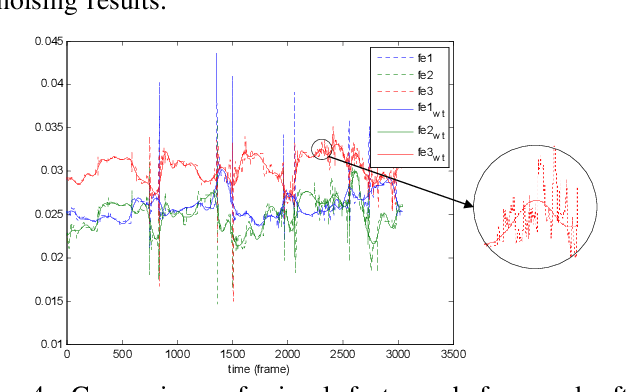

Abstract: To improve the efficiency of surgical trajectory segmentation for robot learning in robot-assisted minimally invasive surgery, this paper presents a fast unsupervised method that uses video and kinematic data, followed by a promoting procedure that addresses over-segmentation. An unsupervised deep learning network, a stacked convolutional auto-encoder, is employed to extract more discriminative features from the videos efficiently. Segmentation accuracy is further improved in two ways. First, a wavelet transform filters out the noise present in the features extracted from the video and kinematic data. Second, the segmentation result is promoted by identifying adjacent segments between which no state transition occurs, based on predefined similarity measurements. Extensive experiments on the public JIGSAWS dataset show that the method achieves much higher segmentation accuracy than state-of-the-art methods in less time.
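
The promoting step described above can be illustrated with a minimal sketch. The abstract does not specify the similarity measurements, so this example assumes a purely hypothetical one: the Euclidean distance between the mean feature vectors of two adjacent segments, compared against a hand-picked threshold. The function names and the toy data are illustrative and not taken from the paper.

```python
from math import sqrt


def mean_vector(frames):
    """Average a non-empty list of equal-length feature vectors."""
    n = len(frames)
    return [sum(col) / n for col in zip(*frames)]


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def merge_similar_segments(features, boundaries, threshold):
    """Merge adjacent segments that look like the same state.

    features:   list of per-frame feature vectors
    boundaries: sorted segment start indices, beginning with 0
    threshold:  assumed similarity cut-off (hypothetical parameter)

    Returns the start indices that remain after merging.
    """
    kept = [boundaries[0]]
    ends = boundaries[1:] + [len(features)]
    for start, end in zip(boundaries[1:], ends[1:]):
        # Compare the current (possibly already merged) segment with the next one.
        prev_mean = mean_vector(features[kept[-1]:start])
        curr_mean = mean_vector(features[start:end])
        if euclidean(prev_mean, curr_mean) >= threshold:
            kept.append(start)  # dissimilar enough: treat as a real transition
    return kept


# Toy data: the first two segments are nearly identical (over-segmentation),
# the third is clearly different.
features = [[0.0, 0.0]] * 3 + [[0.1, 0.0]] * 3 + [[5.0, 5.0]] * 3
print(merge_similar_segments(features, [0, 3, 6], threshold=1.0))  # [0, 6]
```

In this toy case the boundary at frame 3 is dropped because the two neighbouring segments are close in feature space, while the boundary at frame 6 survives. A real pipeline would apply the same idea to the denoised auto-encoder and kinematic features, with whatever similarity measures the paper defines.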
