Hiromu Taketsugu

MMCM: Multimodality-aware Metric using Clustering-based Modes for Probabilistic Human Motion Prediction

Nov 19, 2025

Abstract:This paper proposes a novel metric for Human Motion Prediction (HMP). Since a single past sequence can lead to multiple possible futures, a probabilistic HMP method predicts such multiple motions. While a single motion predicted by a deterministic method is evaluated only with the difference from its ground truth motion, multiple predicted motions should also be evaluated based on their distribution. For this evaluation, this paper focuses on the following two criteria. \textbf{(a) Coverage}: motions should be distributed among multiple motion modes to cover diverse possibilities. \textbf{(b) Validity}: motions should be kinematically valid as future motions observable from a given past motion. However, existing metrics simply appreciate widely distributed motions even if these motions are observed in a single mode and kinematically invalid. To resolve these disadvantages, this paper proposes a Multimodality-aware Metric using Clustering-based Modes (MMCM). For (a) coverage, MMCM divides a motion space into several clusters, each of which is regarded as a mode. These modes are used to explicitly evaluate whether predicted motions are distributed among multiple modes. For (b) validity, MMCM identifies valid modes by collecting possible future motions from a motion dataset. Our experiments validate that our clustering yields sensible mode definitions and that MMCM accurately scores multimodal predictions. Code: https://github.com/placerkyo/MMCM

Human Motion Prediction via Test-domain-aware Adaptation with Easily-available Human Motions Estimated from Videos

May 13, 2025Abstract:In 3D Human Motion Prediction (HMP), conventional methods train HMP models with expensive motion capture data. However, the data collection cost of such motion capture data limits the data diversity, which leads to poor generalizability to unseen motions or subjects. To address this issue, this paper proposes to enhance HMP with additional learning using estimated poses from easily available videos. The 2D poses estimated from the monocular videos are carefully transformed into motion capture-style 3D motions through our pipeline. By additional learning with the obtained motions, the HMP model is adapted to the test domain. The experimental results demonstrate the quantitative and qualitative impact of our method.

Physical Plausibility-aware Trajectory Prediction via Locomotion Embodiment

Mar 21, 2025

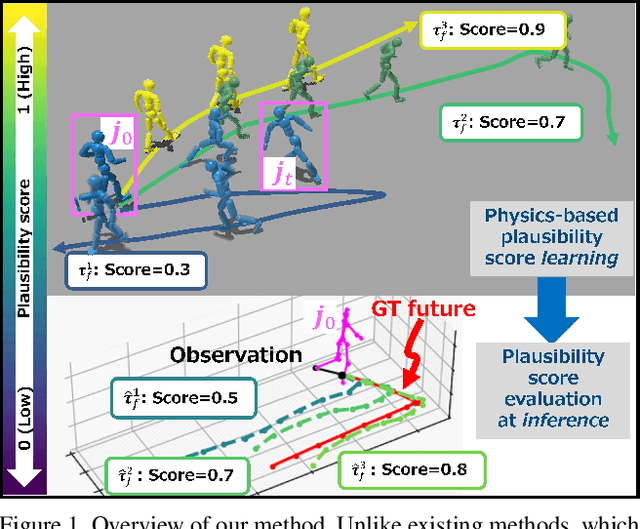

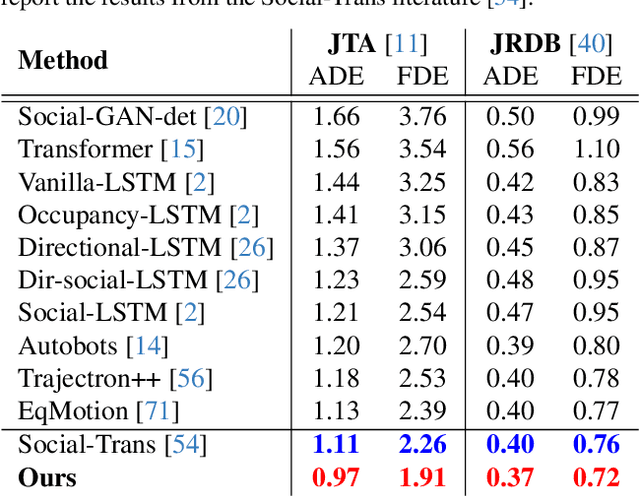

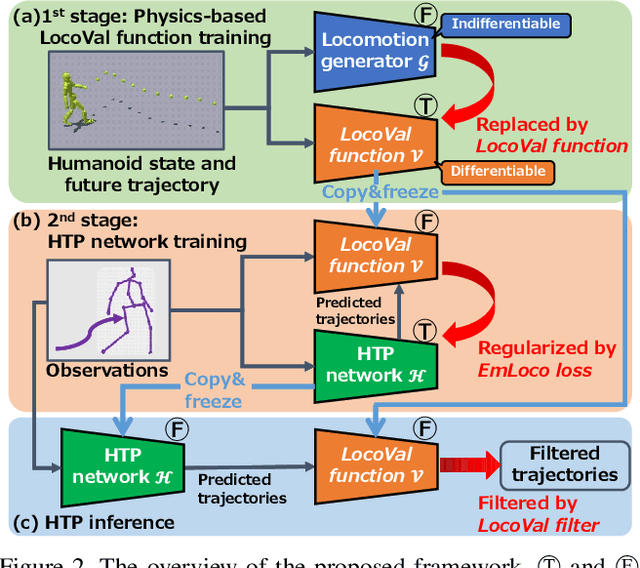

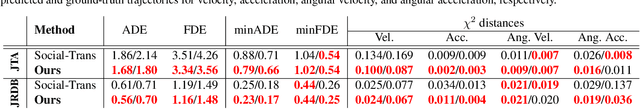

Abstract:Humans can predict future human trajectories even from momentary observations by using human pose-related cues. However, previous Human Trajectory Prediction (HTP) methods leverage the pose cues implicitly, resulting in implausible predictions. To address this, we propose Locomotion Embodiment, a framework that explicitly evaluates the physical plausibility of the predicted trajectory by locomotion generation under the laws of physics. While the plausibility of locomotion is learned with an indifferentiable physics simulator, it is replaced by our differentiable Locomotion Value function to train an HTP network in a data-driven manner. In particular, our proposed Embodied Locomotion loss is beneficial for efficiently training a stochastic HTP network using multiple heads. Furthermore, the Locomotion Value filter is proposed to filter out implausible trajectories at inference. Experiments demonstrate that our method enhances even the state-of-the-art HTP methods across diverse datasets and problem settings. Our code is available at: https://github.com/ImIntheMiddle/EmLoco.

Active Transfer Learning for Efficient Video-Specific Human Pose Estimation

Nov 08, 2023Abstract:Human Pose (HP) estimation is actively researched because of its wide range of applications. However, even estimators pre-trained on large datasets may not perform satisfactorily due to a domain gap between the training and test data. To address this issue, we present our approach combining Active Learning (AL) and Transfer Learning (TL) to adapt HP estimators to individual video domains efficiently. For efficient learning, our approach quantifies (i) the estimation uncertainty based on the temporal changes in the estimated heatmaps and (ii) the unnaturalness in the estimated full-body HPs. These quantified criteria are then effectively combined with the state-of-the-art representativeness criterion to select uncertain and diverse samples for efficient HP estimator learning. Furthermore, we reconsider the existing Active Transfer Learning (ATL) method to introduce novel ideas related to the retraining methods and Stopping Criteria (SC). Experimental results demonstrate that our method enhances learning efficiency and outperforms comparative methods. Our code is publicly available at: https://github.com/ImIntheMiddle/VATL4Pose-WACV2024

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge