Himanshu Buckchash

Hedging Is Not All You Need: A Simple Baseline for Online Learning Under Haphazard Inputs

Sep 16, 2024

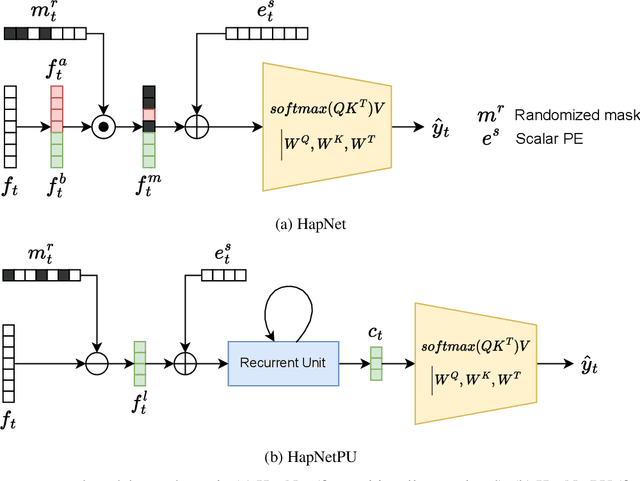

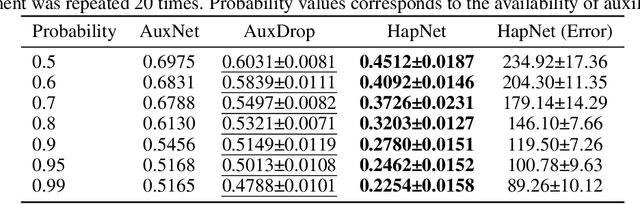

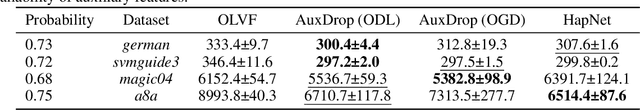

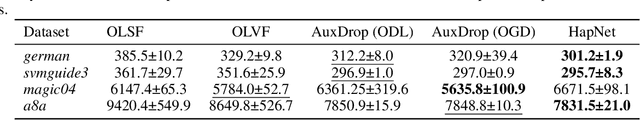

Abstract:Handling haphazard streaming data, such as data from edge devices, presents a challenging problem. Over time, the incoming data becomes inconsistent, with missing, faulty, or new inputs reappearing. Therefore, it requires models that are reliable. Recent methods to solve this problem depend on a hedging-based solution and require specialized elements like auxiliary dropouts, forked architectures, and intricate network design. We observed that hedging can be reduced to a special case of weighted residual connection; this motivated us to approximate it with plain self-attention. In this work, we propose HapNet, a simple baseline that is scalable, does not require online backpropagation, and is adaptable to varying input types. All present methods are restricted to scaling with a fixed window; however, we introduce a more complex problem of scaling with a variable window where the data becomes positionally uncorrelated, and cannot be addressed by present methods. We demonstrate that a variant of the proposed approach can work even for this complex scenario. We extensively evaluated the proposed approach on five benchmarks and found competitive performance.

Towards a More Inclusive AI: Progress and Perspectives in Large Language Model Training for the Sámi Language

May 09, 2024Abstract:S\'ami, an indigenous language group comprising multiple languages, faces digital marginalization due to the limited availability of data and sophisticated language models designed for its linguistic intricacies. This work focuses on increasing technological participation for the S\'ami language. We draw the attention of the ML community towards the language modeling problem of Ultra Low Resource (ULR) languages. ULR languages are those for which the amount of available textual resources is very low, and the speaker count for them is also very low. ULRLs are also not supported by mainstream Large Language Models (LLMs) like ChatGPT, due to which gathering artificial training data for them becomes even more challenging. Mainstream AI foundational model development has given less attention to this category of languages. Generally, these languages have very few speakers, making it hard to find them. However, it is important to develop foundational models for these ULR languages to promote inclusion and the tangible abilities and impact of LLMs. To this end, we have compiled the available S\'ami language resources from the web to create a clean dataset for training language models. In order to study the behavior of modern LLM models with ULR languages (S\'ami), we have experimented with different kinds of LLMs, mainly at the order of $\sim$ seven billion parameters. We have also explored the effect of multilingual LLM training for ULRLs. We found that the decoder-only models under a sequential multilingual training scenario perform better than joint multilingual training, whereas multilingual training with high semantic overlap, in general, performs better than training from scratch.This is the first study on the S\'ami language for adapting non-statistical language models that use the latest developments in the field of natural language processing (NLP).

pNNCLR: Stochastic Pseudo Neighborhoods for Contrastive Learning based Unsupervised Representation Learning Problems

Aug 14, 2023

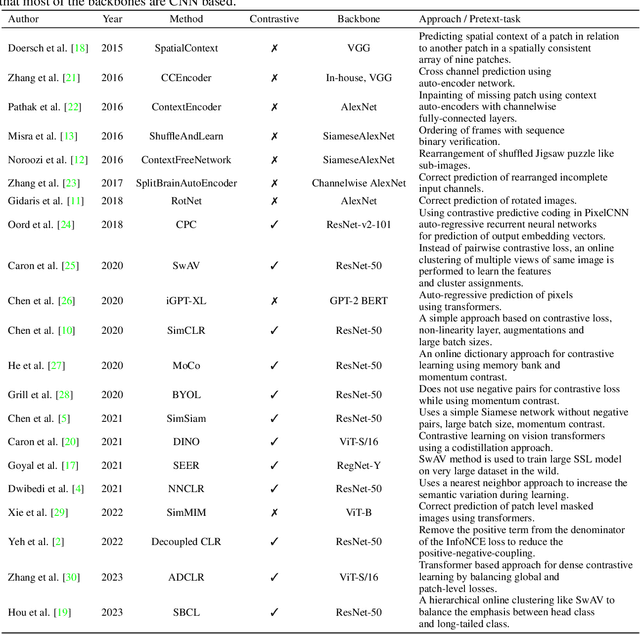

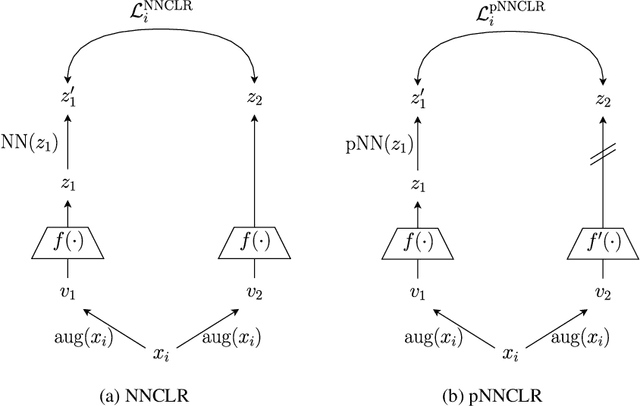

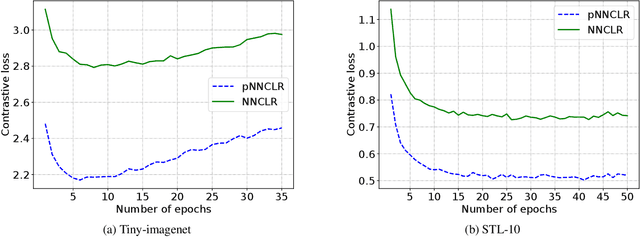

Abstract:Nearest neighbor (NN) sampling provides more semantic variations than pre-defined transformations for self-supervised learning (SSL) based image recognition problems. However, its performance is restricted by the quality of the support set, which holds positive samples for the contrastive loss. In this work, we show that the quality of the support set plays a crucial role in any nearest neighbor based method for SSL. We then provide a refined baseline (pNNCLR) to the nearest neighbor based SSL approach (NNCLR). To this end, we introduce pseudo nearest neighbors (pNN) to control the quality of the support set, wherein, rather than sampling the nearest neighbors, we sample in the vicinity of hard nearest neighbors by varying the magnitude of the resultant vector and employing a stochastic sampling strategy to improve the performance. Additionally, to stabilize the effects of uncertainty in NN-based learning, we employ a smooth-weight-update approach for training the proposed network. Evaluation of the proposed method on multiple public image recognition and medical image recognition datasets shows that it performs up to 8 percent better than the baseline nearest neighbor method, and is comparable to other previously proposed SSL methods.

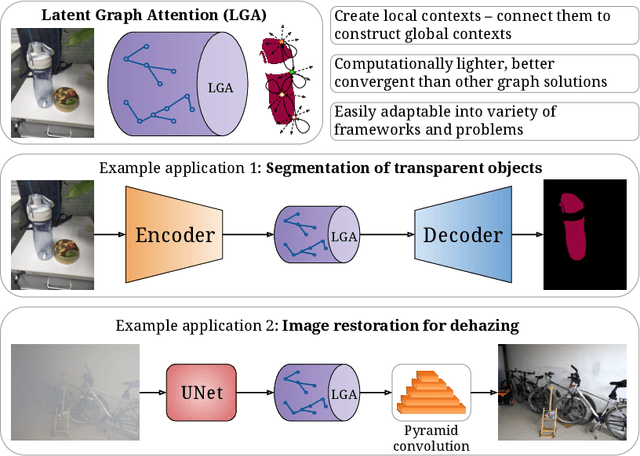

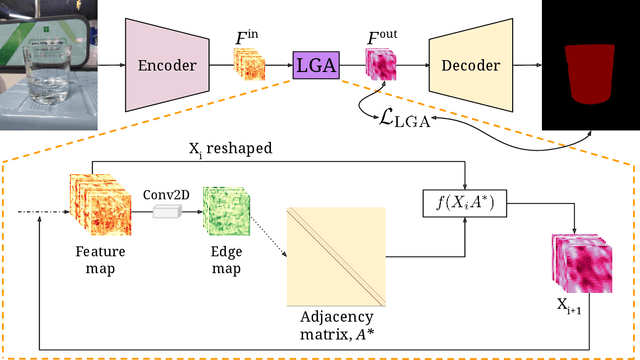

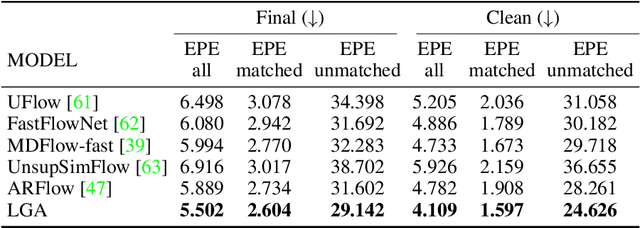

Latent Graph Attention for Enhanced Spatial Context

Jul 12, 2023

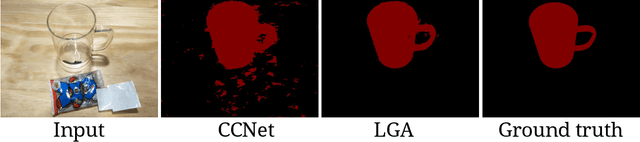

Abstract:Global contexts in images are quite valuable in image-to-image translation problems. Conventional attention-based and graph-based models capture the global context to a large extent, however, these are computationally expensive. Moreover, the existing approaches are limited to only learning the pairwise semantic relation between any two points on the image. In this paper, we present Latent Graph Attention (LGA) a computationally inexpensive (linear to the number of nodes) and stable, modular framework for incorporating the global context in the existing architectures, especially empowering small-scale architectures to give performance closer to large size architectures, thus making the light-weight architectures more useful for edge devices with lower compute power and lower energy needs. LGA propagates information spatially using a network of locally connected graphs, thereby facilitating to construct a semantically coherent relation between any two spatially distant points that also takes into account the influence of the intermediate pixels. Moreover, the depth of the graph network can be used to adapt the extent of contextual spread to the target dataset, thereby being able to explicitly control the added computational cost. To enhance the learning mechanism of LGA, we also introduce a novel contrastive loss term that helps our LGA module to couple well with the original architecture at the expense of minimal additional computational load. We show that incorporating LGA improves the performance on three challenging applications, namely transparent object segmentation, image restoration for dehazing and optical flow estimation.

Computational Models for Academic Performance Estimation

Sep 06, 2020

Abstract:Evaluation of students' performance for the completion of courses has been a major problem for both students and faculties during the work-from-home period in this COVID pandemic situation. To this end, this paper presents an in-depth analysis of deep learning and machine learning approaches for the formulation of an automated students' performance estimation system that works on partially available students' academic records. Our main contributions are (a) a large dataset with fifteen courses (shared publicly for academic research) (b) statistical analysis and ablations on the estimation problem for this dataset (c) predictive analysis through deep learning approaches and comparison with other arts and machine learning algorithms. Unlike previous approaches that rely on feature engineering or logical function deduction, our approach is fully data-driven and thus highly generic with better performance across different prediction tasks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge