Henni Ouerdane

Fusion-ResNet: A Lightweight multi-label NILM Model Using PCA-ICA Feature Fusion

Nov 15, 2025

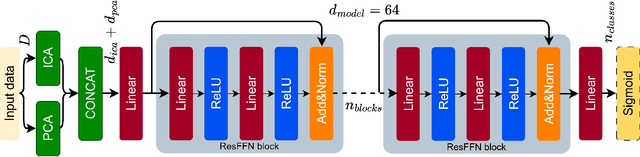

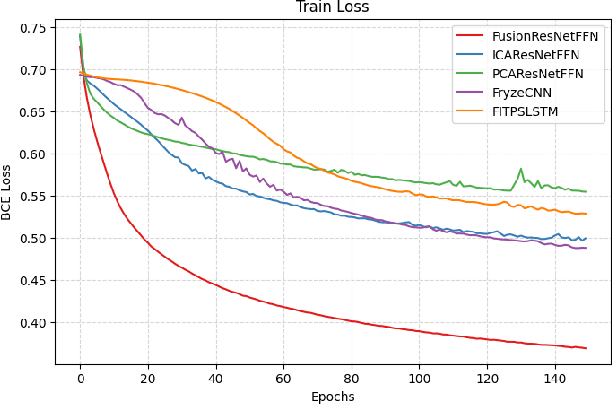

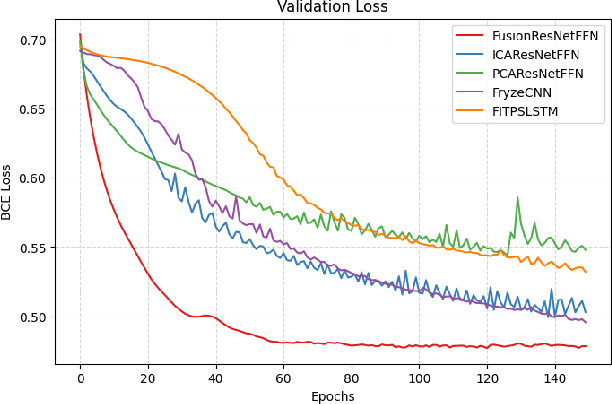

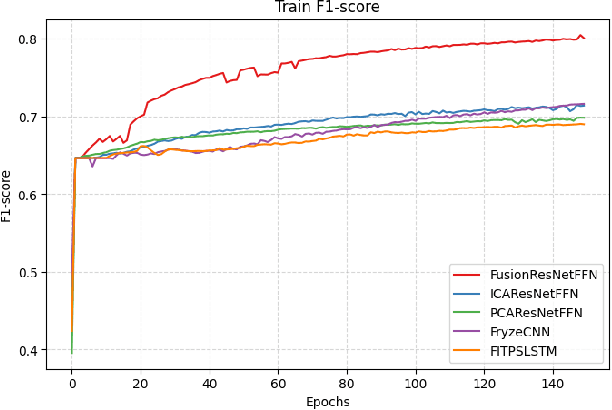

Abstract: Non-intrusive load monitoring (NILM) is an advanced load monitoring technique that uses data-driven algorithms to disaggregate the total power consumption of a household into the consumption of individual appliances. However, real-world NILM deployment still faces major challenges, including overfitting, low model generalization, and disaggregating a large number of appliances operating at the same time. To address these challenges, this work proposes an end-to-end framework for the NILM classification task, which consists of high-frequency labeled data, a feature extraction method, and a lightweight neural network. Within this framework, we introduce a novel feature extraction method that fuses Independent Component Analysis (ICA) and Principal Component Analysis (PCA) features. Moreover, we propose a lightweight architecture for multi-label NILM classification (Fusion-ResNet). The proposed feature-based model achieves a higher $F1$ score on average and across different appliances compared to state-of-the-art NILM classifiers while minimizing the training and inference time. Finally, we assessed the performance of our model against baselines with a varying number of simultaneously active devices. Results demonstrate that Fusion-ResNet is relatively robust to stress conditions with up to 15 concurrently active appliances.
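As a rough illustration of what fusing PCA and ICA features could look like, the sketch below computes both feature sets with NumPy and concatenates them. The abstract does not say how the fusion is done, so the plain concatenation, the function names (`pca_features`, `ica_features`, `fused_features`), and the use of a symmetric FastICA with a tanh nonlinearity are all assumptions made for illustration, not the paper's method.

```python
import numpy as np


def _center(X):
    """Subtract the per-column mean."""
    return X - X.mean(axis=0)


def pca_features(X, k):
    """Scores of the rows of X on the top-k principal directions."""
    Xc = _center(X)
    # Rows of Vt are principal directions, ordered by singular value.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:k].T


def ica_features(X, k, n_iter=200, seed=0):
    """Symmetric FastICA (tanh nonlinearity) on PCA-whitened data."""
    n = X.shape[0]
    U, _, _ = np.linalg.svd(_center(X), full_matrices=False)
    Z = U[:, :k] * np.sqrt(n)  # whitened data: Z.T @ Z / n is the identity
    W = np.random.default_rng(seed).standard_normal((k, k))
    for _ in range(n_iter):
        # Symmetric decorrelation: replace W by the nearest orthogonal matrix.
        Uw, _, Vtw = np.linalg.svd(W)
        W = Uw @ Vtw
        # Fixed-point update: E[z g(w^T z)] - E[g'(w^T z)] w for every row w.
        Y = np.tanh(Z @ W.T)
        W = (Y.T @ Z) / n - np.diag((1.0 - Y**2).mean(axis=0)) @ W
    Uw, _, Vtw = np.linalg.svd(W)
    return Z @ (Uw @ Vtw).T


def fused_features(X, k):
    """Assumed fusion rule: concatenate PCA and ICA features column-wise."""
    return np.hstack([pca_features(X, k), ica_features(X, k)])
```

Calling `fused_features(X, k)` on an array with `n` rows returns an `(n, 2 * k)` array that a downstream classifier could consume.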

Enhancing Non-Intrusive Load Monitoring with Features Extracted by Independent Component Analysis

Jan 28, 2025

Abstract: In this paper, a novel neural network architecture is proposed to address the challenges in energy disaggregation algorithms. These challenges include the limited availability of data and the complexity of disaggregating a large number of appliances operating simultaneously. The proposed model utilizes independent component analysis as the backbone of the neural network and is evaluated using the F1-score for varying numbers of appliances working concurrently. Our results demonstrate that the model is less prone to overfitting, exhibits low complexity, and effectively decomposes signals with many individual components. Furthermore, we show that the proposed model outperforms existing algorithms when applied to real-world data.
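One way to read "evaluated using the F1-score for varying numbers of appliances working concurrently" is to group test samples by how many appliances are truly active and compute a score per group. The sketch below does this with a micro-averaged F1; the averaging mode, the function name `f1_by_active_count`, and the convention of returning 1.0 for a group with no positives and no predictions are assumptions, since the abstract does not specify them.

```python
import numpy as np


def f1_by_active_count(y_true, y_pred):
    """Micro-averaged F1, grouped by the number of truly active appliances.

    y_true, y_pred: (n_samples, n_appliances) binary on/off indicators.
    Returns a dict mapping active-appliance count -> F1 score.
    """
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    counts = y_true.sum(axis=1)
    scores = {}
    for c in np.unique(counts):
        mask = counts == c
        tp = np.sum(y_true[mask] & y_pred[mask])
        fp = np.sum(~y_true[mask] & y_pred[mask])
        fn = np.sum(y_true[mask] & ~y_pred[mask])
        denom = 2 * tp + fp + fn
        # Assumed convention: nothing to find and nothing predicted counts as 1.0.
        scores[int(c)] = float(2 * tp / denom) if denom else 1.0
    return scores
```

Plotting the returned scores against the active-appliance count gives a simple view of how performance changes as more appliances overlap.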

Physics-informed appliance signatures generator for energy disaggregation

Jan 03, 2024

Abstract: Energy disaggregation is a promising solution to access detailed information on energy consumption in a household, by itemizing its total energy consumption. However, in real-world applications, overfitting remains a challenging problem for data-driven disaggregation methods. First, the available real-world datasets are biased towards the most frequently used appliances. Second, both real and synthetic publicly available datasets are limited in number of appliances, which may not be sufficient for a disaggregation algorithm to learn complex relations among different types of appliances and their states. To address the lack of appliance data, we propose two physics-informed data generators: one for high sampling rate signals (kHz) and another for low sampling rate signals (Hz). These generators rely on prior knowledge of the physics of appliance energy consumption, and are capable of simulating a virtually unlimited number of different appliances and their corresponding signatures for any time period. Both methods involve defining a mathematical model, selecting centroids corresponding to individual appliances, sampling model parameters around each centroid, and finally substituting the obtained parameters into the mathematical model. Additionally, by using Principal Component Analysis and Kullback-Leibler divergence, we demonstrate that our methods significantly outperform the previous approaches.
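The four steps named in the abstract (define a model, pick a centroid per appliance, sample parameters around it, substitute them into the model) can be sketched as follows for the high sampling rate case. The specific model here, a sum of odd harmonics of a 50 Hz mains frequency with Gaussian jitter on amplitudes and phases, is a hypothetical stand-in chosen for illustration; the abstract does not state the actual model or sampling distribution.

```python
import numpy as np

F0 = 50.0  # assumed mains frequency in Hz


def harmonic_current(t, amplitudes, phases):
    """Hypothetical model: current as a sum of odd harmonics of F0."""
    orders = 2 * np.arange(len(amplitudes)) + 1  # harmonic orders 1, 3, 5, ...
    angles = 2 * np.pi * F0 * np.outer(orders, t) + phases[:, None]
    return amplitudes @ np.sin(angles)


def generate_signatures(centroid, n, spread=0.05, fs=10_000, seed=0):
    """Sample n one-cycle waveforms around one appliance centroid.

    centroid: (amplitudes, phases) describing the appliance's typical waveform.
    spread:   relative jitter on amplitudes, absolute jitter (rad) on phases.
    """
    rng = np.random.default_rng(seed)
    t = np.arange(int(fs / F0)) / fs  # time axis covering one mains cycle
    amp0, ph0 = (np.asarray(v, dtype=float) for v in centroid)
    out = np.empty((n, t.size))
    for i in range(n):
        # Sample model parameters around the centroid ...
        amps = amp0 * (1.0 + spread * rng.standard_normal(amp0.size))
        phs = ph0 + spread * rng.standard_normal(ph0.size)
        # ... and substitute them into the mathematical model.
        out[i] = harmonic_current(t, amps, phs)
    return out
```

Each distinct centroid plays the role of one appliance, so adding appliances to a synthetic dataset amounts to adding centroids.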

Combining model-predictive control and predictive reinforcement learning for stable quadrupedal robot locomotion

Jul 15, 2023

Abstract: Stable gait generation is a crucial problem for legged robot locomotion, as it affects other critical performance factors such as mobility over uneven terrain and power consumption. Gait generation stability results from the efficient control of the interaction between the legged robot's body and the environment in which it moves. Here, we study how this can be achieved by a combination of model-predictive and predictive reinforcement learning controllers. Model-predictive control (MPC) is a well-established method that does not utilize any online learning (except for some adaptive variations) as it provides a convenient interface for state constraints management. Reinforcement learning (RL), in contrast, relies on adaptation based on pure experience. In its bare-bone variants, RL is not always suitable for robots due to their high complexity and expensive simulation/experimentation. In this work, we combine both control methods to address the quadrupedal robot stable gait generation problem. The hybrid approach that we develop and apply uses a cost roll-out algorithm with a tail cost in the form of a Q-function modeled by a neural network; this alleviates the computational complexity, which grows exponentially with the prediction horizon in a purely MPC approach. We demonstrate that our RL gait controller achieves stable locomotion at short horizons, where a nominal MPC controller fails. Further, our controller is capable of live operation, meaning that it does not require previous training. Our results suggest that the hybridization of MPC with RL, as presented here, is beneficial to achieve a good balance between online control capabilities and computational complexity.
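The idea of a cost roll-out with a tail cost can be illustrated on a toy problem. In the sketch below, a short-horizon controller enumerates all action sequences, sums the stage costs along each roll-out, and adds a tail cost at the final state. Everything specific is an assumption for illustration: the single-integrator dynamics, the quadratic stage cost, the three-element action set, and above all `tail_cost`, which is a fixed quadratic standing in for the neural-network Q-function described in the abstract.

```python
import itertools

ACTIONS = (-1.0, 0.0, 1.0)  # hypothetical discrete action set


def step(x, u, dt=0.1):
    """Toy dynamics (single integrator), not a robot model."""
    return x + dt * u


def stage_cost(x, u):
    """Quadratic running cost on state and control effort."""
    return x**2 + 0.1 * u**2


def tail_cost(x):
    """Stand-in for a learned Q-function evaluated at the end of the horizon."""
    return 10.0 * x**2


def rollout_controller(x0, horizon):
    """Return the first action of the cheapest roll-out with tail cost."""
    best_cost, best_first = float("inf"), None
    # The number of candidate sequences is len(ACTIONS) ** horizon, which is
    # the exponential growth that a good tail cost lets one avoid by keeping
    # the horizon short.
    for seq in itertools.product(ACTIONS, repeat=horizon):
        x, cost = x0, 0.0
        for u in seq:
            cost += stage_cost(x, u)
            x = step(x, u)
        cost += tail_cost(x)
        if cost < best_cost:
            best_cost, best_first = cost, seq[0]
    return best_first
```

Applied in receding-horizon fashion, the controller would call `rollout_controller` at every step and execute only the returned first action.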

Data-driven control of micro-climate in buildings; an event-triggered reinforcement learning approach

Jan 28, 2020

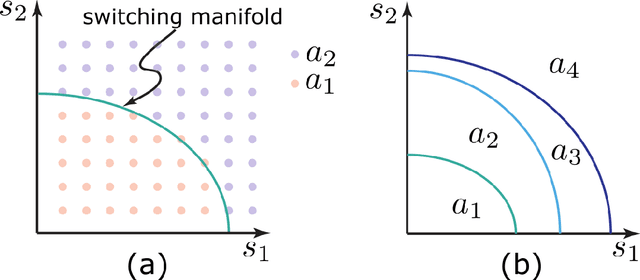

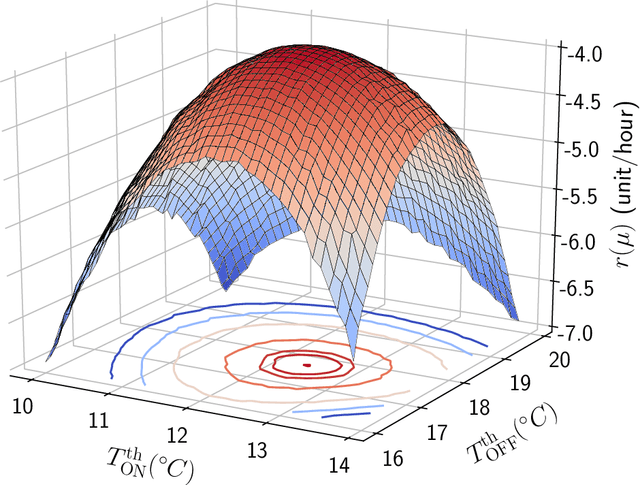

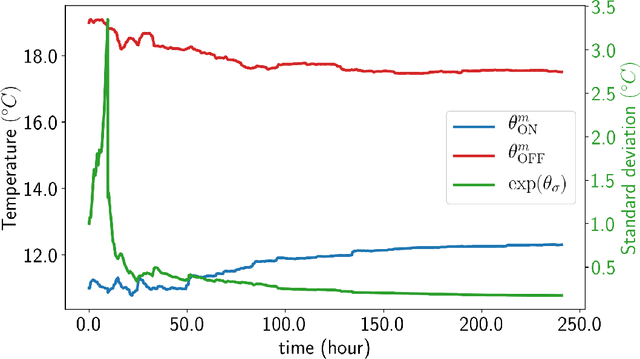

Abstract: Smart buildings have great potential for shaping an energy-efficient, sustainable, and more economic future for our planet, as buildings account for approximately 40% of the global energy consumption. A key challenge for large-scale plug-and-play deployment of smart building technology is the ability to learn a good control policy in a short period of time, i.e., having a low sample complexity for the learning control agent. Motivated by this problem and to remedy the issue of high sample complexity in the general context of cyber-physical systems, we propose an event-triggered paradigm for learning and control with variable-time intervals, as opposed to the traditional constant-time sampling. The events occur when the system state crosses the a priori-parameterized switching manifolds; this crossing triggers the learning as well as the control processes. Policy gradient and temporal difference methods are employed to learn the optimal switching manifolds which define the optimal control policy. We propose two event-triggered learning algorithms for stochastic and deterministic control policies. We show the efficacy of our proposed approach via designing a smart learning thermostat for autonomous micro-climate control in buildings. The event-triggered algorithms are implemented on a single-zone building to decrease buildings' energy consumption as well as to increase occupants' comfort. Simulation results confirm the efficacy and improved sample efficiency of the proposed event-triggered approach for online learning and control.
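To make the event-triggered idea concrete, the sketch below simulates a single-zone thermostat whose control decision changes only when the temperature crosses one of two thresholds, which play the role of switching manifolds. The thresholds are fixed here rather than learned by policy gradient or temporal difference methods as in the abstract, and the first-order thermal model with its `loss` and `heat` coefficients is a hypothetical choice for illustration.

```python
def simulate_thermostat(t_on=20.0, t_off=22.0, temp=21.0, outside=5.0,
                        steps=2000, dt=60.0, loss=1e-4, heat=5e-3):
    """Simulate an event-triggered on/off heater in a single thermal zone.

    t_on, t_off: switching thresholds in degrees C (fixed here, not learned).
    loss:        heat-loss coefficient in 1/s (hypothetical value).
    heat:        heating rate in degrees C per second (hypothetical value).
    Returns (events, temps): step indices where a threshold was crossed,
    and the temperature after every step.
    """
    heater_on = False
    events, temps = [], []
    for k in range(steps):
        # Assumed first-order model: leakage to outside plus heater input.
        temp += dt * (loss * (outside - temp) + (heat if heater_on else 0.0))
        temps.append(temp)
        # The control action changes only when a threshold is crossed.
        if not heater_on and temp <= t_on:
            heater_on = True
            events.append(k)
        elif heater_on and temp >= t_off:
            heater_on = False
            events.append(k)
    return events, temps
```

With these default values the number of events is far smaller than the number of simulation steps, which is the sense in which event-triggered updates reduce the samples a learning agent would need to process.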
