Sahar Moghimian Hoosh

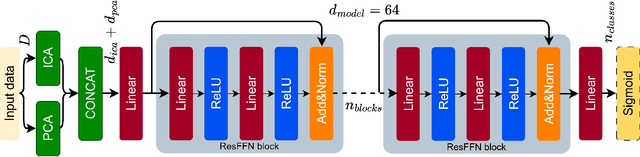

Fusion-ResNet: A Lightweight multi-label NILM Model Using PCA-ICA Feature Fusion

Nov 15, 2025

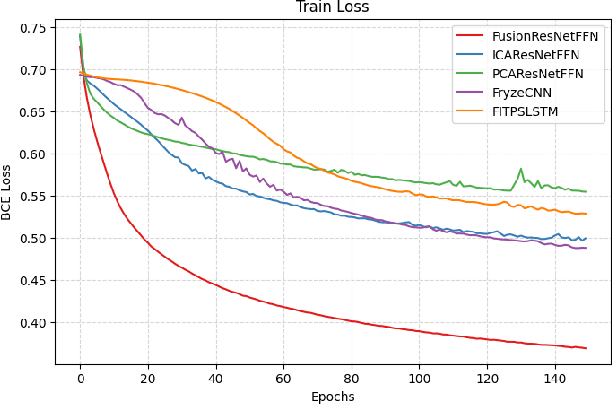

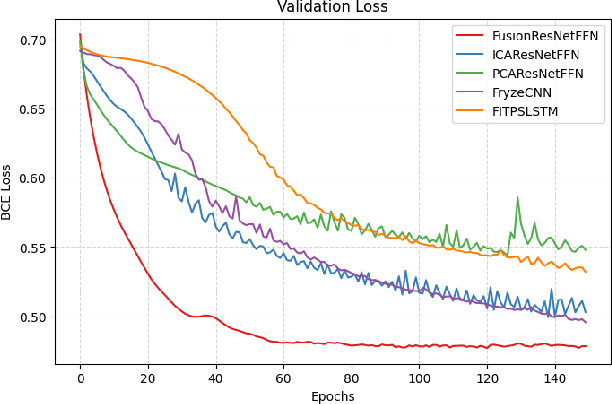

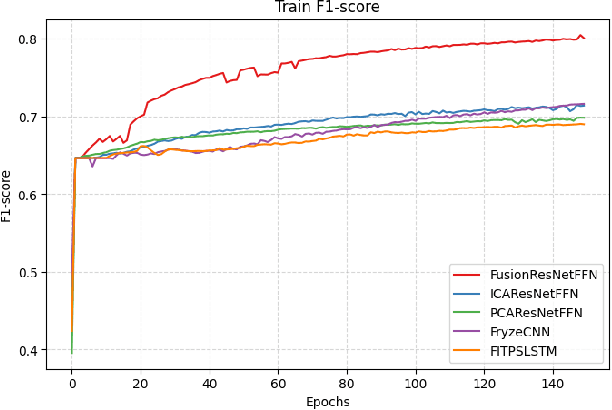

Abstract: Non-intrusive load monitoring (NILM) is an advanced load monitoring technique that uses data-driven algorithms to disaggregate the total power consumption of a household into the consumption of individual appliances. However, real-world NILM deployment still faces major challenges, including overfitting, poor model generalization, and the difficulty of disaggregating a large number of appliances operating at the same time. To address these challenges, this work proposes an end-to-end framework for the NILM classification task, which consists of high-frequency labeled data, a feature extraction method, and a lightweight neural network. Within this framework, we introduce a novel feature extraction method that fuses Independent Component Analysis (ICA) and Principal Component Analysis (PCA) features. Moreover, we propose a lightweight architecture for multi-label NILM classification (Fusion-ResNet). The proposed feature-based model achieves a higher $F1$ score than state-of-the-art NILM classifiers, both on average and across different appliances, while minimizing training and inference time. Finally, we assess the performance of our model against baselines with a varying number of simultaneously active devices. Results demonstrate that Fusion-ResNet is relatively robust to stress conditions with up to 15 concurrently active appliances.
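The abstract does not say how the PCA and ICA features are fused. As a rough illustration only, the sketch below assumes the simplest option, concatenating the two feature sets per sample. The function names, the number of components `k`, and the choice of a symmetric FastICA with a tanh nonlinearity are all assumptions made for this example, not details from the paper.

```python
import numpy as np

def pca_features(X, k):
    """Project the centered rows of X onto the top-k principal directions."""
    Xc = X - X.mean(axis=0)
    # Rows of Vt are principal directions, ordered by singular value.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:k].T

def _sym_decorrelate(W):
    """Return the closest orthogonal matrix to W, i.e. (W W^T)^(-1/2) W."""
    u, _, vt = np.linalg.svd(W)
    return u @ vt

def ica_features(X, k, n_iter=200, seed=0):
    """Symmetric FastICA (tanh nonlinearity) on PCA-whitened data."""
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    Xc = X - X.mean(axis=0)
    U, _, _ = np.linalg.svd(Xc, full_matrices=False)
    Z = U[:, :k] * np.sqrt(n)  # whitened data: Z.T @ Z / n == I
    W = _sym_decorrelate(rng.standard_normal((k, k)))
    for _ in range(n_iter):
        Y = Z @ W.T                      # current source estimates
        G = np.tanh(Y)                   # g(y)
        G_prime = 1.0 - G ** 2           # g'(y)
        # Fixed-point update per row: E[z g(w^T z)] - E[g'(w^T z)] w
        W_new = (G.T @ Z) / n - np.diag(G_prime.mean(axis=0)) @ W
        W = _sym_decorrelate(W_new)
    return Z @ W.T

def fuse_features(X, k):
    """Assumed fusion rule: concatenate PCA and ICA features per sample."""
    return np.hstack([pca_features(X, k), ica_features(X, k)])
```

For an input of shape `(n_samples, n_raw_features)`, `fuse_features(X, k)` returns an array of shape `(n_samples, 2 * k)` that could feed a downstream classifier. Other fusion rules, such as weighting or learned combination, are equally compatible with the abstract.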

Enhancing Non-Intrusive Load Monitoring with Features Extracted by Independent Component Analysis

Jan 28, 2025

Abstract: In this paper, a novel neural network architecture is proposed to address the challenges in energy disaggregation algorithms. These challenges include the limited availability of data and the complexity of disaggregating a large number of appliances operating simultaneously. The proposed model utilizes independent component analysis as the backbone of the neural network and is evaluated using the F1-score for varying numbers of appliances working concurrently. Our results demonstrate that the model is less prone to overfitting, exhibits low complexity, and effectively decomposes signals with many individual components. Furthermore, we show that the proposed model outperforms existing algorithms when applied to real-world data.
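Evaluating the F1-score for varying numbers of concurrently working appliances can be pictured as grouping samples by how many appliances are truly active and scoring each group separately. The sketch below is one possible reading of that protocol, assuming binary on/off label matrices and a micro-averaged F1 per group; the function name and the averaging choice are assumptions, not taken from the paper.

```python
import numpy as np

def f1_by_active_count(y_true, y_pred):
    """Micro-averaged F1 per number of truly active appliances.

    y_true, y_pred: binary arrays of shape (n_samples, n_appliances).
    Returns {active_count: f1}; a group with no positives in either
    array gets NaN because F1 is undefined there.
    """
    y_true = np.asarray(y_true).astype(bool)
    y_pred = np.asarray(y_pred).astype(bool)
    counts = y_true.sum(axis=1)
    scores = {}
    for c in np.unique(counts):
        mask = counts == c
        tp = np.sum(y_true[mask] & y_pred[mask])
        fp = np.sum(~y_true[mask] & y_pred[mask])
        fn = np.sum(y_true[mask] & ~y_pred[mask])
        denom = 2 * tp + fp + fn
        scores[int(c)] = float(2 * tp / denom) if denom > 0 else float("nan")
    return scores
```

Plotting the returned scores against the active count would show how quickly a classifier degrades as more appliances overlap.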

Physics-informed appliance signatures generator for energy disaggregation

Jan 03, 2024

Abstract: Energy disaggregation is a promising solution to access detailed information on energy consumption in a household, by itemizing its total energy consumption. However, in real-world applications, overfitting remains a challenging problem for data-driven disaggregation methods. First, the available real-world datasets are biased towards the most frequently used appliances. Second, both real and synthetic publicly available datasets are limited in the number of appliances, which may not be sufficient for a disaggregation algorithm to learn complex relations among different types of appliances and their states. To address the lack of appliance data, we propose two physics-informed data generators: one for high sampling rate signals (kHz) and another for low sampling rate signals (Hz). These generators rely on prior knowledge of the physics of appliance energy consumption, and are capable of simulating a virtually unlimited number of different appliances and their corresponding signatures for any time period. Both methods involve defining a mathematical model, selecting centroids corresponding to individual appliances, sampling model parameters around each centroid, and finally substituting the obtained parameters into the mathematical model. Additionally, by using Principal Component Analysis and Kullback-Leibler divergence, we demonstrate that our methods significantly outperform previous approaches.
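The four generator steps named in the abstract (model, centroids, parameter sampling, substitution) can be sketched as follows for the high sampling rate case. Everything specific here is hypothetical: the harmonic-sum current model, the 50 Hz mains frequency, the two appliance centroids and their values, and the Gaussian sampling spreads are illustrative assumptions, not the paper's actual models or parameters.

```python
import numpy as np

F0 = 50.0                         # assumed mains frequency in Hz
HARMONICS = np.array([1, 3, 5])   # assumed harmonic orders in the model

# Step 2: hypothetical centroids (harmonic amplitudes in A, phases in rad).
CENTROIDS = {
    "resistive_heater": {"amp": np.array([8.0, 0.1, 0.05]),
                         "phase": np.array([0.0, 0.0, 0.0])},
    "switched_supply": {"amp": np.array([1.0, 0.7, 0.4]),
                        "phase": np.array([0.2, 2.8, 0.5])},
}

def sample_parameters(centroid, rng, rel_spread=0.05, phase_spread=0.05):
    """Step 3: draw model parameters from a Gaussian around a centroid."""
    size = centroid["amp"].shape
    amp = centroid["amp"] * (1.0 + rel_spread * rng.standard_normal(size))
    phase = centroid["phase"] + phase_spread * rng.standard_normal(size)
    return np.abs(amp), phase

def current_waveform(amp, phase, fs=10_000, n_cycles=2):
    """Steps 1 and 4: i(t) = sum_k a_k * sin(2*pi*k*F0*t + phi_k)."""
    n = int(round(fs * n_cycles / F0))
    t = np.arange(n) / fs
    angles = 2 * np.pi * HARMONICS[:, None] * F0 * t + phase[:, None]
    return np.sum(amp[:, None] * np.sin(angles), axis=0)

def generate_signatures(name, n_signatures, seed=0):
    """Generate several distinct signatures for one appliance centroid."""
    rng = np.random.default_rng(seed)
    return np.stack([
        current_waveform(*sample_parameters(CENTROIDS[name], rng))
        for _ in range(n_signatures)
    ])
```

Calling `generate_signatures("switched_supply", 5)` yields five slightly different two-cycle waveforms sampled at 10 kHz. Adding a new centroid is enough to simulate a new appliance type, which is the property the abstract highlights.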
