Henghao Zhao

VideoExpert: Augmented LLM for Temporal-Sensitive Video Understanding

Apr 10, 2025

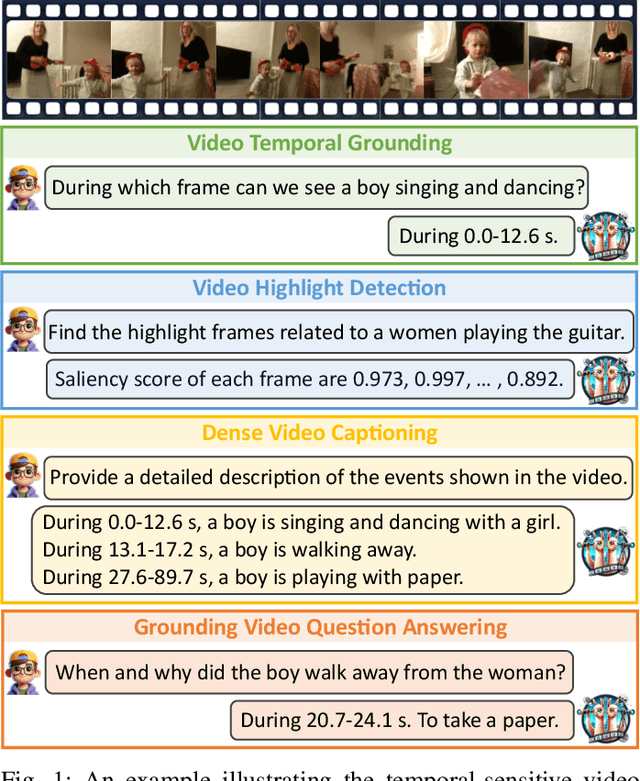

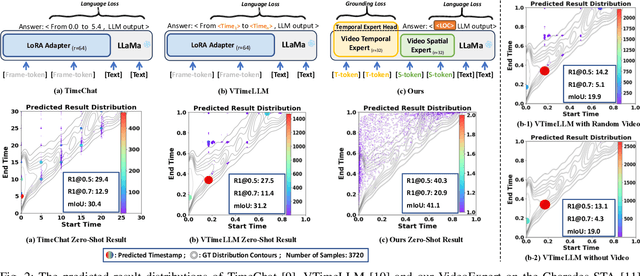

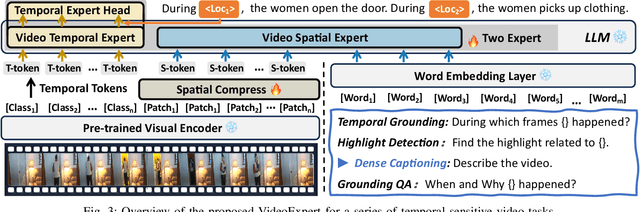

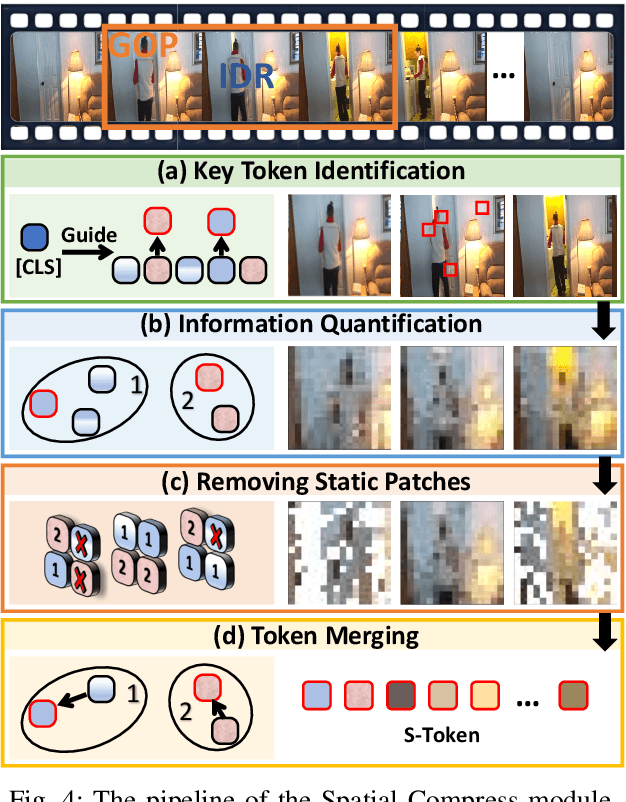

Abstract: The core challenge in video understanding lies in perceiving dynamic content changes over time. However, multimodal large language models (MLLMs) struggle with temporal-sensitive video tasks, which require generating timestamps to mark the occurrence of specific events. Existing strategies require MLLMs to generate absolute or relative timestamps directly. We have observed that those MLLMs tend to rely more on language patterns than visual cues when generating timestamps, affecting their performance. To address this problem, we propose VideoExpert, a general-purpose MLLM suitable for several temporal-sensitive video tasks. Inspired by the expert concept, VideoExpert integrates two parallel modules: the Temporal Expert and the Spatial Expert. The Temporal Expert is responsible for modeling time sequences and performing temporal grounding. It processes high-frame-rate yet compressed tokens to capture dynamic variations in videos and includes a lightweight prediction head for precise event localization. The Spatial Expert focuses on content detail analysis and instruction following. It handles specially designed spatial tokens and language input, aiming to generate content-related responses. These two experts collaborate seamlessly via a special token, ensuring coordinated temporal grounding and content generation. Notably, the Temporal and Spatial Experts maintain independent parameter sets. By offloading temporal grounding from content generation, VideoExpert prevents text pattern biases in timestamp predictions. Moreover, we introduce a Spatial Compress module to obtain spatial tokens. This module filters and compresses patch tokens while preserving key information, delivering compact yet detail-rich input for the Spatial Expert. Extensive experiments demonstrate the effectiveness and versatility of VideoExpert.

DiffusionVMR: Diffusion Model for Video Moment Retrieval

Aug 29, 2023

Abstract: Video moment retrieval is a fundamental visual-language task that aims to retrieve target moments from an untrimmed video based on a language query. Existing methods typically generate numerous proposals manually or via generative networks in advance as the support set for retrieval, which is not only inflexible but also time-consuming. Inspired by the success of diffusion models on object detection, this work aims at reformulating video moment retrieval as a denoising generation process to get rid of the inflexible and time-consuming proposal generation. To this end, we propose a novel proposal-free framework, namely DiffusionVMR, which directly samples random spans from noise as candidates and introduces denoising learning to ground target moments. During training, Gaussian noise is added to the real moments, and the model is trained to learn how to reverse this process. In inference, a set of time spans is progressively refined from the initial noise to the final output. Notably, the training and inference of DiffusionVMR are decoupled, and an arbitrary number of random spans can be used in inference without being consistent with the training phase. Extensive experiments conducted on three widely-used benchmarks (i.e., QVHighlight, Charades-STA, and TACoS) demonstrate the effectiveness of the proposed DiffusionVMR by comparing it with state-of-the-art methods.
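The noising step described in the DiffusionVMR abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the (center, width) span parameterization, the cosine noise schedule, the `scale` factor, and all function names are assumptions made for illustration, and the learned denoiser that refines spans is omitted entirely.

```python
import math
import random


def cosine_alpha_bar(t, num_steps, s=0.008):
    """Cumulative signal level at step t under a cosine schedule (assumed choice)."""
    def f(u):
        return math.cos(((u / num_steps) + s) / (1 + s) * math.pi / 2) ** 2
    return f(t) / f(0)


def noise_span(span, t, num_steps, rng, scale=2.0):
    """Add Gaussian noise to one ground-truth span (training-time forward process).

    span: hypothetical (center, width) pair, each normalized to [0, 1].
    """
    a_bar = cosine_alpha_bar(t, num_steps)
    noisy = []
    for x in span:
        x0 = (2.0 * x - 1.0) * scale            # map [0, 1] to [-scale, scale]
        eps = rng.gauss(0.0, 1.0)               # standard Gaussian noise
        xt = math.sqrt(a_bar) * x0 + math.sqrt(1.0 - a_bar) * eps
        xt = max(-scale, min(scale, xt))        # clamp to the signal range
        noisy.append((xt / scale + 1.0) / 2.0)  # map back to [0, 1]
    return tuple(noisy)


def random_spans(n, rng, scale=2.0):
    """Draw n candidate spans from pure noise (inference-time starting point).

    n does not have to match the number of spans used in training.
    """
    spans = []
    for _ in range(n):
        vals = [max(-scale, min(scale, rng.gauss(0.0, 1.0))) for _ in range(2)]
        spans.append(tuple((v / scale + 1.0) / 2.0 for v in vals))
    return spans


rng = random.Random(0)
lightly_noised = noise_span((0.4, 0.2), t=100, num_steps=1000, rng=rng)
heavily_noised = noise_span((0.4, 0.2), t=900, num_steps=1000, rng=rng)
candidates = random_spans(30, rng)
```

In this sketch, training would pair each noised span with its clean target so a model can learn the reverse mapping, while inference would start from `random_spans` and apply that model repeatedly. Because the candidates are sampled independently, their count is a free parameter at inference time, which matches the decoupling the abstract mentions.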
