Hema Maheshwari

Building a Domain-specific Guardrail Model in Production

Jul 24, 2024

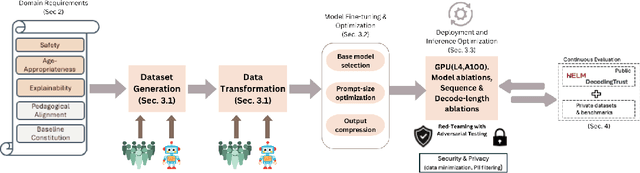

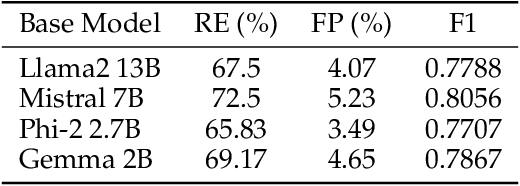

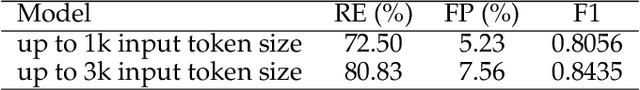

Abstract:Generative AI holds the promise of enabling a range of sought-after capabilities and revolutionizing workflows in various consumer and enterprise verticals. However, putting a model in production involves much more than just generating an output. It involves ensuring the model is reliable, safe, performant and also adheres to the policy of operation in a particular domain. Guardrails as a necessity for models has evolved around the need to enforce appropriate behavior of models, especially when they are in production. In this paper, we use education as a use case, given its stringent requirements of the appropriateness of content in the domain, to demonstrate how a guardrail model can be trained and deployed in production. Specifically, we describe our experience in building a production-grade guardrail model for a K-12 educational platform. We begin by formulating the requirements for deployment to this sensitive domain. We then describe the training and benchmarking of our domain-specific guardrail model, which outperforms competing open- and closed- instruction-tuned models of similar and larger size, on proprietary education-related benchmarks and public benchmarks related to general aspects of safety. Finally, we detail the choices we made on architecture and the optimizations for deploying this service in production; these range across the stack from the hardware infrastructure to the serving layer to language model inference optimizations. We hope this paper will be instructive to other practitioners looking to create production-grade domain-specific services based on generative AI and large language models.

Automating question generation from educational text

Sep 26, 2023Abstract:The use of question-based activities (QBAs) is wide-spread in education, traditionally forming an integral part of the learning and assessment process. In this paper, we design and evaluate an automated question generation tool for formative and summative assessment in schools. We present an expert survey of one hundred and four teachers, demonstrating the need for automated generation of QBAs, as a tool that can significantly reduce the workload of teachers and facilitate personalized learning experiences. Leveraging the recent advancements in generative AI, we then present a modular framework employing transformer based language models for automatic generation of multiple-choice questions (MCQs) from textual content. The presented solution, with distinct modules for question generation, correct answer prediction, and distractor formulation, enables us to evaluate different language models and generation techniques. Finally, we perform an extensive quantitative and qualitative evaluation, demonstrating trade-offs in the use of different techniques and models.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge