Hashan K. Weerasooriya

Ultrafast High-Flux Single-Photon LiDAR Simulator via Neural Mapping

May 29, 2025

Abstract: Efficient simulation of photon registrations in single-photon LiDAR (SPL) is essential for applications such as depth estimation under high-flux conditions, where hardware dead time significantly distorts photon measurements. However, conventional simulators are computationally intensive because of their inherently sequential, photon-by-photon processing. In this paper, we propose a learning-based framework that accelerates the simulation process by modeling the photon count and directly predicting the photon registration probability density function (PDF) using an autoencoder (AE). Our method achieves high accuracy in estimating both the total number of registered photons and their temporal distribution, while substantially reducing simulation time. Extensive experiments validate the effectiveness and efficiency of our approach, highlighting its potential to enable fast and accurate SPL simulations for data-intensive imaging tasks in the high-flux regime.

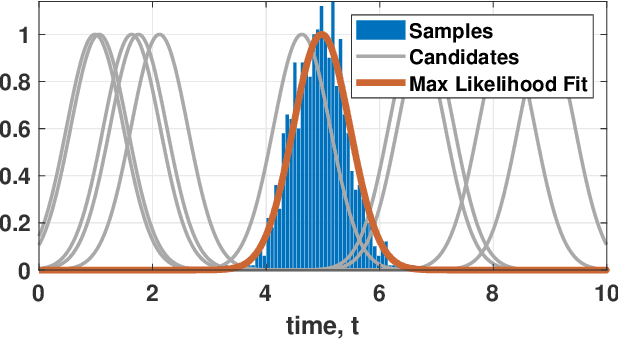
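To illustrate why photon-by-photon simulation is inherently sequential, here is a minimal sketch of a dead-time filter. It is not the simulator from the paper: the function names `simulate_arrivals` and `register_with_dead_time`, the constant-rate Poisson arrival model, and the non-paralyzable dead-time model are all assumptions chosen for illustration.

```python
import random


def simulate_arrivals(rate, duration, rng):
    """Draw photon arrival times on [0, duration) from a homogeneous
    Poisson process (an assumed, simplified flux model)."""
    t, arrivals = 0.0, []
    while True:
        t += rng.expovariate(rate)  # exponential gap between arrivals
        if t >= duration:
            return arrivals
        arrivals.append(t)


def register_with_dead_time(arrivals, dead_time):
    """Keep only the photons a detector with a non-paralyzable dead time
    would register. Each decision depends on the previous registration,
    so the loop cannot be trivially parallelized."""
    registered = []
    ready_at = float("-inf")  # time at which the detector is active again
    for t in arrivals:  # arrivals must be sorted in time
        if t >= ready_at:
            registered.append(t)
            ready_at = t + dead_time
    return registered


if __name__ == "__main__":
    rng = random.Random(0)
    arrivals = simulate_arrivals(rate=5.0, duration=100.0, rng=rng)
    registered = register_with_dead_time(arrivals, dead_time=1.0)
    print(len(arrivals), "arrivals,", len(registered), "registered")
```

At high flux, most arrivals fall inside a dead-time window and are dropped, which is the distortion the abstract refers to. The cost of this loop grows with the number of incident photons, which is the bottleneck a learned mapping would aim to bypass.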

Joint Depth and Reflectivity Estimation using Single-Photon LiDAR

May 19, 2025

Abstract: Single-Photon Light Detection and Ranging (SP-LiDAR) is emerging as a leading technology for long-range, high-precision 3D vision tasks. In SP-LiDAR, timestamps encode two complementary pieces of information: pulse travel time (depth) and the number of photons reflected by the object (reflectivity). Existing SP-LiDAR reconstruction methods typically recover depth and reflectivity separately, or sequentially, using one modality to estimate the other. Moreover, the conventional 3D histogram construction is effective mainly for slow-moving or stationary scenes. In dynamic scenes, however, it is more efficient and effective to directly process the timestamps. In this paper, we introduce an estimation method to simultaneously recover both depth and reflectivity in fast-moving scenes. We offer two contributions: (1) A theoretical analysis demonstrating the mutual correlation between depth and reflectivity and the conditions under which joint estimation becomes beneficial. (2) A novel reconstruction method, "SPLiDER", which exploits the shared information to enhance signal recovery. On both synthetic and real SP-LiDAR data, our method outperforms existing approaches, achieving superior joint reconstruction quality.

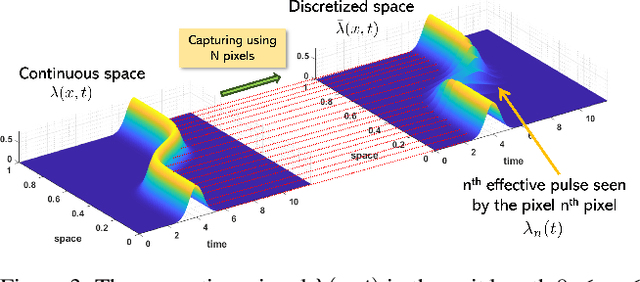

Parametric Modeling and Estimation of Photon Registrations for 3D Imaging

Jul 02, 2024

Abstract: In single-photon light detection and ranging (SP-LiDAR) systems, the histogram distortion due to hardware dead time fundamentally limits the precision of depth estimation. To compensate for the dead time effects, the photon registration distribution is typically modeled based on the Markov chain self-excitation process. However, this is a discrete process and it is computationally expensive, thus hindering potential neural network applications and fast simulations. In this paper, we overcome the modeling challenge by proposing a continuous parametric model. We introduce a Gaussian-uniform mixture model (GUMM) and periodic padding to address high noise floors and noise slopes, respectively. By deriving and implementing a customized expectation maximization (EM) algorithm, we achieve accurate histogram matching in scenarios that were deemed difficult in the literature.

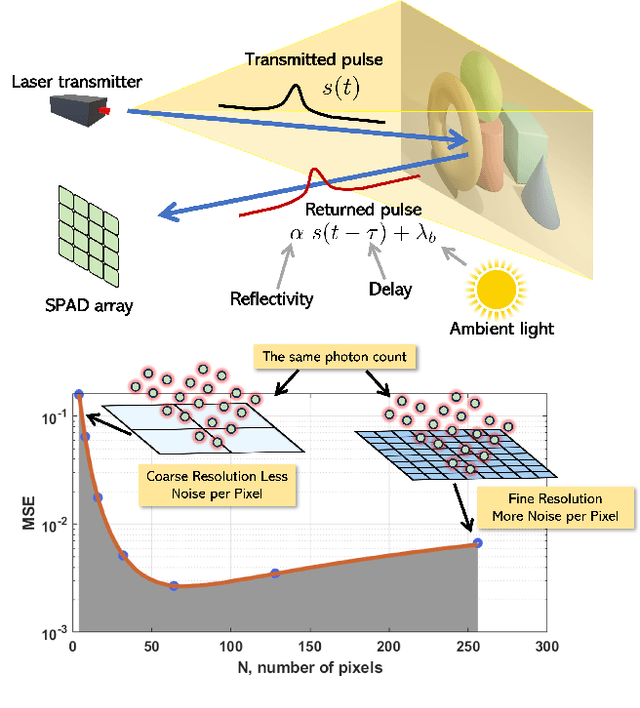
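As a rough illustration of the Gaussian-uniform mixture idea, the sketch below fits a single Gaussian pulse plus a flat noise floor to a list of timestamps with textbook EM. It is not the customized EM algorithm from the paper and it omits periodic padding entirely; the function name `fit_gaussian_uniform`, the histogram-mode initialization, and the parameters `iters` and `bins` are assumptions made for this example.

```python
import math
import random


def fit_gaussian_uniform(times, period, iters=100, bins=50):
    """Fit w * N(mu, sigma^2) + (1 - w) * Uniform(0, period) by plain EM.

    Returns (w, mu, sigma). Generic textbook EM, not the paper's variant.
    """
    n = len(times)
    # Initialize mu at the mode of a coarse histogram (assumed heuristic).
    counts = [0] * bins
    for t in times:
        counts[min(int(t / period * bins), bins - 1)] += 1
    peak = max(range(bins), key=counts.__getitem__)
    mu = (peak + 0.5) * period / bins
    sigma = period / 20.0
    w = 0.5
    uniform_pdf = 1.0 / period
    for _ in range(iters):
        # E-step: probability that each timestamp came from the Gaussian.
        norm = sigma * math.sqrt(2.0 * math.pi)
        resp = []
        for t in times:
            g = w * math.exp(-0.5 * ((t - mu) / sigma) ** 2) / norm
            resp.append(g / (g + (1.0 - w) * uniform_pdf))
        # M-step: responsibility-weighted updates of weight, mean, spread.
        total = max(sum(resp), 1e-12)
        w = total / n
        mu = sum(r * t for r, t in zip(resp, times)) / total
        var = sum(r * (t - mu) ** 2 for r, t in zip(resp, times)) / total
        sigma = max(math.sqrt(var), 1e-6 * period)  # keep sigma positive
    return w, mu, sigma


if __name__ == "__main__":
    rng = random.Random(0)
    period = 100.0
    # Synthetic data: 60% signal near t = 30, 40% uniform background.
    times = [
        min(max(rng.gauss(30.0, 2.0), 0.0), period)
        if rng.random() < 0.6
        else rng.uniform(0.0, period)
        for _ in range(2000)
    ]
    print(fit_gaussian_uniform(times, period))
```

The uniform component absorbs the noise floor so that the Gaussian mean tracks the pulse position rather than being pulled toward the middle of the timing window. A flat background is only an approximation when dead time produces a sloped noise floor, which is the case the abstract says periodic padding is meant to handle.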

Resolution Limit of Single-Photon LiDAR

Mar 31, 2024

Abstract: Single-photon Light Detection and Ranging (LiDAR) systems are often equipped with an array of detectors for improved spatial resolution and sensing speed. However, given a fixed amount of flux produced by the laser transmitter across the scene, the per-pixel Signal-to-Noise Ratio (SNR) will decrease when more pixels are packed in a unit space. This presents a fundamental trade-off between the spatial resolution of the sensor array and the SNR received at each pixel. Theoretical characterization of this fundamental limit is explored. By deriving the photon arrival statistics and introducing a series of new approximation techniques, the Mean Squared Error (MSE) of the maximum-likelihood estimator of the time delay is derived. The theoretical predictions align well with simulations and real data.
