Harsharaj Pathak

Source-Free Domain Adaptation by Optimizing Batch-Wise Cosine Similarity

Jan 24, 2026

Abstract: Source-Free Domain Adaptation (SFDA) is an emerging area of research that aims to adapt a model trained on a labeled source domain to an unlabeled target domain without accessing the source data. Most of the successful methods in this area rely on the concept of neighborhood consistency but are prone to errors due to misleading neighborhood information. In this paper, we explore this approach from the point of view of learning more informative clusters and mitigating the effect of noisy neighbors using a concept called neighborhood signature, and demonstrate that adaptation can be achieved using just a single loss term tailored to optimize the similarity and dissimilarity of predictions of samples in the target domain. In particular, our proposed method outperforms existing methods on the challenging VisDA dataset while also yielding competitive results on other benchmark datasets.

Learning Causal Attributions in Neural Networks: Beyond Direct Effects

Mar 24, 2023

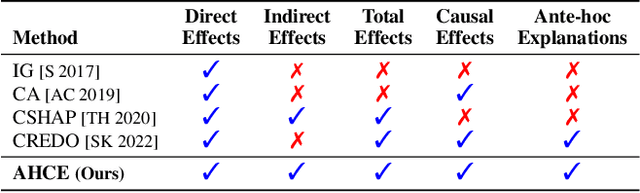

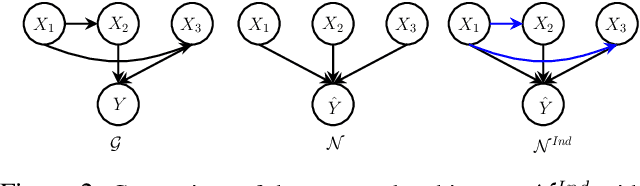

Abstract: There has been a growing interest in capturing and maintaining causal relationships in Neural Network (NN) models in recent years. We study causal approaches to estimate and maintain input-output attributions in NN models in this work. In particular, existing efforts in this direction assume independence among input variables (by virtue of the NN architecture), and hence study only direct causal effects. Viewing an NN as a structural causal model (SCM), we instead focus on going beyond direct effects, introduce edges among input features, and provide a simple yet effective methodology to capture and maintain direct and indirect causal effects while training an NN model. We also propose effective approximation strategies to quantify causal attributions in high-dimensional data. Our wide range of experiments on synthetic and real-world datasets show that the proposed ante-hoc method learns causal attributions for both direct and indirect causal effects close to the ground truth effects.
