Hardik Dalal

Robust Federated Finetuning of Foundation Models via Alternating Minimization of LoRA

Sep 04, 2024

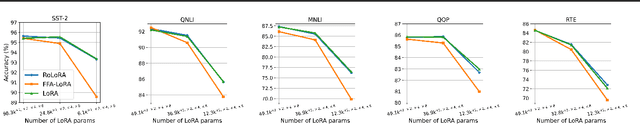

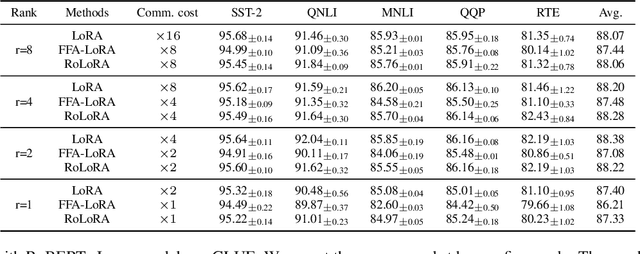

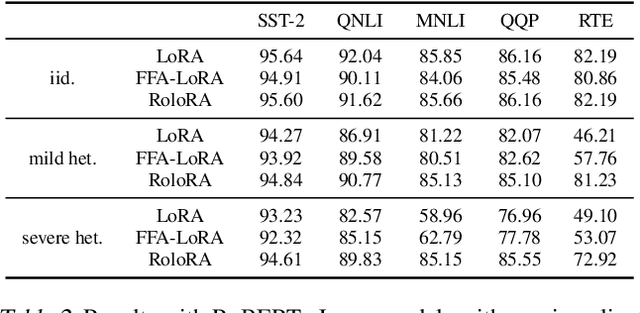

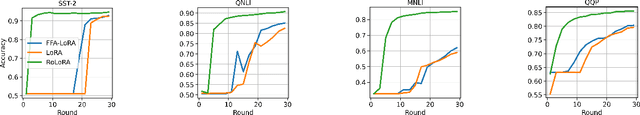

Abstract: Parameter-Efficient Fine-Tuning (PEFT) has emerged as a training strategy that updates only a small subset of model parameters, significantly lowering both computational and memory demands. PEFT also reduces data transfer in federated learning, where communication cost depends on the size of the updates. In this work, we examine the limitations of previous studies that integrate a well-known PEFT method, LoRA, with federated fine-tuning. We then introduce RoLoRA, a robust federated fine-tuning framework that uses an alternating minimization approach for LoRA, providing greater robustness to a decreasing number of fine-tuning parameters and to increasing data heterogeneity. Our results indicate that RoLoRA not only retains the communication benefits but also substantially improves robustness and effectiveness across multiple federated fine-tuning scenarios.
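To make the idea of alternating minimization for LoRA in a federated setting concrete, here is a minimal toy sketch in NumPy. It is not the paper's implementation: the linear-regression model, the function names (`local_step`, `federated_alternating_lora`), the random initialization of both factors, and all hyperparameters are assumptions chosen for illustration. The frozen weight `W0` is adapted by a low-rank product `B @ A`. In each round the clients update only one factor while the other stays frozen, and the server averages only that factor.

```python
import numpy as np

def local_step(W0, A, B, X, Y, update, lr=0.05, steps=5):
    """A few gradient steps on one client, updating only A or only B."""
    A, B = A.copy(), B.copy()
    n = X.shape[0]
    for _ in range(steps):
        # Residual of the adapted linear model Y ~ X (W0 + B A)^T
        R = X @ (W0 + B @ A).T - Y      # shape (n, d_out)
        G = R.T @ X / n                 # gradient w.r.t. the product B A
        if update == "A":
            A -= lr * (B.T @ G)         # chain rule: dL/dA = B^T G
        else:
            B -= lr * (G @ A.T)         # chain rule: dL/dB = G A^T
    return A, B

def federated_alternating_lora(W0, clients, rank=2, rounds=20, seed=0):
    """Alternate which LoRA factor is trained and averaged each round."""
    rng = np.random.default_rng(seed)
    d_out, d_in = W0.shape
    # Assumption: both factors start small and random (toy choice).
    A = rng.normal(scale=0.1, size=(rank, d_in))
    B = rng.normal(scale=0.1, size=(d_out, rank))
    for t in range(rounds):
        update = "A" if t % 2 == 0 else "B"
        results = [local_step(W0, A, B, X, Y, update) for X, Y in clients]
        if update == "A":
            # B is identical on every client, so averaging A is exact:
            # mean_i(B @ A_i) == B @ mean_i(A_i)
            A = np.mean([a for a, _ in results], axis=0)
        else:
            B = np.mean([b for _, b in results], axis=0)
    return A, B
```

The design point the sketch illustrates is that when one factor is shared and frozen, averaging the other factor is the same as averaging the full low-rank update. Averaging both factors independently in the same round does not have this property in general.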

Adaptive Learning for Service Monitoring Data

Aug 25, 2022

Abstract: Service monitoring applications continuously produce data to monitor their availability. Hence, it is critical to classify incoming data accurately and in real time. For this purpose, our study develops an adaptive classification approach using Learn++ that can handle evolving data distributions. This approach sequentially predicts on new data and then updates the monitoring model with it, gradually forgets past knowledge, and identifies sudden concept drift. We employ consecutive data chunks obtained from an industrial application to evaluate the performance of the predictors incrementally.
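The predict-then-update loop over consecutive data chunks can be sketched as follows. This is not Learn++ itself: a simple nearest-centroid classifier with exponential forgetting stands in for the ensemble, and the class name `ForgettingCentroidClassifier`, the function `run_prequential`, the `decay` parameter, and the `drift_jump` threshold are all hypothetical names and values chosen for illustration.

```python
from collections import defaultdict

class ForgettingCentroidClassifier:
    """Nearest-centroid classifier whose class means decay toward new data."""

    def __init__(self, decay=0.5):
        self.decay = decay      # weight kept for old knowledge on each update
        self.centroids = {}     # label -> list of feature means

    def predict(self, x):
        if not self.centroids:
            return None
        def dist(c):
            return sum((a - b) ** 2 for a, b in zip(x, c))
        return min(self.centroids, key=lambda lbl: dist(self.centroids[lbl]))

    def update(self, X, y):
        groups = defaultdict(list)
        for x, lbl in zip(X, y):
            groups[lbl].append(x)
        for lbl, rows in groups.items():
            mean = [sum(col) / len(rows) for col in zip(*rows)]
            old = self.centroids.get(lbl)
            if old is None:
                self.centroids[lbl] = mean
            else:
                # Blend old and new means so past chunks fade gradually.
                self.centroids[lbl] = [
                    self.decay * o + (1 - self.decay) * m
                    for o, m in zip(old, mean)
                ]

def run_prequential(chunks, model, drift_jump=0.3):
    """Test on each chunk first, then train on it; flag sudden error jumps."""
    errors, drift_at = [], []
    for i, (X, y) in enumerate(chunks):
        if model.centroids:  # the first chunk is used for training only
            wrong = sum(model.predict(x) != lbl for x, lbl in zip(X, y))
            err = wrong / len(y)
            if errors and err - errors[-1] > drift_jump:
                drift_at.append(i)  # error rose sharply: possible drift
            errors.append(err)
        model.update(X, y)
    return errors, drift_at
```

Evaluating on each chunk before training on it keeps the error estimate honest, because the model is always scored on data it has not yet seen. The jump threshold is a crude stand-in for a proper drift detector.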
