Hao Zhuo

Whence Is A Model Fair? Fixing Fairness Bugs via Propensity Score Matching

Apr 23, 2025

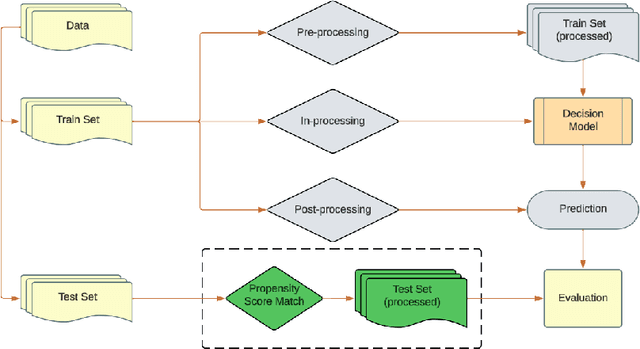

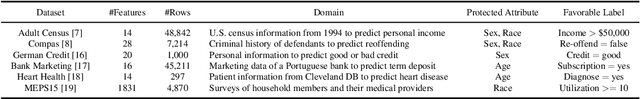

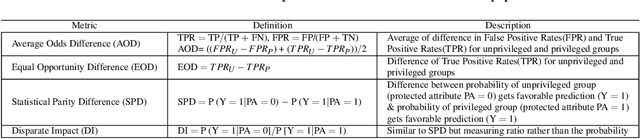

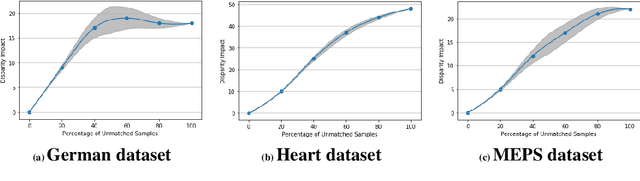

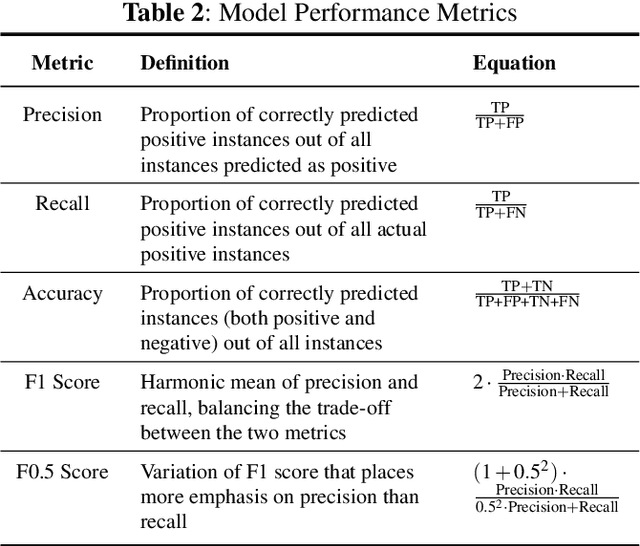

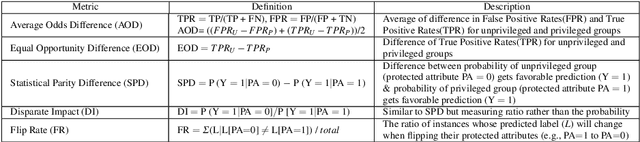

Abstract: Fairness-aware learning aims to mitigate discrimination against specific protected social groups (e.g., those categorized by gender, ethnicity, age) while minimizing predictive performance loss. Despite efforts to improve fairness in machine learning, prior studies have shown that many models remain unfair when measured against various fairness metrics. In this paper, we examine whether the way training and testing data are sampled affects the reliability of reported fairness metrics. Since training and test sets are often randomly sampled from the same population, bias present in the training data may still exist in the test data, potentially skewing fairness assessments. To address this, we propose FairMatch, a post-processing method that applies propensity score matching to evaluate and mitigate bias. FairMatch identifies control and treatment pairs with similar propensity scores in the test set and adjusts decision thresholds for different subgroups accordingly. For samples that cannot be matched, we perform probabilistic calibration using fairness-aware loss functions. Experimental results demonstrate that our approach can (a) precisely locate subsets of the test data where the model is unbiased, and (b) significantly reduce bias on the remaining data. Overall, propensity score matching offers a principled way to improve both fairness evaluation and mitigation, without sacrificing predictive performance.
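The matching step described in the abstract can be illustrated with a minimal sketch. This is not the FairMatch implementation: it assumes propensity scores have already been estimated, and it uses greedy one-to-one nearest-neighbour matching with a caliper, a common textbook variant. The function name `match_pairs`, the `caliper` parameter, and the sample IDs are hypothetical.

```python
def match_pairs(treated, control, caliper=0.05):
    """Greedy 1:1 nearest-neighbour matching on precomputed propensity scores.

    treated, control: dicts mapping a sample id to its propensity score.
    Returns (pairs, unmatched_treated). The caliper is an assumed tolerance,
    not a value taken from the paper.
    """
    available = dict(control)  # controls not yet used in a pair
    pairs, unmatched = [], []
    # Visit treated samples in order of increasing score.
    for t_id, t_score in sorted(treated.items(), key=lambda kv: kv[1]):
        if not available:
            unmatched.append(t_id)
            continue
        # Closest remaining control sample by absolute score difference.
        c_id = min(available, key=lambda c: abs(available[c] - t_score))
        if abs(available[c_id] - t_score) <= caliper:
            pairs.append((t_id, c_id))
            del available[c_id]  # each control is matched at most once
        else:
            unmatched.append(t_id)
    return pairs, unmatched


# Hypothetical scores for illustration only.
treated = {"t1": 0.30, "t2": 0.62, "t3": 0.95}
control = {"c1": 0.31, "c2": 0.60, "c3": 0.10}
pairs, unmatched = match_pairs(treated, control)
```

In this toy input, `t3` has no control within the caliper, so it would fall into the unmatched set that the abstract says is handled separately by calibration.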

Combating Toxic Language: A Review of LLM-Based Strategies for Software Engineering

Apr 21, 2025

Abstract: Large Language Models (LLMs) have become integral to software engineering (SE), where they are increasingly used in development workflows. However, their widespread use raises concerns about the presence and propagation of toxic language, that is, harmful or offensive content that can foster exclusionary environments. This paper provides a comprehensive review of recent research on toxicity detection and mitigation, focusing on both SE-specific and general-purpose datasets. We examine annotation and preprocessing techniques, assess detection methodologies, and evaluate mitigation strategies, particularly those leveraging LLMs. Additionally, we conduct an ablation study demonstrating the effectiveness of LLM-based rewriting for reducing toxicity. By synthesizing existing work and identifying open challenges, this review highlights key areas for future research to ensure the responsible deployment of LLMs in SE and beyond.

Software Engineering Principles for Fairer Systems: Experiments with GroupCART

Apr 17, 2025

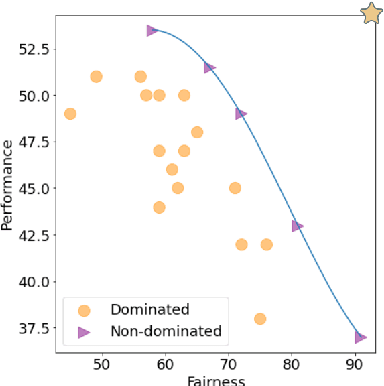

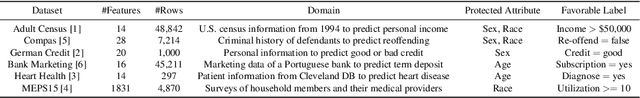

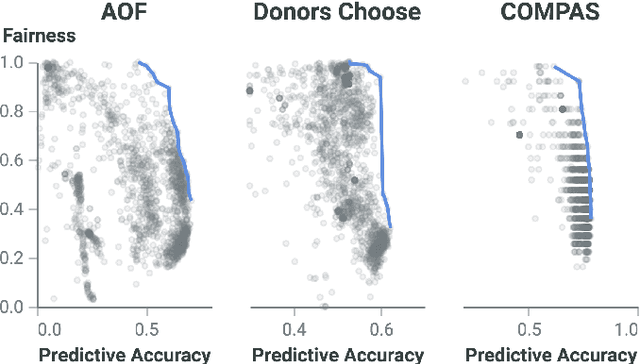

Abstract: Discrimination-aware classification aims to make accurate predictions while satisfying fairness constraints. Traditional decision tree learners typically optimize for information gain in the target attribute alone, which can result in models that unfairly discriminate against protected social groups (e.g., gender, ethnicity). Motivated by these shortcomings, we propose GroupCART, a tree-based ensemble optimizer that avoids bias during model construction by optimizing not only for decreased entropy in the target attribute but also for increased entropy in protected attributes. Our experiments show that GroupCART achieves fairer models without data transformation and with minimal performance degradation. Furthermore, the method supports customizable weighting, offering a smooth and flexible trade-off between predictive performance and fairness based on user requirements. These results demonstrate that algorithmic bias in decision tree models can be mitigated through multi-task, fairness-aware learning. All code and datasets used in this study are available at: https://github.com/anonymous12138/groupCART.
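One way to read the split criterion described in the abstract is as a weighted score that rewards information gain on the target and penalises information gain on the protected attribute. The sketch below is an illustration under that assumption, not the GroupCART code from the linked repository; the function `fair_split_score`, the linear weighting, and the `weight` parameter are hypothetical.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of discrete labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values()) if n else 0.0


def info_gain(parent, left, right):
    """Entropy decrease from splitting a non-empty parent into left and right."""
    n = len(parent)
    return (entropy(parent)
            - (len(left) / n) * entropy(left)
            - (len(right) / n) * entropy(right))


def fair_split_score(y, s, mask, weight=0.5):
    """Score a candidate split; higher is better.

    y: target labels, s: protected-attribute values, mask[i] True sends
    sample i to the left child. The linear combination is an assumed form:
    it favours splits that separate y but keep s mixed in both children.
    """
    def split(values):
        left = [v for v, m in zip(values, mask) if m]
        right = [v for v, m in zip(values, mask) if not m]
        return left, right

    gain_y = info_gain(y, *split(y))  # want this large
    gain_s = info_gain(s, *split(s))  # want this small
    return weight * gain_y - (1 - weight) * gain_s


# Toy data for illustration only.
y = [1, 1, 0, 0]
s = ["a", "b", "a", "b"]
good = fair_split_score(y, s, [True, True, False, False])   # separates y, not s
bad = fair_split_score(y, s, [True, False, True, False])    # separates s, not y
```

Setting `weight` closer to 1 recovers an ordinary information-gain split, while lower values put more emphasis on keeping the protected attribute uninformative, which mirrors the customizable trade-off mentioned in the abstract.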
