Hanhui Deng

FedGAI: Federated Style Learning with Cloud-Edge Collaboration for Generative AI in Fashion Design

Mar 16, 2025

Abstract:Collaboration can amalgamate diverse ideas, styles, and visual elements, fostering creativity and innovation among different designers. In collaborative design, sketches play a pivotal role as a means of expressing design creativity. However, designers often tend to not openly share these meticulously crafted sketches. This phenomenon of data island in the design area hinders its digital transformation under the third wave of AI. In this paper, we introduce a Federated Generative Artificial Intelligence Clothing system, namely FedGAI, employing federated learning to aid in sketch design. FedGAI is committed to establishing an ecosystem wherein designers can exchange sketch styles among themselves. Through FedGAI, designers can generate sketches that incorporate various designers' styles from their peers, drawing inspiration from collaboration without the need for data disclosure or upload. Extensive performance evaluations indicate that our FedGAI system can produce multi-styled sketches of comparable quality to human-designed ones while significantly enhancing efficiency compared to hand-drawn sketches.

BRIEDGE: EEG-Adaptive Edge AI for Multi-Brain to Multi-Robot Interaction

Mar 14, 2024

Abstract:Recent advances in EEG-based BCI technologies have revealed the potential of brain-to-robot collaboration through the integration of sensing, computing, communication, and control. In this paper, we present BRIEDGE as an end-to-end system for multi-brain to multi-robot interaction through an EEG-adaptive neural network and an encoding-decoding communication framework, as illustrated in Fig.1. As depicted, the edge mobile server or edge portable server will collect EEG data from the users and utilize the EEG-adaptive neural network to identify the users' intentions. The encoding-decoding communication framework then encodes the EEG-based semantic information and decodes it into commands in the process of data transmission. To better extract the joint features of heterogeneous EEG data as well as enhance classification accuracy, BRIEDGE introduces an informer-based ProbSparse self-attention mechanism. Meanwhile, parallel and secure transmissions for multi-user multi-task scenarios under physical channels are addressed by dynamic autoencoder and autodecoder communications. From mobile computing and edge AI perspectives, model compression schemes composed of pruning, weight sharing, and quantization are also used to deploy lightweight EEG-adaptive models running on both transmitter and receiver sides. Based on the effectiveness of these components, a code map representing various commands enables multiple users to control multiple intelligent agents concurrently. Our experiments in comparison with state-of-the-art works show that BRIEDGE achieves the best classification accuracy of heterogeneous EEG data, and more stable performance under noisy environments.

Attend Who is Weak: Enhancing Graph Condensation via Cross-Free Adversarial Training

Nov 27, 2023

Abstract:In this paper, we study the graph condensation problem by compressing the large, complex graph into a concise, synthetic representation that preserves the most essential and discriminative information of structure and features. We seminally propose the concept of Shock Absorber (a type of perturbation) that enhances the robustness and stability of the original graphs against changes in an adversarial training fashion. Concretely, (I) we forcibly match the gradients between pre-selected graph neural networks (GNNs) trained on a synthetic, simplified graph and the original training graph at regularly spaced intervals. (II) Before each update of the synthetic graph, a Shock Absorber serves as a gradient attacker to maximize the distance between the synthetic dataset and the original graph by selectively perturbing the parts that are underrepresented or insufficiently informative. We iteratively repeat the above two processes (I and II) in an adversarial training fashion to maintain the highly informative context without losing correlation with the original dataset. More importantly, our Shock Absorber and the synthesized graph share the backward process in parallel in a free training manner. Compared to the original adversarial training, it introduces almost no additional time overhead. We validate our framework across 8 datasets (3 graph and 5 node classification datasets) and achieve prominent results: for example, on Cora, Citeseer and Ogbn-Arxiv, we can gain nearly 1.13% to 5.03% improvements compared with SOTA models. Moreover, our algorithm adds only about 0.2% to 2.2% additional time overhead over Flickr, Citeseer and Ogbn-Arxiv. Compared to the general adversarial training, our approach improves time efficiency by nearly 4-fold.

Software-Defined Edge Computing: A New Architecture Paradigm to Support IoT Data Analysis

Apr 26, 2021

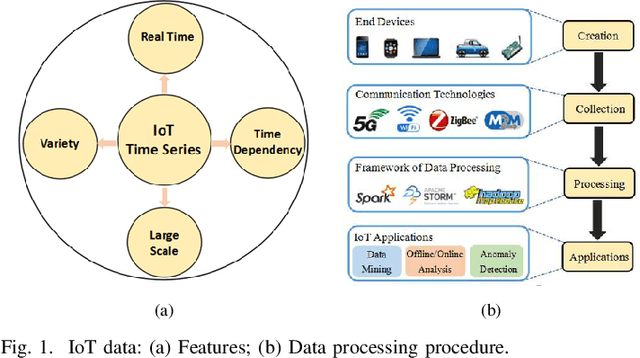

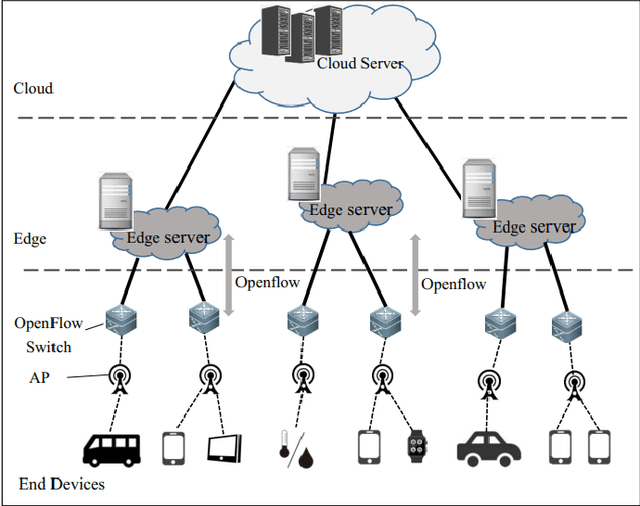

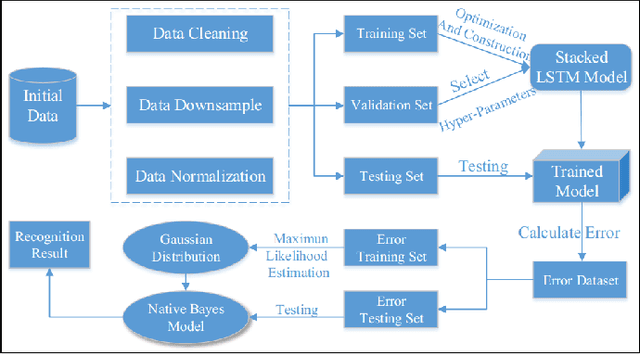

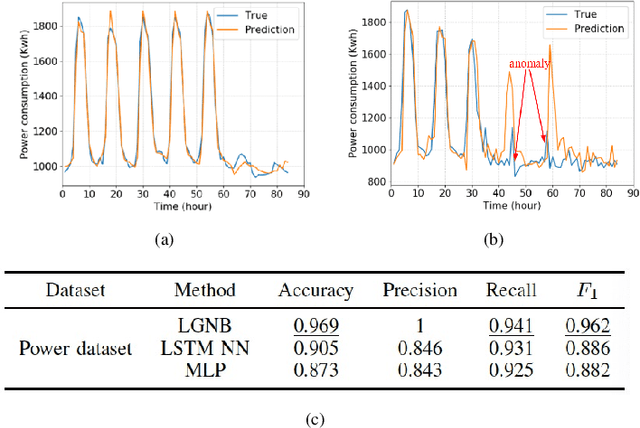

Abstract:The rapid deployment of Internet of Things (IoT) applications leads to massive data that need to be processed. These IoT applications have specific communication requirements on latency and bandwidth, and present new features on their generated data such as time-dependency. Therefore, it is desirable to reshape the current IoT architectures by exploring their inherent nature of communication and computing to support smart IoT data processing and analysis. We introduce in this paper features of IoT data, trends of IoT network architectures, some problems in IoT data analysis, and their solutions. Specifically, we view software-defined edge computing as a promising architecture to support the unique needs of IoT data analysis. We further present an experiment on data anomaly detection in this architecture, and the comparison between two architectures for ECG diagnosis. Results show that our method is effective and feasible.
