Hangtong Xu

DPR: An Algorithm Mitigate Bias Accumulation in Recommendation feedback loops

Nov 10, 2023

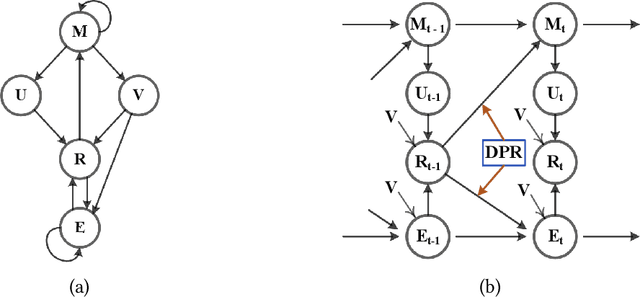

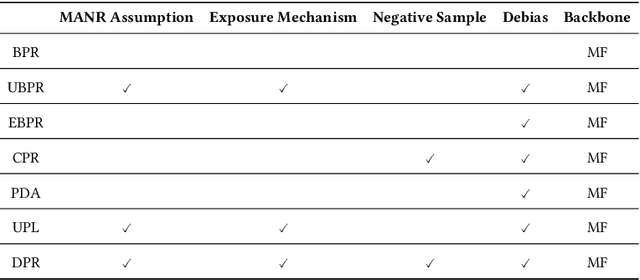

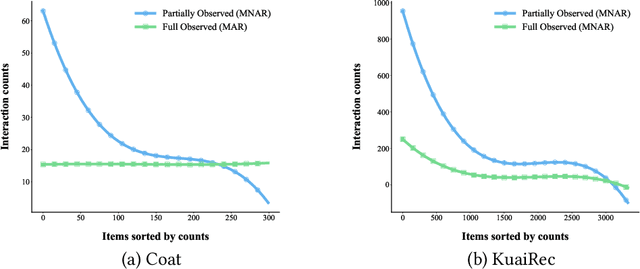

Abstract: Recommendation models trained on user feedback collected from deployed recommendation systems are commonly biased. User feedback is strongly affected by the exposure mechanism: users only provide feedback on the items exposed to them and passively ignore the unexposed items, which produces numerous false negative samples. Inevitably, the biases caused by such feedback are inherited by new models and amplified via feedback loops. Moreover, the presence of false negative samples makes negative sampling difficult and introduces spurious information into the model's user preference modeling. Recent work has investigated the negative impact of feedback loops and unknown exposure mechanisms on recommendation quality and user experience, but essentially treats them as independent factors and ignores their cross-effects. To address these issues, we analyze the data exposure mechanism in depth from the perspective of data iteration and feedback loops under the Missing Not At Random (MNAR) assumption, and theoretically demonstrate the existence of an available stabilization factor in the transformation of the exposure mechanism under feedback loops. We further propose Dynamic Personalized Ranking (DPR), an unbiased algorithm that uses dynamic re-weighting to mitigate the cross-effects of exposure mechanisms and feedback loops without additional information. Furthermore, we design a plugin named Universal Anti-False Negative (UFN) to mitigate the negative impact of the false negative problem. We demonstrate theoretically that our approach mitigates the negative effects of feedback loops and unknown exposure mechanisms. Experimental results on real-world datasets demonstrate that models using DPR handle bias accumulation better, and confirm the universality of UFN across mainstream loss methods.

Separating and Learning Latent Confounders to Enhancing User Preferences Modeling

Nov 02, 2023

Abstract: Recommender models aim to capture user preferences from historical feedback and then predict user-specific feedback on candidate items. However, the presence of various unmeasured confounders causes deviations between the user preferences in the historical feedback and the true preferences, so models fall short of their expected performance. Existing debiasing models either (1) address only one particular bias or (2) directly obtain auxiliary information from users' historical feedback, and therefore cannot identify whether the learned preferences are true user preferences or are mixed with unmeasured confounders. Moreover, we find that the former recommender system is not only a successor to unmeasured confounders but also acts as an unmeasured confounder affecting user preference modeling, which has always been neglected in previous studies. To this end, we incorporate the effect of the former recommender system and treat it as a proxy for all unmeasured confounders. We propose a novel framework, Separating and Learning Latent Confounders For Recommendation (SLFR), which obtains the representation of unmeasured confounders to identify the counterfactual feedback by disentangling user preferences and unmeasured confounders, and then guides the target model to capture the true preferences of users. Extensive experiments on five real-world datasets validate the advantages of our method.

Causal Structure Representation Learning of Confounders in Latent Space for Recommendation

Nov 02, 2023

Abstract: Inferring user preferences from the historical feedback of users is a valuable problem in recommender systems. Conventional approaches often rely on the assumption that user preferences in the feedback data are equivalent to the real user preferences without additional noise, which simplifies the problem modeling. However, various confounders are present during user-item interactions, such as weather and even the recommendation system itself. Neglecting the influence of confounders therefore results in inaccurate user preferences and suboptimal model performance. Furthermore, because confounders are unobservable, the problem is difficult to address directly. To address these issues, we refine the problem and propose a more rational solution. Specifically, we consider the influence of confounders, disentangle them from user preferences in the latent space, and employ causal graphs to model their interdependencies without specific labels. By combining local and global causal graphs, we capture the user-specificity of confounders on user preferences. We theoretically demonstrate the identifiability of the obtained causal graph. Finally, we propose our model based on Variational Autoencoders, named Causal Structure representation learning of Confounders in latent space (CSC). We conducted extensive experiments on one synthetic dataset and five real-world datasets, demonstrating the superiority of our model. Furthermore, we demonstrate that the learned causal representations of confounders are controllable, potentially offering users fine-grained control over the objectives of their recommendation lists with the learned causal graphs.
