Hamidreza Rabiee

Enhancing the Prediction of Emotional Experience in Movies using Deep Neural Networks: The Significance of Audio and Language

Jun 17, 2023

Abstract: Our paper focuses on using deep neural network models to accurately predict the range of human emotions experienced while watching movies. In this setup, three distinct input modalities considerably influence the experienced emotions: visual cues derived from RGB video frames; auditory components, encompassing sounds, speech, and music; and linguistic elements, encompassing actors' dialogues. Emotions are commonly described using a two-factor model consisting of valence (ranging from happy to sad) and arousal (indicating the intensity of the emotion). A plethora of works have presented models aiming to predict valence and arousal from video content. However, none of these models incorporates all three modalities; language, in particular, is consistently omitted. In this study, we comprehensively combine all three modalities and analyze the importance of each in predicting valence and arousal. We represent each input modality using pre-trained neural networks. To process visual input, we employ pre-trained convolutional neural networks that recognize scenes[1], objects[2], and actions[3,4]. For audio processing, we use SoundNet[5], a neural network designed specifically for sound-related tasks. Finally, we use Bidirectional Encoder Representations from Transformers (BERT) models to extract linguistic features[6]. We report results on the COGNIMUSE dataset[7], where our proposed model outperforms current state-of-the-art approaches. Surprisingly, our findings reveal that language significantly influences experienced arousal, while sound emerges as the primary determinant of valence. In contrast, the visual modality has the least impact of all modalities in predicting emotions.
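
The abstract does not say how the importance of each modality is measured. One common approach, assumed here rather than taken from the paper, is a leave-one-modality-out ablation: fuse the per-modality feature vectors by concatenation, zero out one modality at a time, and record how much a trained regressor's error grows. The sketch below is plain Python; the modality names, the `model` callable, and the toy features are hypothetical stand-ins for the scene/object/action CNN, SoundNet, and BERT features, and it would be run once per target (valence, then arousal).

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical modality names; the actual feature extractors
# (scene/object/action CNNs, SoundNet, BERT) are not reproduced here.
MODALITIES = ("visual", "audio", "language")

Features = Dict[str, List[float]]
Model = Callable[[List[float]], float]  # fused vector -> predicted valence (or arousal)


def fuse(features: Features, order: Sequence[str] = MODALITIES) -> List[float]:
    """Early fusion: concatenate per-modality feature vectors in a fixed order."""
    fused: List[float] = []
    for name in order:
        fused.extend(features[name])
    return fused


def mask_modality(features: Features, drop: str) -> Features:
    """Return a copy with one modality replaced by zeros of the same length."""
    return {
        name: [0.0] * len(vec) if name == drop else list(vec)
        for name, vec in features.items()
    }


def mse(preds: Sequence[float], targets: Sequence[float]) -> float:
    """Mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)


def modality_importance(
    model: Model,
    samples: Sequence[Features],
    targets: Sequence[float],
    order: Sequence[str] = MODALITIES,
) -> Dict[str, float]:
    """Increase in MSE when each modality is zeroed out (larger = more important)."""
    baseline = mse([model(fuse(s, order)) for s in samples], targets)
    return {
        name: mse([model(fuse(mask_modality(s, name), order)) for s in samples], targets)
        - baseline
        for name in order
    }


def toy_model(x: List[float]) -> float:
    """Toy 'trained' regressor that only reads the audio slot (index 1)."""
    return 2.0 * x[1]


if __name__ == "__main__":
    samples = [
        {"visual": [0.3], "audio": [0.5], "language": [0.1]},
        {"visual": [0.9], "audio": [-0.4], "language": [0.7]},
    ]
    targets = [1.0, -0.8]
    print(modality_importance(toy_model, samples, targets))
```

Zeroing is only one masking choice; replacing a modality with its training-set mean, or retraining the model without it, are common alternatives and can produce different rankings.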

Emotion-Based Crowd Representation for Abnormality Detection

Jul 26, 2016

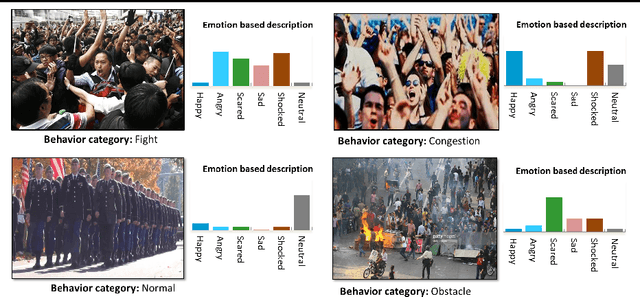

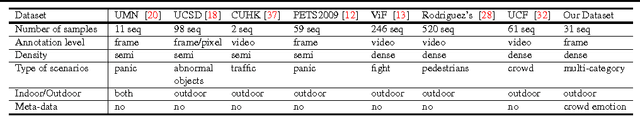

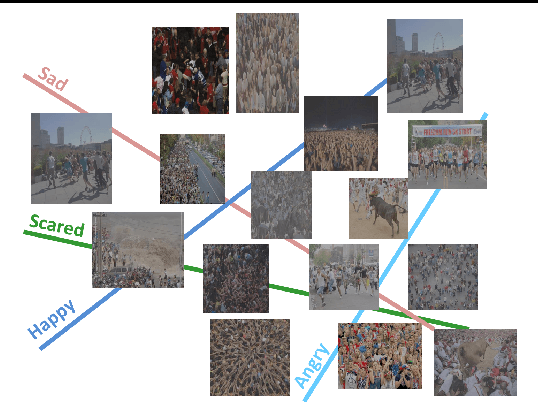

Abstract: In crowd behavior understanding, a model of crowd behavior needs to be trained using information extracted from video sequences. Since no ground truth is available in crowd datasets except the crowd behavior labels, most methods proposed so far rely only on low-level visual features. However, there is a large semantic gap between low-level motion/appearance features and high-level concepts of crowd behavior. In this paper, we propose an attribute-based strategy to alleviate this problem. While similar strategies have recently been adopted for object and action recognition, to the best of our knowledge, we are the first to show that crowd emotions can be used as attributes for crowd behavior understanding. The main idea is to train a set of emotion-based classifiers, which can subsequently be used to represent crowd motion. For this purpose, we collect a large dataset of video clips and annotate them with both "crowd behaviors" and "crowd emotions". The results of the proposed method on our dataset demonstrate that crowd emotions enable the construction of more descriptive models of crowd behavior. We aim to publish the dataset with the article, to be used as a benchmark by the community.
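
The core idea of representing a clip by the outputs of emotion classifiers, rather than by raw low-level features, can be sketched in plain Python as follows. Everything concrete here is an assumption: the logistic emotion scorers and their weights, the emotion names, the one-dimensional "motion magnitude" feature, and the nearest-centroid behavior classifier, which is a deliberately simple stand-in and not the classifier used in the paper.

```python
import math
from typing import Callable, Dict, List, Sequence

Scorer = Callable[[Sequence[float]], float]


def logistic_scorer(weights: Sequence[float], bias: float) -> Scorer:
    """Hypothetical emotion classifier: sigmoid of a linear function of low-level features."""
    def score(x: Sequence[float]) -> float:
        z = sum(w * v for w, v in zip(weights, x)) + bias
        return 1.0 / (1.0 + math.exp(-z))
    return score


def emotion_representation(low_level: Sequence[float], scorers: Dict[str, Scorer]) -> List[float]:
    """Attribute vector: one emotion score per classifier, ordered by emotion name."""
    return [scorers[name](low_level) for name in sorted(scorers)]


def fit_centroids(reps: Sequence[Sequence[float]], labels: Sequence[str]) -> Dict[str, List[float]]:
    """Mean attribute vector per behavior label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for rep, label in zip(reps, labels):
        acc = sums.setdefault(label, [0.0] * len(rep))
        for i, value in enumerate(rep):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict_behavior(rep: Sequence[float], centroids: Dict[str, List[float]]) -> str:
    """Assign the behavior label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(rep, centroids[label]))


if __name__ == "__main__":
    scorers = {
        "panic": logistic_scorer([10.0], -5.0),  # rises with motion magnitude
        "calm": logistic_scorer([-10.0], 5.0),   # falls with motion magnitude
    }
    clips = [[0.1], [0.2], [0.9], [0.8]]  # toy 1-D "motion magnitude" features
    labels = ["normal", "normal", "abnormal", "abnormal"]
    reps = [emotion_representation(c, scorers) for c in clips]
    centroids = fit_centroids(reps, labels)
    print(predict_behavior(emotion_representation([0.85], scorers), centroids))
```

Because the behavior classifier only sees emotion scores, the representation stays low-dimensional and interpretable; a stronger classifier could replace the nearest-centroid step without changing `emotion_representation`.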
