Hamad Alamri

A BERT-based Empirical Study of Privacy Policies' Compliance with GDPR

Jul 09, 2024

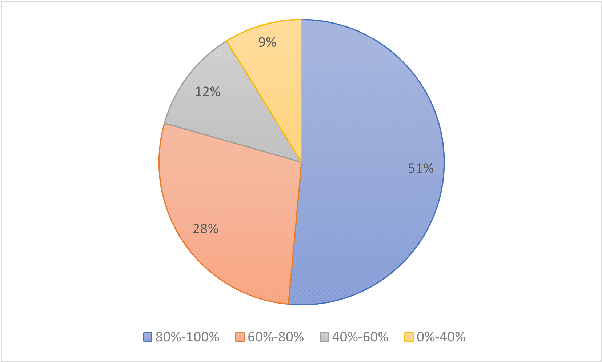

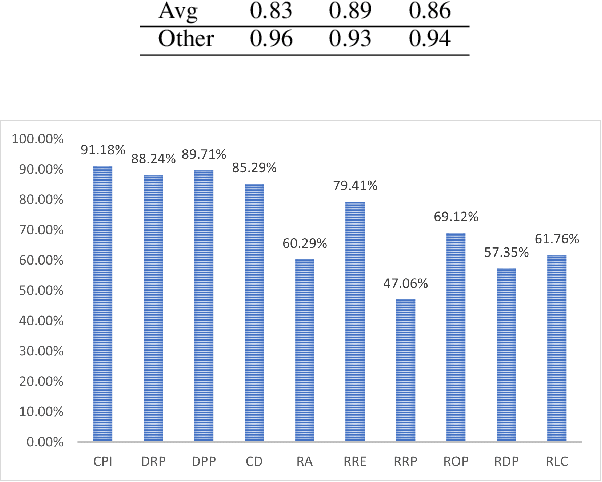

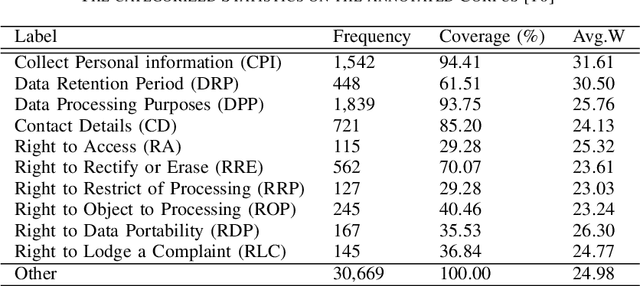

Abstract: Since its implementation in May 2018, the General Data Protection Regulation (GDPR) has prompted businesses to revisit and revise their data handling practices to ensure compliance. The privacy policy, which serves as the primary means of informing users about their privacy rights and the data practices of companies, has been significantly updated by numerous businesses since GDPR came into force. However, many privacy policies remain packed with technical jargon, lengthy explanations, and vague descriptions of data practices and user rights. This makes it challenging for users and regulatory authorities to manually verify the GDPR compliance of these privacy policies. In this study, we address the challenge of analysing the compliance of privacy policies for 5G networks with GDPR (Article 13). We manually collected privacy policies from almost 70 different 5G mobile network operators (MNOs) and used an automated BERT-based model for classification. We show that an encouraging 51% of companies demonstrate strong adherence to GDPR. In addition, we present the first study that provides current empirical evidence on the readability of privacy policies for 5G networks. We adopted a readability analysis toolset that incorporates various established readability metrics. The findings show empirically that the readability of the majority of current privacy policies remains a significant challenge. Hence, 5G providers need to invest considerable effort in revising these documents to enhance both their utility and the overall user experience.
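To make the notion of a readability metric concrete, the following is a minimal sketch of one established metric, the Flesch Reading Ease score. The abstract does not say which metrics the toolset includes, so the choice of this metric is an assumption, and the names `count_syllables` and `flesch_reading_ease` as well as the vowel-group syllable heuristic are purely illustrative.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic (an assumption, not a dictionary lookup):
    count runs of consecutive vowels, with a minimum of one."""
    groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(groups))


def flesch_reading_ease(text: str) -> float:
    """Flesch Reading Ease: higher scores indicate easier text.

    score = 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (
        206.835
        - 1.015 * (len(words) / len(sentences))
        - 84.6 * (syllables / len(words))
    )


# Two made-up policy snippets, used only for illustration.
plain = "We collect your name. We use it to send bills."
dense = "Personal information is processed pursuant to applicable regulatory obligations."

print(f"plain: {flesch_reading_ease(plain):.1f}")
print(f"dense: {flesch_reading_ease(dense):.1f}")
```

Under this heuristic the short, plainly worded snippet scores higher (easier) than the jargon-heavy one, which is the kind of contrast a readability analysis of privacy policies would be expected to surface.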

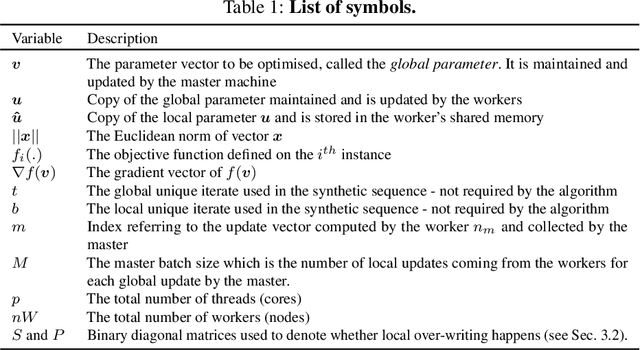

Scaling up Stochastic Gradient Descent for Non-convex Optimisation

Oct 06, 2022

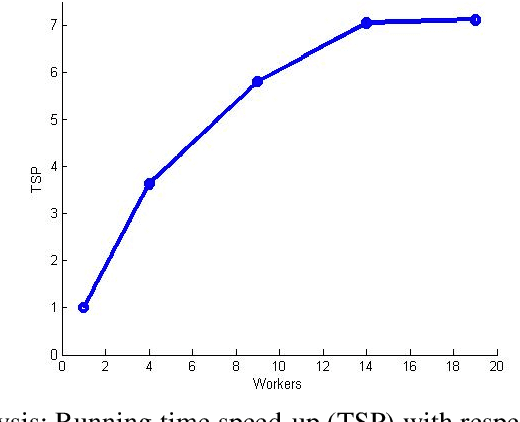

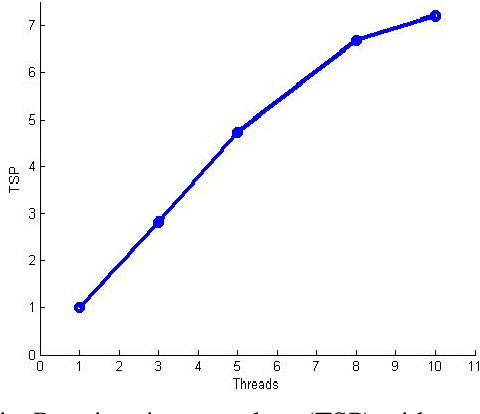

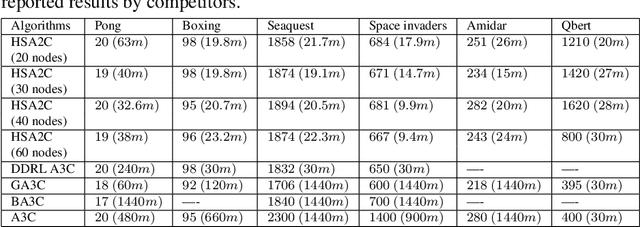

Abstract: Stochastic gradient descent (SGD) is a widely adopted iterative method for optimizing differentiable objective functions. In this paper, we propose and discuss a novel approach to scale up SGD in applications involving non-convex functions and large datasets. We address the bottleneck problem arising when using both shared and distributed memory. Typically, the former is bounded by limited computation resources and bandwidth, whereas the latter suffers from communication overheads. We propose a unified distributed and parallel implementation of SGD (named DPSGD) that relies on both asynchronous distribution and lock-free parallelism. By combining the two strategies into a unified framework, DPSGD is able to strike a better trade-off between local computation and communication. The convergence properties of DPSGD are studied for non-convex problems such as those arising in statistical modelling and machine learning. Our theoretical analysis shows that DPSGD leads to speed-up with respect to the number of cores and the number of workers while guaranteeing an asymptotic convergence rate of $O(1/\sqrt{T})$, given that the number of cores is bounded by $T^{1/4}$ and the number of workers is bounded by $T^{1/2}$, where $T$ is the number of iterations. The potential gains that can be achieved by DPSGD are demonstrated empirically on a stochastic variational inference problem (Latent Dirichlet Allocation) and on a deep reinforcement learning (DRL) problem (advantage actor-critic, A2C), resulting in two algorithms: DPSVI and HSA2C. Empirical results validate our theoretical findings. Comparative studies are conducted to show the performance of the proposed DPSGD against state-of-the-art DRL algorithms.
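As a worked illustration of the scaling conditions quoted in the abstract, the sketch below computes the largest core and worker counts that satisfy the $T^{1/4}$ and $T^{1/2}$ bounds for a given number of iterations $T$. It assumes the bounds hold with a constant factor of 1, which the abstract does not state, and the function name `dpsgd_scaling_limits` is hypothetical.

```python
from math import isqrt


def dpsgd_scaling_limits(num_iterations: int) -> tuple[int, int]:
    """Return (max_cores, max_workers) under the abstract's conditions,
    assuming constant factors of 1 (an assumption for illustration):

        cores   <= T^(1/4)
        workers <= T^(1/2)
    """
    if num_iterations < 1:
        raise ValueError("num_iterations must be a positive integer")
    # Integer square roots avoid floating-point rounding at exact powers.
    max_workers = isqrt(num_iterations)   # floor(T^(1/2))
    max_cores = isqrt(max_workers)        # floor(T^(1/4))
    return max_cores, max_workers


cores, workers = dpsgd_scaling_limits(10**8)
print(f"T = 10^8: up to {cores} cores and {workers} workers")
```

For $T = 10^8$ iterations this gives at most 100 cores and 10,000 workers, which shows that the admissible degree of parallelism grows with the length of the run but much more slowly than $T$ itself.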
