Abdelhamid Bouchachia

Event Detection for Non-intrusive Load Monitoring using Tukey s Fences

Feb 27, 2024

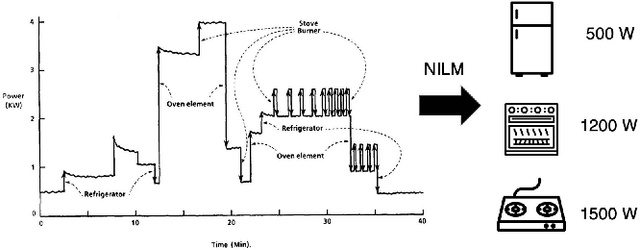

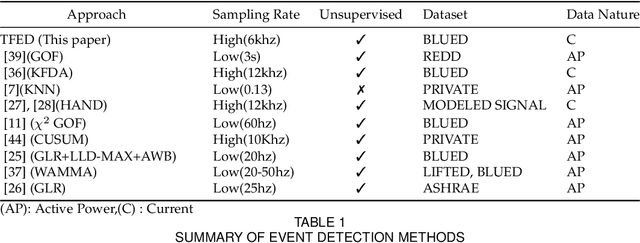

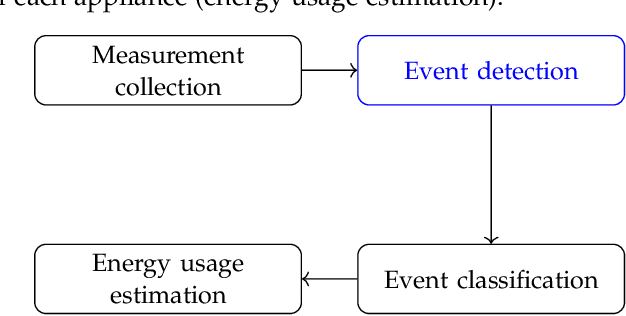

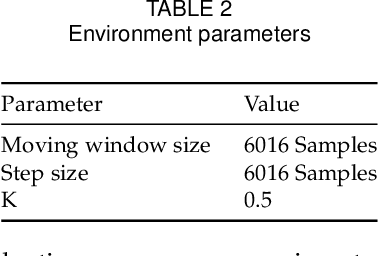

Abstract:The primary objective of non-intrusive load monitoring (NILM) techniques is to monitor and track power consumption within residential buildings. This is achieved by approximating the consumption of each individual appliance from the aggregate energy measurements. Event-based NILM solutions are generally more accurate than other methods. Our paper introduces a novel event detection algorithm called Tukey's Fences-based event detector (TFED). This algorithm uses the fast Fourier transform in conjunction with the Tukey fences rule to detect variations in the aggregated current signal. The primary benefit of TFED is its superior ability to accurately pinpoint the start times of events, as demonstrated through simulations. Our findings reveal that the proposed algorithm boasts an impressive 99% accuracy rate, surpassing the accuracy of other recent works in the literature such as the Cepstrum and $\chi ^2$ GOF statistic-based analyses, which only achieved 98% accuracy.

Scaling up Stochastic Gradient Descent for Non-convex Optimisation

Oct 06, 2022

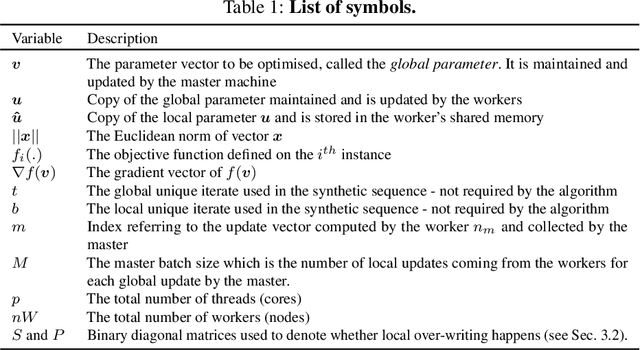

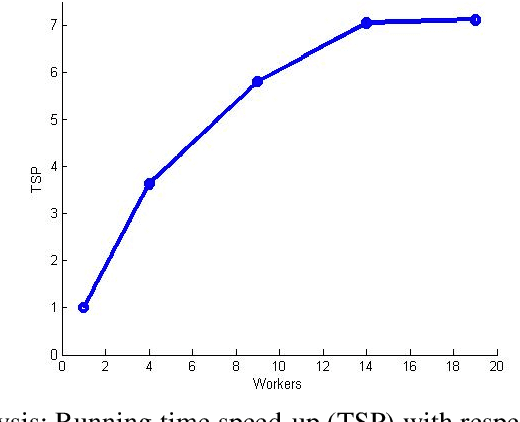

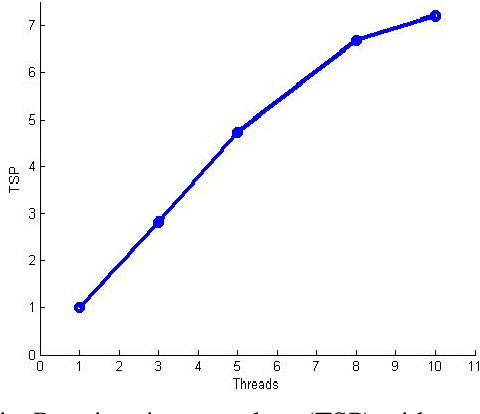

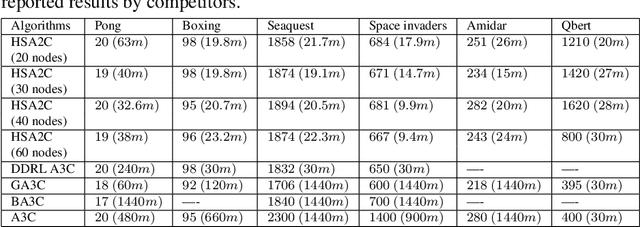

Abstract:Stochastic gradient descent (SGD) is a widely adopted iterative method for optimizing differentiable objective functions. In this paper, we propose and discuss a novel approach to scale up SGD in applications involving non-convex functions and large datasets. We address the bottleneck problem arising when using both shared and distributed memory. Typically, the former is bounded by limited computation resources and bandwidth whereas the latter suffers from communication overheads. We propose a unified distributed and parallel implementation of SGD (named DPSGD) that relies on both asynchronous distribution and lock-free parallelism. By combining two strategies into a unified framework, DPSGD is able to strike a better trade-off between local computation and communication. The convergence properties of DPSGD are studied for non-convex problems such as those arising in statistical modelling and machine learning. Our theoretical analysis shows that DPSGD leads to speed-up with respect to the number of cores and number of workers while guaranteeing an asymptotic convergence rate of $O(1/\sqrt{T})$ given that the number of cores is bounded by $T^{1/4}$ and the number of workers is bounded by $T^{1/2}$ where $T$ is the number of iterations. The potential gains that can be achieved by DPSGD are demonstrated empirically on a stochastic variational inference problem (Latent Dirichlet Allocation) and on a deep reinforcement learning (DRL) problem (advantage actor critic - A2C) resulting in two algorithms: DPSVI and HSA2C. Empirical results validate our theoretical findings. Comparative studies are conducted to show the performance of the proposed DPSGD against the state-of-the-art DRL algorithms.

Evolving Neural Networks with Optimal Balance between Information Flow and Connections Cost

Mar 14, 2022

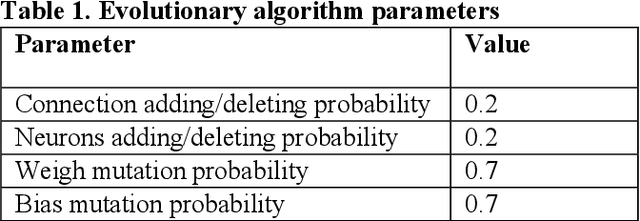

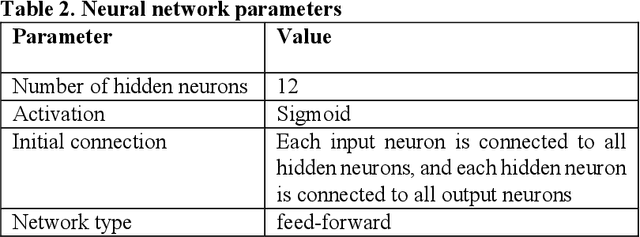

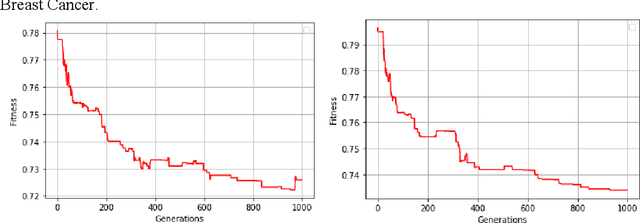

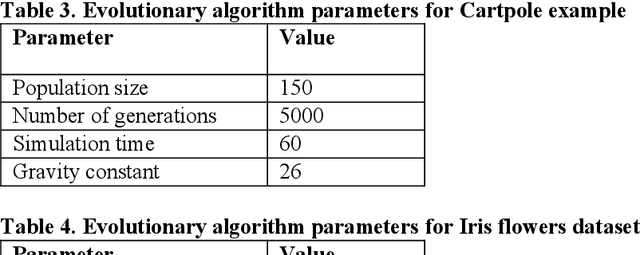

Abstract:Evolving Neural Networks (NNs) has recently seen an increasing interest as an alternative path that might be more successful. It has many advantages compared to other approaches, such as learning the architecture of the NNs. However, the extremely large search space and the existence of many complex interacting parts still represent a major obstacle. Many criteria were recently investigated to help guide the algorithm and to cut down the large search space. Recently there has been growing research bringing insights from network science to improve the design of NNs. In this paper, we investigate evolving NNs architectures that have one of the most fundamental characteristics of real-world networks, namely the optimal balance between connections cost and information flow. The performance of different metrics that represent this balance is evaluated and the improvement in the accuracy of putting more selection pressure toward this balance is demonstrated on three datasets.

Toward Building Science Discovery Machines

Apr 05, 2021Abstract:The dream of building machines that can do science has inspired scientists for decades. Remarkable advances have been made recently; however, we are still far from achieving this goal. In this paper, we focus on the scientific discovery process where a high level of reasoning and remarkable problem-solving ability are required. We review different machine learning techniques used in scientific discovery with their limitations. We survey and discuss the main principles driving the scientific discovery process. These principles are used in different fields and by different scientists to solve problems and discover new knowledge. We provide many examples of the use of these principles in different fields such as physics, mathematics, and biology. We also review AI systems that attempt to implement some of these principles. We argue that building science discovery machines should be guided by these principles as an alternative to the dominant approach of current AI systems that focuses on narrow objectives. Building machines that fully incorporate these principles in an automated way might open the doors for many advancements.

Online Gaussian LDA for Unsupervised Pattern Mining from Utility Usage Data

Oct 25, 2019

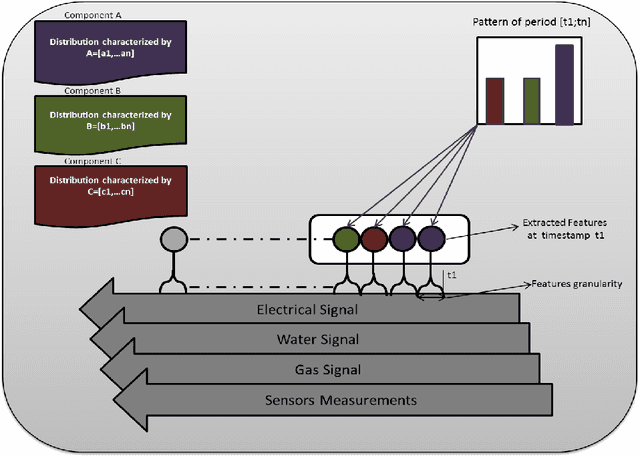

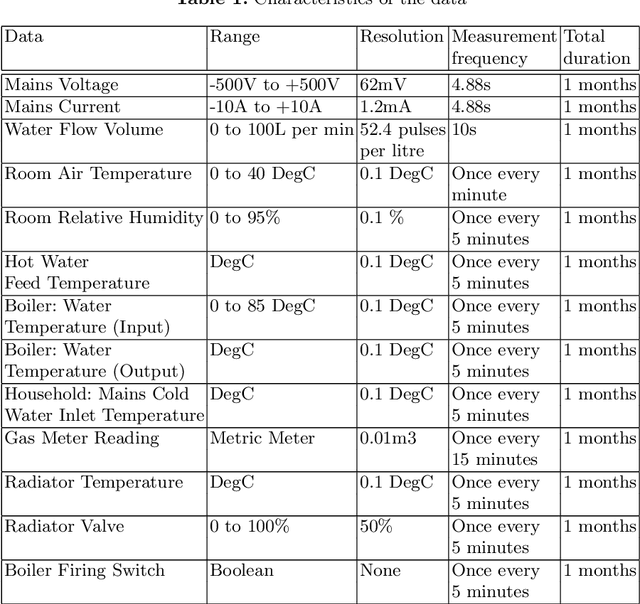

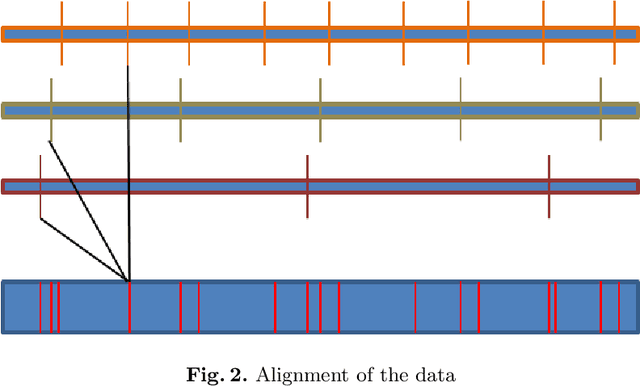

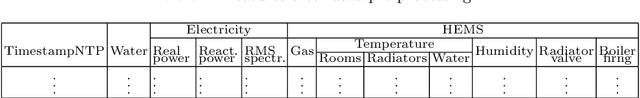

Abstract:Non-intrusive load monitoring (NILM) aims at separating a whole-home energy signal into its appliance components. Such method can be harnessed to provide various services to better manage and control energy consumption (optimal planning and saving). NILM has been traditionally approached from signal processing and electrical engineering perspectives. Recently, machine learning has started to play an important role in NILM. While most work has focused on supervised algorithms, unsupervised approaches can be more interesting and of practical use in real case scenarios. Specifically, they do not require labelled training data to be acquired from individual appliances and the algorithm can be deployed to operate on the measured aggregate data directly. In this paper, we propose a fully unsupervised NILM framework based on Bayesian hierarchical mixture models. In particular, we develop a new method based on Gaussian Latent Dirichlet Allocation (GLDA) in order to extract global components that summarise the energy signal. These components provide a representation of the consumption patterns. Designed to cope with big data, our algorithm, unlike existing NILM ones, does not focus on appliance recognition. To handle this massive data, GLDA works online. Another novelty of this work compared to the existing NILM is that the data involves different utilities (e.g, electricity, water and gas) as well as some sensors measurements. Finally, we propose different evaluation methods to analyse the results which show that our algorithm finds useful patterns.

Asynchronous Stochastic Variational Inference

Jan 12, 2018

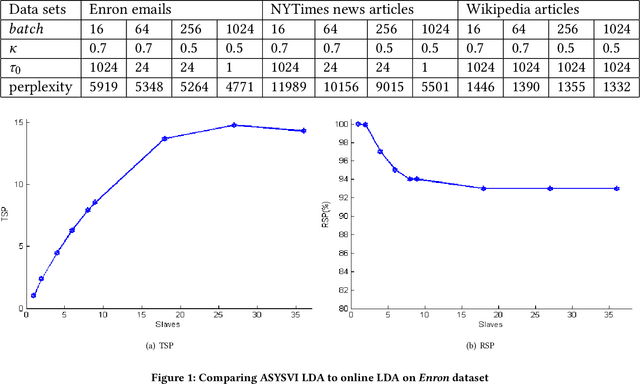

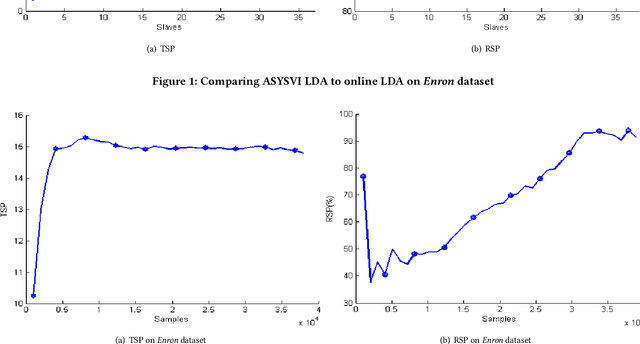

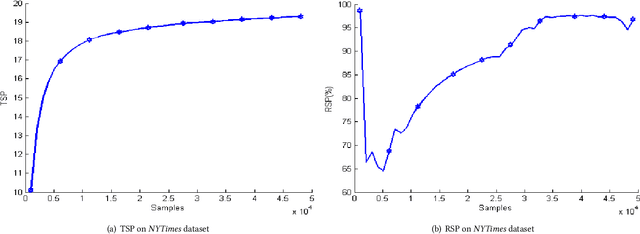

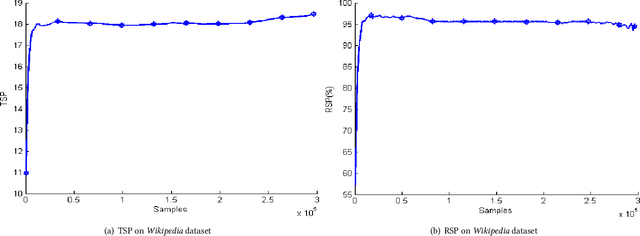

Abstract:Stochastic variational inference (SVI) employs stochastic optimization to scale up Bayesian computation to massive data. Since SVI is at its core a stochastic gradient-based algorithm, horizontal parallelism can be harnessed to allow larger scale inference. We propose a lock-free parallel implementation for SVI which allows distributed computations over multiple slaves in an asynchronous style. We show that our implementation leads to linear speed-up while guaranteeing an asymptotic ergodic convergence rate $O(1/\sqrt(T)$ ) given that the number of slaves is bounded by $\sqrt(T)$ ($T$ is the total number of iterations). The implementation is done in a high-performance computing (HPC) environment using message passing interface (MPI) for python (MPI4py). The extensive empirical evaluation shows that our parallel SVI is lossless, performing comparably well to its counterpart serial SVI with linear speed-up.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge