Haichang Li

Multiple Fusion Adaptation: A Strong Framework for Unsupervised Semantic Segmentation Adaptation

Dec 01, 2021

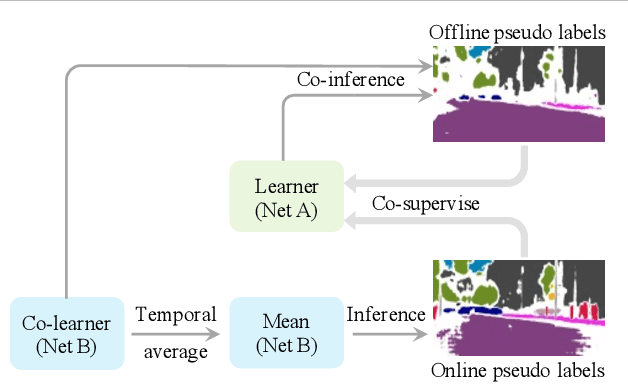

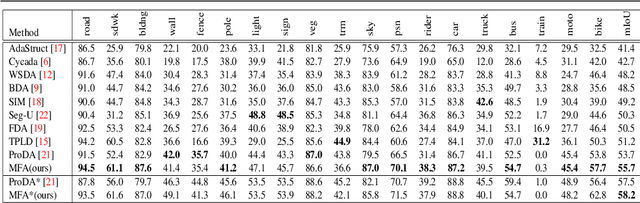

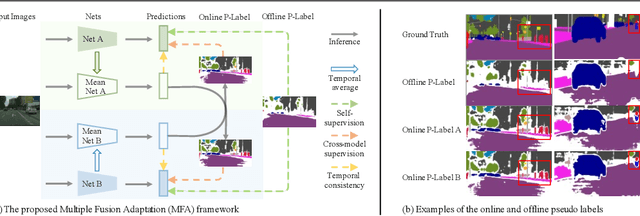

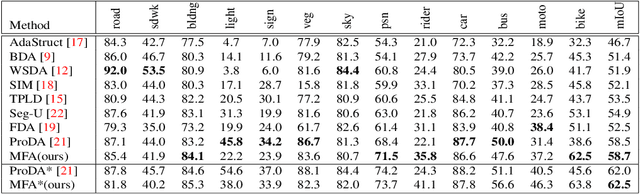

Abstract: This paper addresses cross-domain semantic segmentation, aiming to improve segmentation accuracy on the unlabeled target domain without incurring additional annotation cost. Building on the pseudo-label-based unsupervised domain adaptation (UDA) pipeline, we propose a novel and effective Multiple Fusion Adaptation (MFA) method. MFA combines three parallel information fusion strategies: cross-model fusion, temporal fusion, and a novel online-offline pseudo label fusion. Specifically, the online-offline pseudo label fusion encourages adaptive training to pay additional attention to difficult regions that are easily ignored by offline pseudo labels, thereby retaining more informative details. While the other two fusion strategies may look standard, MFA devotes significant effort to making their integration efficient and effective, and succeeds in combining all three strategies in a unified framework. Experiments on two widely used benchmarks, GTA5-to-Cityscapes and SYNTHIA-to-Cityscapes, show that our method significantly improves semantic segmentation adaptation and sets a new state of the art (58.2% and 62.5% mIoU, respectively). The code will be available at https://github.com/KaiiZhang/MFA.
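The abstract does not spell out how online and offline pseudo labels are fused, so the following is only a minimal sketch of one plausible reading: pixels that the offline pseudo labels leave unlabeled are filled with confident online predictions. The function name `fuse_pseudo_labels`, the ignore index `255`, and the confidence threshold `0.9` are all assumptions made for illustration and are not taken from the paper or its code release.

```python
import numpy as np

IGNORE = 255  # assumed ignore index marking pixels without an offline pseudo label


def fuse_pseudo_labels(offline, online_probs, threshold=0.9):
    """Fill pixels ignored offline with confident online predictions.

    offline:      (H, W) integer array of offline pseudo labels, IGNORE where unlabeled.
    online_probs: (C, H, W) array of per-class probabilities from the current model.
    threshold:    hypothetical confidence cutoff for accepting an online prediction.
    """
    online_labels = online_probs.argmax(axis=0)  # most likely class per pixel
    online_conf = online_probs.max(axis=0)       # confidence of that class
    # Only touch pixels the offline labels skipped and the online model is sure about.
    fill = (offline == IGNORE) & (online_conf >= threshold)
    fused = offline.copy()
    fused[fill] = online_labels[fill]
    return fused


# Toy 2x2 example with two classes.
offline = np.array([[0, IGNORE], [IGNORE, 1]])
online_probs = np.array([
    [[0.8, 0.05], [0.5, 0.1]],  # class 0 probabilities
    [[0.2, 0.95], [0.5, 0.9]],  # class 1 probabilities
])
fused = fuse_pseudo_labels(offline, online_probs)
print(fused)
```

In this toy input, the top-right pixel is unlabeled offline but predicted online with confidence 0.95, so it receives a label; the bottom-left pixel stays ignored because the online model is undecided there.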

Cross Modification Attention Based Deliberation Model for Image Captioning

Sep 17, 2021

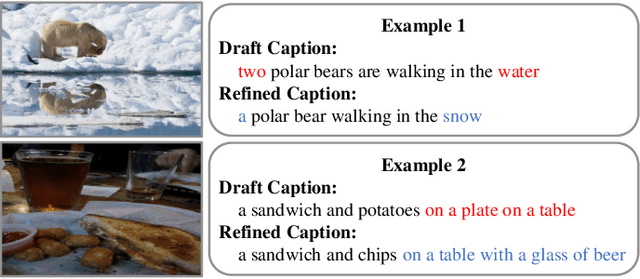

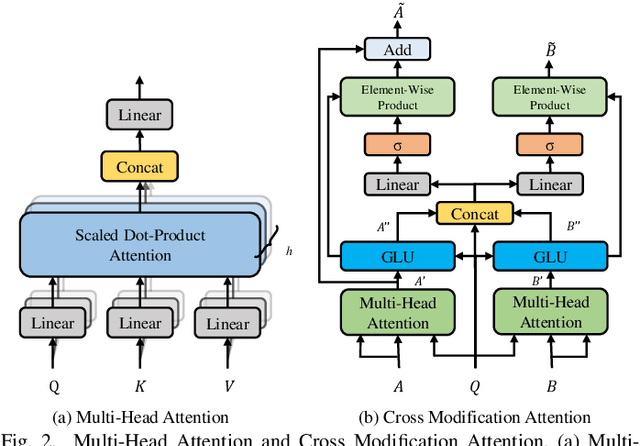

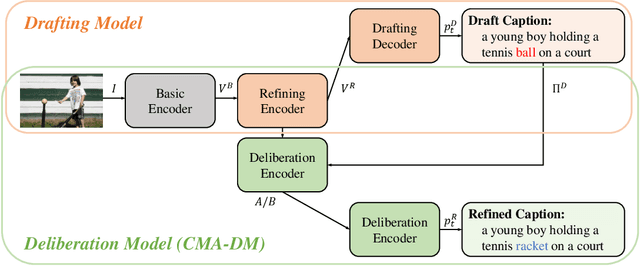

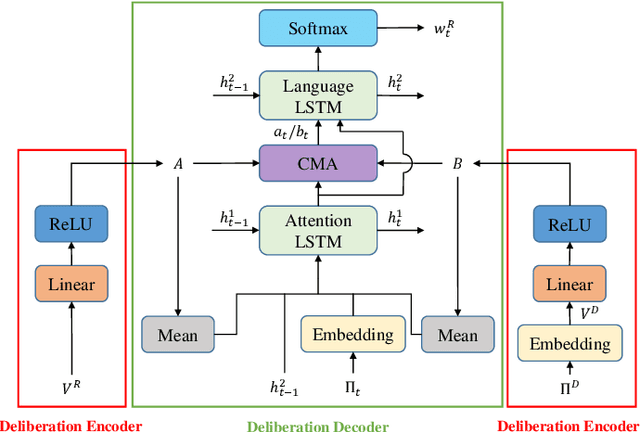

Abstract: The conventional encoder-decoder framework for image captioning generally adopts a single-pass decoding process, which predicts the target descriptive sentence word by word in temporal order. Despite the great success of this framework, it still suffers from two serious disadvantages. First, it cannot correct mistakes in the predicted words, which may mislead subsequent predictions and cause errors to accumulate. Second, such a framework can only leverage the words already generated, not possible future words, and thus lacks the ability to plan globally over linguistic information. To overcome these limitations, we explore a universal two-pass decoding framework, in which a single-pass decoding model serving as the Drafting Model first generates a draft caption from an input image, and a Deliberation Model then polishes the draft into a better image description. Furthermore, inspired by the complementarity between different modalities, we propose a novel Cross Modification Attention (CMA) module to enhance the semantic expression of the image features and filter out erroneous information from the draft captions. We integrate CMA with the decoder of our Deliberation Model and name the result the Cross Modification Attention based Deliberation Model (CMA-DM). We train the proposed framework by jointly optimizing all trainable components from scratch with a trade-off coefficient. Experiments on the MS COCO dataset demonstrate that our approach obtains significant improvements over single-pass decoding baselines and achieves competitive performance compared with other state-of-the-art two-pass decoding methods.
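The two-pass control flow described in the abstract can be sketched roughly as follows. This is an illustration under stated assumptions, not the authors' implementation: `two_pass_caption`, `joint_loss`, the toy stand-in models, and the weighted-sum form of the loss are all hypothetical, since the abstract only says that a trade-off coefficient is used.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces: a drafting model maps an image to a draft caption,
# and a deliberation model maps (image, draft) to a refined caption.
DraftingModel = Callable[[object], List[str]]
DeliberationModel = Callable[[object, List[str]], List[str]]


def two_pass_caption(
    image: object,
    drafting_model: DraftingModel,
    deliberation_model: DeliberationModel,
) -> Tuple[List[str], List[str]]:
    """Run the first pass to get a draft, then a second pass that sees the whole draft."""
    draft = drafting_model(image)
    refined = deliberation_model(image, draft)
    return draft, refined


def joint_loss(draft_loss: float, deliberation_loss: float, trade_off: float) -> float:
    """Assumed weighted-sum objective; the paper's exact form is not given in the abstract."""
    return draft_loss + trade_off * deliberation_loss


# Toy stand-ins so the sketch runs end to end (no real models involved).
def toy_drafter(image: object) -> List[str]:
    return ["a", "dog", "sit", "on", "grass"]


def toy_deliberator(image: object, draft: List[str]) -> List[str]:
    corrections = {"sit": "sits"}  # pretend fix learned from full-sentence context
    return [corrections.get(word, word) for word in draft]


draft, refined = two_pass_caption(None, toy_drafter, toy_deliberator)
total = joint_loss(draft_loss=2.0, deliberation_loss=1.0, trade_off=0.5)
print(draft, refined, total)
```

The point of the structure is that the second pass receives the complete draft, so it can use both earlier and later words when revising any position.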
