Guy Cazuguel

Real-time analysis of cataract surgery videos using statistical models

Oct 18, 2016

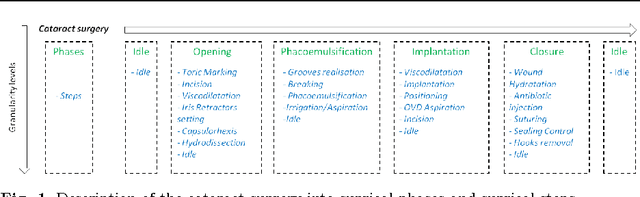

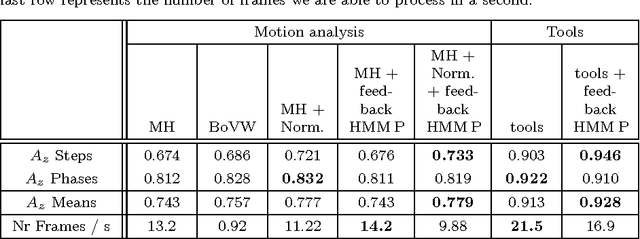

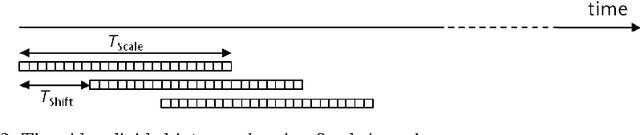

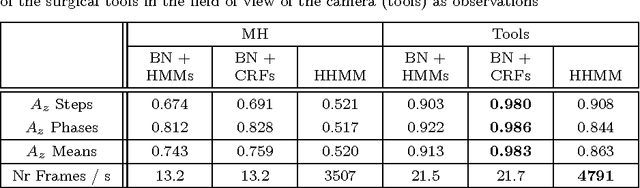

Abstract: The automatic analysis of the surgical process, from videos recorded during surgeries, could be very useful to surgeons, both for training and for acquiring new techniques. The training process could be optimized by automatically providing targeted recommendations or warnings, similar to an expert surgeon's guidance. In this paper, we propose to reuse videos recorded and stored during cataract surgeries to perform the analysis. The proposed system automatically recognizes, in real time, what the surgeon is doing: which surgical phase or, more precisely, which surgical step he or she is performing. This recognition relies on inference in a multilevel statistical model that uses 1) the conditional relations between levels of description (steps and phases) and 2) the temporal relations among steps and among phases. The model accepts two types of inputs: 1) the presence of surgical tools, manually provided by the surgeons, or 2) motion in videos, automatically analyzed through the Content-Based Video Retrieval (CBVR) paradigm. Different data-driven statistical models are evaluated in this paper. For this project, a dataset of 30 cataract surgery videos was collected at Brest University Hospital. The system was evaluated in terms of area under the ROC curve. Promising results were obtained using either the presence of surgical tools ($A_z$ = 0.983) or motion analysis ($A_z$ = 0.759). The generality of the method allows it to be adapted to any kind of surgery. The proposed solution could be used in a computer-assisted surgery tool to support surgeons during surgery.
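
To make the idea of real-time recognition from temporal relations more concrete, here is a minimal sketch of online forward filtering in a single-level hidden Markov model. It is not the paper's multilevel model: the phase names, the transition matrix, the single hypothetical tool ("knife"), and every probability below are assumptions invented for illustration.

```python
def forward_step(belief, transition, likelihood):
    """One online filtering update for a discrete hidden Markov model.

    belief[i]        : P(phase = i | observations so far)
    transition[i][j] : P(next phase = j | current phase = i)
    likelihood[j]    : P(current observation | phase = j)
    """
    n = len(belief)
    # Predict: propagate the belief through the temporal model.
    predicted = [sum(belief[i] * transition[i][j] for i in range(n))
                 for j in range(n)]
    # Correct: weight each phase by how well it explains the observation.
    unnorm = [predicted[j] * likelihood[j] for j in range(n)]
    total = sum(unnorm)
    if total == 0.0:
        # Observation impossible under every phase: keep the prediction.
        return predicted
    return [u / total for u in unnorm]


# Hypothetical two-phase setup (names and numbers are illustrative only).
PHASES = ["incision", "implantation"]
TRANSITION = [[0.9, 0.1],   # incision mostly persists, sometimes advances
              [0.0, 1.0]]   # implantation never goes back to incision
P_KNIFE = [0.8, 0.1]        # assumed P(knife visible | phase)


def filter_sequence(knife_seen):
    """Return the phase belief after each frame-level tool observation."""
    belief = [1.0, 0.0]  # assume the surgery starts in the first phase
    history = []
    for seen in knife_seen:
        lik = [p if seen else 1.0 - p for p in P_KNIFE]
        belief = forward_step(belief, TRANSITION, lik)
        history.append(belief)
    return history


history = filter_sequence([True, True, False, False])
```

Each update uses only the previous belief and the current observation, which is what makes this kind of inference usable in real time. With the assumed numbers, the belief stays on the first phase while the knife is visible and shifts toward the second phase once it disappears.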

Coarse-to-fine Surgical Instrument Detection for Cataract Surgery Monitoring

Sep 19, 2016

Abstract: The amount of surgical data recorded during video-monitored surgeries has increased dramatically. This paper aims at improving existing solutions for the automated analysis of cataract surgeries in real time. By analyzing a video of the operating table, it is possible to know which instruments leave or return to the table, and therefore which ones are likely being used by the surgeon. Combining these observations with observations from the microscope video should enhance the overall performance of such systems. To this end, the proposed solution is divided into two main parts: one to detect the instruments at the beginning of the surgery and one to update the list of instruments every time a change is detected in the scene. In the first part, the goal is to separate the instruments from the background and from irrelevant objects. In the second part, the goal is to detect the instruments that appear and disappear whenever the surgeon interacts with the table. Experiments on a dataset of 36 cataract surgeries validate the good performance of the proposed solution.
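
The two-part scheme described above (an initial inventory, then an update at each detected change) can be sketched with plain set operations. This is only an illustration of the bookkeeping, assuming a detector that already returns instrument labels. The function names and the labels `knife`, `forceps`, and `cannula` are hypothetical, and the paper's actual detection method is not reproduced here.

```python
def update_instruments(on_table, detected_now):
    """Compare the previous table inventory with a fresh detection.

    Returns the new inventory, the instruments that left the table
    (likely in use), and the instruments that came back or were added.
    """
    taken = on_table - detected_now
    returned = detected_now - on_table
    return set(detected_now), taken, returned


def track_in_use(initial, detections):
    """Yield the set of instruments presumed in use after each change."""
    on_table = set(initial)
    in_use = set()
    for detected in detections:
        on_table, taken, returned = update_instruments(on_table, set(detected))
        # Instruments that left are assumed in use until they return.
        in_use = (in_use | taken) - returned
        yield set(in_use)


# Hypothetical labels standing in for the output of a real detector.
initial = {"knife", "forceps", "cannula"}
changes = [{"forceps", "cannula"},   # knife picked up
           {"knife", "cannula"}]     # knife put back, forceps picked up
states = list(track_in_use(initial, changes))
```

Under these assumptions, `states` lists the knife as in use after the first change and the forceps after the second.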
