Guorong Hu

Coordinate-conditioned Deconvolution for Scalable Spatially Varying High-Throughput Imaging

Feb 01, 2026

Abstract:Wide-field fluorescence microscopy with compact optics often suffers from spatially varying blur due to field-dependent aberrations, vignetting, and sensor truncation, while finite sensor sampling imposes an inherent trade-off between field of view (FOV) and resolution. The Computational Miniaturized Mesoscope (CM2) alleviates the sampling limit by multiplexing multiple sub-views onto a single sensor, but introduces view crosstalk and a highly ill-conditioned inverse problem compounded by spatially variant point spread functions (PSFs). Prior learning-based spatially varying (SV) reconstruction methods typically rely on global SV operators with fixed input sizes, resulting in memory and training costs that scale poorly with image dimensions. We propose SV-CoDe (Spatially Varying Coordinate-conditioned Deconvolution), a scalable deep learning framework that achieves uniform, high-resolution reconstruction across a 6.5 mm FOV. Unlike conventional methods, SV-CoDe employs coordinate-conditioned convolutions to locally adapt reconstruction kernels; this enables patch-based training that decouples parameter count from FOV size. SV-CoDe achieves the best image quality in both simulated and experimental measurements while requiring a 10x smaller model and 10x less training data than prior baselines. Trained purely on physics-based simulations, the network robustly generalizes to bead phantoms, weakly scattering brain slices, and freely moving C. elegans. SV-CoDe offers a scalable, physics-aware solution for correcting SV blur in compact optical systems and is readily extendable to a broad range of biomedical imaging applications.
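To illustrate the general idea of conditioning a kernel on field position, the following minimal sketch blends a small bank of basis kernels with weights computed from a patch's normalized coordinates. It is not the SV-CoDe architecture: the basis kernels, the `radial_weights` rule, and all function names are hypothetical, chosen only to show why the parameter count (the basis bank) does not grow with the FOV.

```python
import numpy as np

def make_basis():
    """Hypothetical bank of two 3x3 basis kernels: identity and box blur."""
    delta = np.zeros((3, 3))
    delta[1, 1] = 1.0
    box = np.full((3, 3), 1.0 / 9.0)
    return np.stack([delta, box])  # shape (K=2, 3, 3)

def radial_weights(cx, cy):
    """Assumed weighting rule: blend towards the second kernel with field radius."""
    r = min(1.0, float(np.hypot(cx, cy)))
    return np.array([1.0 - r, r])

def coord_conditioned_kernel(basis, weight_fn, cx, cy):
    """Build one kernel for the patch centred at normalized coordinates (cx, cy)."""
    w = weight_fn(cx, cy)                   # shape (K,)
    return np.tensordot(w, basis, axes=1)   # shape (kh, kw)

def filter_same(img, kernel):
    """Zero-padded 'same' cross-correlation (the deep learning 'convolution').

    Assumes odd kernel dimensions.
    """
    kh, kw = kernel.shape
    h, w = img.shape
    padded = np.pad(img, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.zeros((h, w), dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + h, j:j + w]
    return out

def apply_patch(patch, cx, cy, basis, weight_fn=radial_weights):
    """Filter a single patch with the kernel selected by its coordinates."""
    return filter_same(patch, coord_conditioned_kernel(basis, weight_fn, cx, cy))
```

Because each patch is processed independently given its coordinates, the same `basis` can be applied to an image of any size by tiling; in a learned model the weighting rule would be a trained function rather than the fixed radial rule assumed here.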

Wide-Field, High-Resolution Reconstruction in Computational Multi-Aperture Miniscope Using a Fourier Neural Network

Mar 11, 2024

Abstract:Traditional fluorescence microscopy is constrained by inherent trade-offs among resolution, field-of-view, and system complexity. To navigate these challenges, we introduce a simple and low-cost computational multi-aperture miniature microscope, utilizing a microlens array for single-shot wide-field, high-resolution imaging. Addressing the challenges posed by extensive view multiplexing and non-local, shift-variant aberrations in this device, we present SV-FourierNet, a novel multi-channel Fourier neural network. SV-FourierNet facilitates high-resolution image reconstruction across the entire imaging field through its learned global receptive field. We establish a close relationship between the physical spatially-varying point-spread functions and the network's learned effective receptive field. This ensures that SV-FourierNet has effectively encapsulated the spatially-varying aberrations in our system, and learned a physically meaningful function for image reconstruction. Training of SV-FourierNet is conducted entirely on a physics-based simulator. We showcase wide-field, high-resolution video reconstructions on colonies of freely moving C. elegans and imaging of a mouse brain section. Our computational multi-aperture miniature microscope, augmented with SV-FourierNet, represents a major advancement in computational microscopy and may find broad applications in biomedical research and other fields requiring compact microscopy solutions.

EventLFM: Event Camera integrated Fourier Light Field Microscopy for Ultrafast 3D imaging

Oct 01, 2023

Abstract:Ultrafast 3D imaging is indispensable for visualizing complex and dynamic biological processes. Conventional scanning-based techniques necessitate an inherent tradeoff between the acquisition speed and space-bandwidth product (SBP). While single-shot 3D wide-field techniques have emerged as an attractive solution, they are still bottlenecked by the synchronous readout constraints of conventional CMOS architectures, thereby limiting the data throughput by frame rate to maintain a high SBP. Here, we present EventLFM, a straightforward and cost-effective system that circumvents these challenges by integrating an event camera with Fourier light field microscopy (LFM), a single-shot 3D wide-field imaging technique. The event camera operates on a novel asynchronous readout architecture, thereby bypassing the frame rate limitations intrinsic to conventional CMOS systems. We further develop a simple and robust event-driven LFM reconstruction algorithm that can reliably reconstruct 3D dynamics from the unique spatiotemporal measurements from EventLFM. We experimentally demonstrate that EventLFM can robustly image fast-moving and rapidly blinking 3D samples at kHz frame rates and, furthermore, showcase EventLFM's ability to achieve 3D tracking of GFP-labeled neurons in freely moving C. elegans. We believe that the combined ultrafast speed and large 3D SBP offered by EventLFM may open up new possibilities across many biomedical applications.
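As a rough illustration of how asynchronous event data can be turned into frames at an effective kHz rate, the sketch below accumulates signed events into fixed-width time bins. This is a generic event-binning step, not the EventLFM reconstruction algorithm; the function name, the `(x, y, t, p)` event layout, and the 1 ms bin width are assumptions made for the example.

```python
import numpy as np

def events_to_frames(x, y, t, p, shape, bin_width):
    """Accumulate signed events into a stack of time-binned frames.

    x, y : integer pixel coordinates of each event
    t    : event timestamps in seconds
    p    : event polarity, +1 or -1
    shape: (height, width) of the sensor
    bin_width: temporal bin size in seconds (1e-3 gives 1 kHz frames)

    Assumes at least one event is supplied.
    """
    x = np.asarray(x, dtype=int)
    y = np.asarray(y, dtype=int)
    p = np.asarray(p, dtype=np.int32)
    t = np.asarray(t, dtype=float)

    # Assign each event to a time bin measured from the first event.
    idx = np.floor((t - t.min()) / bin_width).astype(int)
    frames = np.zeros((idx.max() + 1, *shape), dtype=np.int32)

    # np.add.at sums correctly even when several events hit the same pixel and bin.
    np.add.at(frames, (idx, y, x), p)
    return frames
```

For example, three events at 0 ms, 0.4 ms, and 1.2 ms with `bin_width=1e-3` fall into two frames, the first holding two events and the second holding one.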

Robust single-shot 3D fluorescence imaging in scattering media with a simulator-trained neural network

Mar 22, 2023

Abstract:Imaging through scattering is a pervasive and difficult problem in many biological applications. The high background and the exponentially attenuated target signals due to scattering fundamentally limit the imaging depth of fluorescence microscopy. Light-field systems are favorable for high-speed volumetric imaging, but the 2D-to-3D reconstruction is fundamentally ill-posed and scattering exacerbates the condition of the inverse problem. Here, we develop a scattering simulator that models low-contrast target signals buried in heterogeneous strong background. We then train a deep neural network solely on synthetic data to descatter and reconstruct a 3D volume from a single-shot light-field measurement with low signal-to-background ratio (SBR). We apply this network to our previously developed Computational Miniature Mesoscope and demonstrate the robustness of our deep learning algorithm on a 75-micron-thick fixed mouse brain section and on bulk scattering phantoms with different scattering conditions. The network can robustly reconstruct emitters in 3D with a 2D measurement of SBR as low as 1.05 and as deep as a scattering length. We analyze fundamental tradeoffs based on network design factors and out-of-distribution data that affect the deep learning model's generalizability to real experimental data. Broadly, we believe that our simulator-based deep learning approach can be applied to a wide range of imaging-through-scattering techniques where experimental paired training data is lacking.

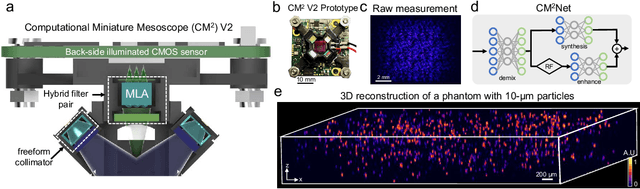

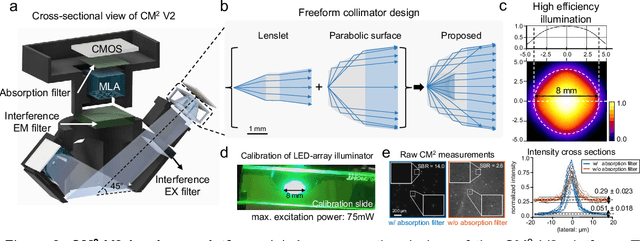

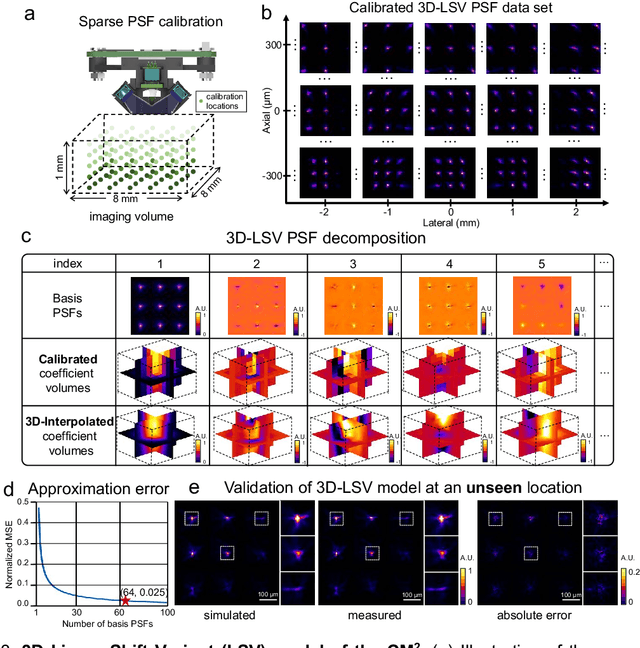

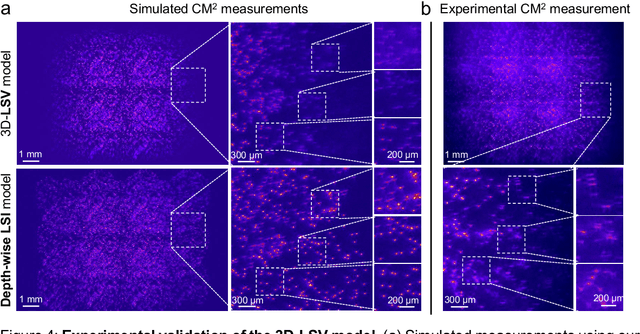

Computational Miniature Mesoscope V2: A deep learning-augmented miniaturized microscope for single-shot 3D high-resolution fluorescence imaging

Apr 30, 2022

Abstract:Computational Miniature Mesoscope (CM2) is a recently developed computational imaging system that enables single-shot 3D imaging across a wide field-of-view (FOV) using a compact optical platform. In this work, we present CM2 V2 - an advanced CM2 system that integrates novel hardware improvements and a new deep learning reconstruction algorithm. The platform features a 3D-printed freeform LED collimator that achieves ~80$\%$ excitation efficiency - a ~3x improvement over our V1 design, and a hybrid emission filter design that improves the measurement contrast by >5x. The new computational pipeline includes an accurate and computationally efficient 3D linear shift-variant (LSV) forward model and a novel multi-module CM2Net deep learning model. Compared with the model-based deconvolution in our V1 system, CM2Net achieves ~8x better axial localization and ~1400x faster reconstruction speed. In addition, CM2Net consistently achieves high detection performance and superior axial localization across a wide FOV under a variety of conditions. Trained entirely on data generated by our 3D-LSV simulator, CM2Net generalizes well to real experiments. We experimentally demonstrate that CM2Net achieves accurate 3D reconstruction of fluorescent emitters across a ~7-mm FOV and 800-$\mu$m depth, and provides ~7-$\mu$m lateral and ~25-$\mu$m axial resolution. We anticipate that this simple and low-cost computational miniature imaging system will be impactful to many large-scale 3D fluorescence imaging applications.
